Security Now 938, Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

0:00:00 - Leo Laporte

It's time for Security Now. Steve Gibson is here, as always, with a fact-filled fun tour through the world of security. We'll talk about Steve giving in to pressure, mostly from me, and developing a free app that will test the integrity of your hard drive. The details on ValiDrive are coming up in just a little bit. Then we'll also talk about why you've got to be really careful about the browser extensions you use, more careful than anybody ever realized. And finally, Apple's response to the demand that they open up their encryption. Steve has all the details coming up next on Security Now. Podcasts you love, from people you trust.

0:00:46 - Steve Gibson

This is TWiT.

0:00:53 - Leo Laporte

This is Security Now with Steve Gibson, episode 938, recorded Tuesday, September 5th, 2023: Apple Says No. Security Now is brought to you by Drata. All too often, security professionals undergo the tedious and arduous task of manually collecting evidence. With Drata, companies can complete audits, monitor controls and expand security assurance efforts to scale. Say goodbye to manual evidence collection and hello to automation, all done at Drata speed.

Visit drata.com/TWIT to get a demo and 10% off implementation. And by Panoptica. Reduce the complexities of protecting your workloads and applications in a multi-cloud environment. Panoptica provides comprehensive cloud workload protection integrated with API security to protect the entire application lifecycle. Learn more about Panoptica at panoptica.app. And by Thinkst Canary. Thousands of irritating false alerts help no one. When somebody's in your network, you'll get the single alert that matters. For 10% off and a 60-day money-back guarantee, go to canary.tools/twit and enter the code TWIT in the "How did you hear about us" box. It's time for Security Now, the moment you've been waiting for all week long. Here he is, the man of the hour, the man of the week, Mr. Steve Gibson. Hello, Steve.

0:02:25 - Steve Gibson

Hello, Leo. Well, it is great to be with you for the beginning of September. And are we about to change our clocks? We have to wait till after, it's...

0:02:34 - Leo Laporte

October now, right? They keep moving it. It has to be after Halloween. Actually, it's November, because of the sugar industry. That's right.

0:02:42 - Steve Gibson

We want to keep the light on for the kiddies.

0:02:45 - Leo Laporte

So they said no, please change it after Halloween, please.

0:02:48 - Steve Gibson

I don't think anyone trick-or-treats anymore. They go to malls and like schools and things to do that.

0:02:54 - Leo Laporte

Too much stranger danger out there. That's right, we don't know about the house.

0:02:58 - Steve Gibson

So we have Security Now, episode 938, for this beginning of September, the fifth, titled "Apple Says No." And of course, you know what we'll be talking about, Leo, because you've been talking about it on your previous podcast. But this week we have our first sneak peek at ValiDrive, which is the freeware I decided to quickly create to allow any Windows user to check any of their USB-connected drives. I'm just going to show some screenshots before we begin in earnest. There's been another sighting of Google's Topics API. Where was that? Has Apple actually decided to open their iPhone to researchers? And what did some quite sobering research reveal about our need to absolutely, positively trust each and every browser extension we install? And really, why was that sort of obvious in retrospect? We're then going to entertain some great feedback from our amazing listeners. I got a bunch of great stuff in the past week. And then we're going to conclude by looking at the exclusive club which Apple has just declared membership has made complete. So, I think, another great podcast for our listeners, and we have a terrific picture of the week too.

0:04:26 - Leo Laporte

That's a very Yoda-like riddle you just told. Apple just decided to complete... Okay, we'll find out. We'll find out. Yoda will tell us what that all is.

But first, a word from our sponsor, Drata. Is your organization finding it difficult to collect manual evidence and achieve continuous compliance as it grows and scales? As a leader in cloud compliance software, so named by G2, Drata streamlines your SOC 2, your ISO 27001, your PCI DSS, your GDPR, your HIPAA and other compliance frameworks, providing 24-hour continuous control monitoring so you can focus on scaling securely. With a suite of more than 75 integrations, Drata easily integrates through applications like AWS and Azure and GitHub, Okta, Cloudflare and on and on and on. Countless security professionals from companies including Lemonade and Notion and BambooHR have all shared how crucial it has been to have Drata as a trusted partner in the compliance process. You can expand your security assurance efforts using the Drata platform, which allows you to see all of your controls and easily map them to compliance frameworks. Among other things, it gives you immediate insight into framework overlap. It also tells you where the gaps are, right? Drata's automated dynamic policy templates support companies just getting started in compliance, using integrated security awareness training programs and automated reminders to ensure smooth employee onboarding. And, as the only player in the industry to build on a private database architecture, your data can never be accessed by anyone outside your organization.

All Drata customers receive a team of compliance experts (you're never alone on this), including a designated customer success manager, and Drata's team of former auditors has conducted more than 500 audits. Your Drata team will keep you on track to ensure there are no surprises and no barriers during your audit. Plus, Drata's pre-audit calls will prepare you for when those audits begin. It's nice to talk to an actual auditor about things you should be thinking about ahead of time. Oh, and you'll love Drata's audit hub. It really makes all of these audits much more efficient. Faster. You save hours of back-and-forth communication. You'll never misplace crucial evidence. It's all in the hub. You'll share documentation instantly. Auditors love it. You'll love it.

All the interactions and data gathering can occur in Drata between you and your auditor, so you won't have to switch between different tools or correspondence strategies. With Drata's risk management solution, you can manage end-to-end risk assessment and treatment workflows. You can flag risks, you can score them, and then you get to decide whether to mitigate, accept, transfer or avoid. Drata maps appropriate controls to risks, simplifying risk management and automating the process. And Drata's trust center provides real-time transparency into security and compliance postures, which improves sales security reviews and your relationships with both customers and partners, because they know you're doing it right. Say goodbye to manual evidence collection. Say hello to automated compliance by visiting drata.com/twit. Drata: bringing automation to compliance at Drata speed. That's drata.com/twit. We thank you so much for supporting Security Now. And now, the moment you've all been waiting for. This is a good one. I did peek at the picture of the week.

0:08:12 - Steve Gibson

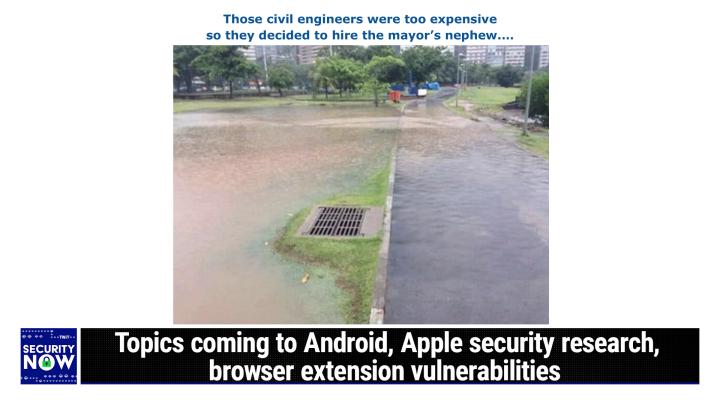

So for those who haven't seen it, I am tweeting the pictures to Twitter every week now, so those 64,000-some followers may have seen it. We've got a picture of what looks like a large central park somewhere. On the left side is a large, wide paved road, running from the foreground well into the distance. To the right side is the park, the green grass park, where you can imagine couples and their newborns, you know, picnicking and frolicking. Well, I presume this is not a busted water main, maybe it's just been a long rain, but this is all flooded, right? So the road is completely flooded, the water is up over the curb, and it's poured into the green grassy area. So this is all flooded, except for the center. In the center foreground is the drain,

which is an island of green sitting above the water level. The one dry spot in the entire picture is the drain, into which no water is flowing because, as you said, it's an island. And so I gave this picture the caption: Well, those civil engineers were too expensive, so they decided to hire the mayor's nephew.

0:09:51 - Leo Laporte

"I can do it. Yeah, that's not a problem. This is dumb. It's just a park, I'll just put a hole here. Simple." Yeah, oh yeah. You get what you pay for.

0:10:01 - Steve Gibson

Wow. So anyway, another great picture, thanks to our great listeners who provided this. And, you know, Leo, I was thinking about your reaction during our podcast last week to this problem of bogus mass storage drives and the need for a quick, non-destructive test for those new and existing drives.

0:10:27 - Leo Laporte

When we left the show last week, you said, well, yeah, it'll be in SpinRite, the next SpinRite, right? And I thought, well, that's reasonable. But I said maybe you could write something. Talk about a quick reaction. I saw your tweet and it was like, wow.

0:10:42 - Steve Gibson

Well, in fact, you were on MacBreak Weekly, I mean, oh no, you were on This Week in Google. I happened to have it on in the background while I was writing code for this, and so I tweeted, you know, that I had decided to briefly pause work on SpinRite 6.1, because this just seemed like too big a need, and something that I had all the pieces in place to do. So in the show notes I've got four actual screenshots made by what I'm calling ValiDrive, with a tip of the hat to Paul Holder, who came up with the name. Excellent name. Yep. And sure enough, I mean, what we're learning... I released it to my testing gang two days ago, on Sunday, and they immediately jumped on it.

Remember the drive I told the story about? It's in the lower right. That's the one that was a 256-megabyte drive which SpinRite rejected, and that's how we stumbled on this whole problem. SpinRite was checking the last, the very end of the drive, just to make sure that its own drivers were working correctly with this drive, and it was being rejected. And the guy, milk you, has, you know, God knows how many USB drives, but this was the only one that was having a problem. So we drilled into the problem and discovered that, and my belief is this is because it's so old and it's only a two-to-one ratio between the real and the claimed size, it actually has exactly 128 meg rather than 256 meg. And yes, I'm saying megabytes, not gigabytes. That's how old this drive is.

So I don't think there was any cheating or any fakery involved in only cutting the drive in half. Typically, you see, like, two-terabyte drives that only have 32 gig, because that's enough to hold the file allocation table at the front of the drive, to make the drive look valid when in fact it isn't. You know, it'll store only 32 gig, and if you try to use more than that, the drive says, yeah, I'm storing it, no problem. Well, until you try to read it back. So, anyway, the little piece of freeware is working. It's being tested. I'll finish it up during this week, and I'll have an announcement, I'm sure, while you're away, Leo.
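Steve's description covers a drive that silently discards writes beyond its real capacity. Some counterfeits instead wrap those writes around onto lower sectors; that second behavior is an assumption added here for contrast. The minimal Python sketch below, which is not GRC's code, models both and shows why every tested location needs its own unique data. `FakeDrive`, `signature`, `spot_check`, the 512-byte sector and the evenly spaced sample positions are all hypothetical choices for illustration, and as written the check overwrites the sampled sectors.

```python
import hashlib

SECTOR = 512  # bytes per sector (assumed for this sketch)


class FakeDrive:
    """Hypothetical counterfeit drive: reports `claimed` sectors but really
    has only `real` sectors. With wrap=False, writes past the real capacity
    are acknowledged and silently dropped; with wrap=True they alias onto
    lower sectors instead."""

    def __init__(self, claimed, real, wrap=False):
        self.claimed, self.real, self.wrap = claimed, real, wrap
        self.cells = {}

    def write(self, lba, data):
        if lba < self.real or self.wrap:
            self.cells[lba % self.real] = data
        # else: pretend success and throw the data away

    def read(self, lba):
        if lba < self.real or self.wrap:
            return self.cells.get(lba % self.real, bytes(SECTOR))
        return bytes(SECTOR)  # nothing was ever stored there


def signature(lba, nonce):
    """512 bytes unique to this location and this test run."""
    digest = hashlib.sha256(nonce + lba.to_bytes(8, "little")).digest()
    return digest * (SECTOR // len(digest))


def spot_check(drive, samples, nonce=b"run-1"):
    """Write a unique signature at evenly spaced locations across the claimed
    size, then read them all back. Returns {lba: passed}. Destructive."""
    step = drive.claimed // samples
    lbas = [i * step for i in range(samples)]
    for lba in lbas:
        drive.write(lba, signature(lba, nonce))
    # Verify only after every write, so a later write that landed on an
    # earlier location (aliasing) is caught instead of hidden.
    return {lba: drive.read(lba) == signature(lba, nonce) for lba in lbas}


claimed = 256 * 1024 * 1024 // SECTOR  # a "256 MB" drive
real = claimed // 2                    # only 128 MB actually present
for wrap in (False, True):
    results = spot_check(FakeDrive(claimed, real, wrap), samples=16)
    print(wrap, sum(results.values()), "of", len(results), "locations passed")
# False 8 of 16 locations passed
# True 8 of 16 locations passed
```

Both fakes pass exactly the 8 of 16 samples that fall inside the real 128 MB, which is why a modest number of well-spread spot checks, rather than writing every sector, can be enough to expose the shortfall.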

0:13:20 - Leo Laporte

I'll do it. I kind of knew that you would do this, because I could see you already thinking about what you would have to do to make it work. "Well, let's see. We'd have to write to every sector. Oh, we've got to worry about maybe being fooled." You were already working on it before the show even ended. I knew you were going to do this. I'm so glad you did. Now, when do you think you'll be able to release it?

0:13:45 - Steve Gibson

A couple of days from now.

0:13:47 - Leo Laporte

Oh, quick. This will be hugely valuable to people.

0:13:50 - Steve Gibson

Yeah, actually, some of the people who have been testing it said that they think it's going to be GRC's number one most downloaded freeware. Now, to be fair, though, the DNS Benchmark has more than eight and a half million downloads. Wow. With 2,000 new downloads every single day. So it's going to be tough for little ValiDrive to catch up to the DNS Benchmark, but anyway, I'm glad I did it. I'll be back to SpinRite by the end of the week, and we'll have a very useful piece of freeware that every Windows person will be able to play with.

And I did tweet these screenshots, and there were some reactions from people saying, hey, what if you're colorblind?

Because I'm using color to show valid storage, read errors, write errors and missing storage. I will have a monochrome option that will put differently shaped black-and-white characters in the cells in order to accommodate people who don't have full normal color discrimination ability. So, anyway, it is on its way, and I'm glad I did it, and we'll have a new gizmo here shortly. And yes, Leo, your intuition was correct.

0:15:24 - Leo Laporte

I mean, this is right up your alley. I saw on GitHub there are a few people who've written C++ programs to do this, but who are they? And it's command line and blah, blah, blah, and they're destructive.

0:15:37 - Steve Gibson

They wipe out your data. Oh, good Lord. You're able to run this on an existing drive.

0:15:42 - Leo Laporte

That's not good. So you read the data, save it, then write to it, and then write it back?

0:15:50 - Steve Gibson

You are good. And then I read it again, and then I rewrite it and reread it to make sure that it got written back correctly.
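The save, write, verify, restore and re-verify cycle just described can be sketched in Python as follows. This is a hedged illustration against an in-memory stand-in, not how ValiDrive actually talks to hardware. `MemDrive`, `IgnoresWrites` and `nondestructive_check` are hypothetical names, and a real tool would also have to cope with a failed restore or a drive unplugged partway through, which this sketch does not.

```python
import os

SECTOR = 512  # bytes per sector (assumed for this sketch)


class MemDrive:
    """Hypothetical in-memory stand-in for a sector-addressed drive,
    pre-filled with random bytes that play the part of the user's data."""

    def __init__(self, sectors):
        self.data = [os.urandom(SECTOR) for _ in range(sectors)]

    def read(self, lba):
        return self.data[lba]

    def write(self, lba, buf):
        self.data[lba] = bytes(buf)


class IgnoresWrites(MemDrive):
    """A faulty drive that acknowledges writes but never stores them."""

    def write(self, lba, buf):
        pass


def nondestructive_check(drive, lba):
    original = drive.read(lba)                   # 1. save the user's sector
    pattern = bytes(b ^ 0xFF for b in original)  # 2. differs in every byte
    drive.write(lba, pattern)
    stored = drive.read(lba) == pattern          # 3. was the pattern really kept?
    drive.write(lba, original)                   # 4. put the user's data back
    restored = drive.read(lba) == original       # 5. confirm the restore took
    return stored and restored


good = MemDrive(8)
before = list(good.data)
print(all(nondestructive_check(good, n) for n in range(8)), good.data == before)  # True True
print(nondestructive_check(IgnoresWrites(8), 0))  # False
```

Inverting every byte of the saved sector guarantees the test pattern can never match what was already there, so a drive that merely pretends to write is caught at step 3.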

0:15:56 - Leo Laporte

And I think probably the reason people don't do that is it must take some time to do that on a two terabyte drive or more.

0:16:02 - Steve Gibson

Yes, and in fact somebody commented that they had one of those destructive things, and he said, you know, yours is taking maybe, I think it was, twice as long. Well, twice as long is a few minutes, actually. Many times it completes in 15 seconds. Wow. And in fact, what many people are...

Oh, yeah, it's fast. It's fast, it's only a few seconds. And many people are noting that what they're seeing, even when they get a field of green, is the speed and the hesitation. When I first started using it myself on some of mine, I thought, wow, it was like it was freezing. Well, what is it doing when it's freezing? So there are also little read and write lights that flicker back and forth while it's running, as it's counting down the number of tests remaining, so you can really see what's going on. And so, even if your drives are good, it helps you spot problems, because if you put in a high-quality drive, it just zips along. Put in a cheesy Walmart drive and it's like, God, what is wrong?

0:17:15 - Leo Laporte

Well, that tells you something too. Wow, yeah. Yeah, that's going to be a goodie. Okay, so thank you, Steve, from all of us. Thank you very much.

0:17:25 - Steve Gibson

Well, it's going to be fun. So, Google's Topics is coming to Android apps near you. And maybe this is a failure of imagination, but it hadn't occurred to me that Google's Topics system, which we've talked about several times now, might not only apply to websites. In retrospect, it's so obvious that Google would also be assigning topics to Android apps, and that advertisers, and apparently other apps, would also be able to query the device's local Topics API to obtain a few breadcrumbs of information about, you know, the person who's using the app. One of our listeners was kind enough to share a screen capture of what had just popped up on his Android phone under the headline "New ads privacy features now available," and the screen reads: Android now offers new privacy features that give you more choice over the ads you see. This is very much like the text that the Chrome users got. Android notes topics of interest based on the apps you've used recently. Also, apps you use can determine what you like. Later, apps can ask for this information to show you personalized ads. You can choose which topics and apps are used to show you ads. To measure the performance of an ad, limited types of data are shared between apps.

Okay, so we know I'm a fan of Google Topics. I understand it, and I've tried to carefully explain the way it works, which is admittedly somewhat convoluted and open to misunderstanding, because Google is trying to slice this thing very close. Google wants access to some limited information about the users of their Chrome web browser, and now their Android phones, in an environment where users have become skittish about privacy and tracking. And we all recognize the trade-offs, right? If websites insist that they receive some revenue, more revenue when advertisers have some information about their visitors, and advertisers are determined to obtain that information by any means possible, then Topics is the cleanest trade-off compromise I can imagine. If, eventually, once legislation catches up, Topics replaces all other forms of tracking and information gathering about me, then I'm all for Topics. That's a trade-off that makes sense. So I suppose I shouldn't feel any differently about Topics being extended outside of the browser. If a user wants to use advertising-supported smartphone apps, then I suppose the same logic applies there, right? And I should explain that I personally cannot and do not tolerate in-app advertising. If an app is something I want to use, please allow me to send a few dollars your way to turn off its ads. I will do that happily. Otherwise, I don't care how great it is. Nothing is that great. I will delete any app whose advertisements I am unable to silence. But that's just me. What we see all around us, pervasively, is that advertising works. And, Leo, as you noted last week, even if I refuse to click on some advertisement, the brand being advertised has been planted in my brain. That's out of my control, and the fact is we live in an advertisement-supported world.

This podcast is underwritten by a few high-quality enterprises that are willing to pay to make our listeners aware of their presence and offerings. That's all they ask. So Google is extending Topics beyond Chrome and into the underlying Android platform. That really only makes sense in retrospect, as I said, and I'm certain that Google will also allow Topics, as they do in Chrome, to be completely disabled if that's what its user chooses. So again, props to Google for that. I am 100% certain that before offering that full disablement option, they thoroughly tested, and not just once, what I often call the tyranny of the default. So they absolutely know that nearly 100% of Android phone users will never know about, nor bother to disable, their Android device's local Topics feedback. And they also know that by giving their more knowledgeable Android users, like every listener of this podcast, the option to disable Topics, they're retaining and comforting those users who would be upset by this local, albeit extremely mild, smartphone surveillance. And if ads in apps are inevitable, if they're supporting the apps that you're using, then you might as well get as relevant an ad as possible, if you've got to have one anyway. So, anyway, I thought that was interesting. It just hadn't occurred to me that Topics would be something that Android at large did, beyond just Chrome. But that only makes sense.
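For readers who want the mechanics, here is a simplified Python model of how a Topics-style API can hand a caller only a few coarse breadcrumbs. It follows my understanding of Google's public explainer for the Chrome version: the top five topics are kept per epoch, a caller gets at most one topic per epoch for the last three epochs, and there is a 5% chance that a returned topic is random. The Android implementation may differ, and the real API also only returns topics the caller itself observed, which is omitted here. The tiny taxonomy, the function names and the per-device secret are assumptions for illustration.

```python
import random
from collections import Counter

# Tiny stand-in for the real topic taxonomy (assumption for illustration).
TAXONOMY = ["Fitness", "Travel", "Cooking", "News", "Gaming", "Finance", "Pets"]
TOP_N = 5          # topics retained per epoch
RANDOM_P = 0.05    # chance a returned topic is random noise instead
EPOCHS_SHARED = 3  # how many recent epochs a caller can learn about


def top_topics(epoch_usage):
    """epoch_usage: one topic label per app or site use during that epoch."""
    return [topic for topic, _ in Counter(epoch_usage).most_common(TOP_N)]


def topics_for_caller(epochs, caller, device_secret="per-device-secret"):
    """At most one topic per recent epoch. Seeding by (secret, caller, epoch)
    keeps the answer stable for one caller within an epoch, while different
    callers may be handed different topics."""
    answer = set()
    for index in range(max(0, len(epochs) - EPOCHS_SHARED), len(epochs)):
        rng = random.Random(f"{device_secret}:{caller}:{index}")
        if rng.random() < RANDOM_P:
            answer.add(rng.choice(TAXONOMY))  # plausible-deniability noise
        else:
            top = top_topics(epochs[index])
            if top:
                answer.add(rng.choice(top))
    return sorted(answer)


history = [
    ["Fitness", "Fitness", "News"],
    ["Travel", "Cooking", "Travel"],
    ["Gaming"],
    ["Finance", "Pets", "Finance"],
]
print(topics_for_caller(history, "com.example.weather"))
```

The design point is the one Steve describes: an app learns at most three coarse interests, some of them possibly noise, rather than a browsing or app-usage history.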

I've often bemoaned the problem researchers have with helping Apple find their own platform's security shortcomings, because the platform is so thoroughly and utterly locked down. But last week I was reminded that for the past four years, since 2019, this has not been strictly true. Last Wednesday's blog post from Apple Security Research was titled "2024 Apple Security Research Device Program now accepting applications." 2024, that's next year. And this window is one month, so jump if you're interested. We talked about this before, but I've been overlooking this truly marvelous exception to Apple's no-one-gets-in stance.

In their overview of this, Apple Security Research explains that iPhone is the world's most secure consumer mobile device, which I would agree with completely, which can make it challenging for even skilled security researchers to get started, or actually to get anywhere. They said: we created the Apple Security Research Device Program to help new and experienced researchers accelerate their work with iOS. Now accepting applications through October 31st, 2023. Apply below. And then, under "How it works," they remind us.

They said the Security Research Device, SRD for short, is a specially fused iPhone that allows you to perform iOS security research without having to bypass its security features. Shell access is available, and you can run any tools, choose your own entitlements and even customize the kernel. Using the SRD allows you to confidently report all your findings to Apple without the risk of losing access to the inner layers of iOS security. I guess that means that the phone won't suddenly lock you out. Anyway, they said, plus any vulnerabilities that you discover with the SRD are automatically considered for the Apple Security Bounty, which, you know, has ranged up to $100,000 in some cases. Then elsewhere they elaborate a bit. They said iPhone is the most secure consumer mobile device on the market, and the depth and breadth of sophisticated protections that defend users can make it very challenging to get started with iPhone security research.

The central feature of SRDP, which is the program, is the Security Research Device, right, the SRD, a specially built hardware variant of iPhone 14 Pro that's designed exclusively for security research, with tooling and options that allow researchers to configure or disable many advanced security protections of iOS that cannot be disabled on normal iPhone hardware in the hands of users. Among other features, researchers can use a Security Research Device to install and boot custom kernels; run arbitrary code with any entitlements, including as platform and as root outside the sandbox; set non-volatile RAM variables; and install and boot custom firmware for the Secure Page Table Monitor and Trusted Execution Monitor, which are new in iOS 17. And, they said, even when reported vulnerabilities are patched, the SRD makes it possible to continue security research on an updated device. All SRD participants are encouraged to ask questions and exchange detailed feedback with Apple security engineers. And in another place, explaining eligibility for the program and some constraints, they said the SRD is intended for use in a controlled setting for security research only. If your application is approved (that is, your application to join the program), Apple will provide you an SRD as a 12-month renewable loan. During this time, the device remains the property of Apple. So, you know, you don't have to buy it, they're saying here, but it's still ours.

The SRD is not meant for personal use or daily carry and must remain on the premises of program participants at all times. Access to and use of the SRD must be limited to people authorized by Apple. If you use the SRD to find, test, validate, verify or confirm a vulnerability, you must promptly report it to us and if the bug is in third party code, to the appropriate third party. Our ultimate goal is to protect users. So if you find a vulnerability without using the SRD for any aspect of your work, we'd still like to receive your report. We review all research that's submitted to us and consider all eligible reports for rewards through the Apple security bounty.

Participation in the Security Research Device Program is subject to review of your application. To be eligible for the Security Research Device Program, you must have a proven track record of success in finding security issues on Apple platforms or other modern operating systems and platforms. So, you know, have some pedigree. Be based in an eligible country or region, and there's an asterisk on that we'll get to in a minute. Be the legal age of majority in the jurisdiction in which you reside (they said that's 18 years of age in many countries), and not be employed by Apple currently or in the past 12 months.

To enroll as a company, university or other type of organization, you must be authorized to act on your organization's behalf. Additional users must be approved by Apple in advance and will need to individually accept the program terms. Now, where they said be based in an eligible country or region, asterisk, I looked at the bottom of the page, where the asterisk was referring, and there was a very long list of qualifying countries. Notably absent, and they were alphabetized so it was easy to spot, were China, Russia and North Korea. Yeah, that's a good.

0:31:03 - Leo Laporte

Greek to be in.

0:31:04 - Steve Gibson

So sorry there, Vladimir, you and your minions will not be authorized. You may have some underhanded, surreptitious way of getting your hands on a phone. You know, Leo, I was thinking I wouldn't be at all surprised if these things are also geo-locked. That is, I'll bet there's some tethering on this thing. So yeah, Apple's had to do this stuff very well, believe me.

0:31:34 - Leo Laporte

Yeah, yeah, this is good. It's great that they're doing this. I presume one of the reasons is that this is always the complaint of researchers: they couldn't get into these phones to know whether they were compromised or not. I mean, that complaint continues, because you still can't get into a normal phone to know if it's compromised or not. But at least they can research zero-days and so forth.

0:31:57 - Steve Gibson

Yeah, I'm sure it reflects many prior years of researchers complaining about exactly that, right? Like, well, we'd like to help Apple. There's all this cool tech in there, and, oh, by the way, it does seem to be having lots of problems with zero-days. Maybe we could find some of those, but we can't get in. So anyway, I wanted to correct the record on my recent statements that it just wasn't possible to conduct meaningful research into iPhone security. Bravo, Apple. Once again, I think they're doing the right thing.

0:32:35 - Leo Laporte

Has there been any reaction from security researchers on this? Like, is this what they want? Is it enough? Oh, yeah, yeah, yeah, yeah, yeah.

0:32:42 - Steve Gibson

It is, you know. I mean, the problem is Apple said that there's a limited number of these that they want to have floating around. They're not going to be able to honor every request. But in some of the text I noted that even universities, like security education programs, could qualify, so students in universities could have access to these special iPhones. I'm sure Matthew Green is applying right now, you know.

0:33:17 - Leo Laporte

That's great. Yeah, and they do really good work because they're not constrained by commercial necessity, so they can spend months trying to, you know, break into this stuff. It's almost always out of universities that the toughest hacks come, like all the research that we talk about.

0:33:33 - Steve Gibson

As a matter of fact, we've got one right here. Oh yeah, this is on the need to really, in all bold caps, TRUST every web browser extension we install. This sobering research has recently come from researchers at, what do you know, the University of Wisconsin-Madison. As part of their exploration into what a malicious web extension can and might do even today, when operating under the more restrictive Manifest V3 protocol that Chrome introduced, which has since been adopted by most browsers, these researchers discovered that their proof-of-concept extension is able to steal plain text passwords from a website's HTML source, thanks to extensions' unrestricted access to the DOM tree. And wait till you hear which websites were found to be vulnerable. The DOM is the web page's Document Object Model, which is organized logically as a tree structure. It describes the document, but more than that, I mean, everything that you see on the page is in this Document Object Model. It is the web page, containing...

0:34:58 - Leo Laporte

And what you don't see on the page, more importantly, right? Hidden fields.

0:35:03 - Steve Gibson

Yes, so that's what the browser uses to drive its renderer and all of the scripting that it also runs. So they demonstrated that the coarse-grained permission model under which we're all now operating, which also covers browsers' text input fields, violates the principle of least privilege. They found that numerous websites with millions of visitors (and I'm not going to stomp on the news of which websites; we'll get there in a minute, but boy), including some Google and Cloudflare portals, store passwords in plain text within the HTML source of their web pages, just sloppiness, thus allowing for their ready retrieval by extensions. So their research paper is titled "Exposing and Addressing Security Vulnerabilities in Browser Text Input Fields." This is what they explained in their paper's abstract. They said: In this work, we perform a comprehensive analysis of the security of input text fields in web browsers. We find that browsers' coarse-grained permission model violates two security design principles: least privilege and complete mediation. We further uncover two vulnerabilities in input fields, including the alarming discovery of passwords in plain text within the HTML source code of web pages. To demonstrate the real-world impact of these vulnerabilities, we design a proof-of-concept extension leveraging techniques from static and dynamic code injection attacks to bypass the web store review process. In other words, they snuck it in. Our measurements and case studies reveal that these vulnerabilities are prevalent across various websites with sensitive user information, such as passwords (but not restricted to them; we're talking Social Security numbers, credit card numbers, you name it), exposed in the HTML source code of even high-traffic sites like Google and Cloudflare. We find that a significant percentage, 12.5%, of extensions possess the necessary permissions to exploit these vulnerabilities, and we identify 190 extensions that directly access password fields.
Finally, we propose two countermeasures to address these risks: a bolt-on JavaScript package for immediate adoption by website developers, allowing them to protect their sensitive input fields, and a browser-level solution that alerts users when an extension accesses sensitive input fields. Our research highlights the urgent need for improved security measures to protect sensitive user information online.
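The abstract names complete mediation, the principle that every access to a protected resource passes through a check. Here is a toy Python model of what mediation plus alerting could look like for a single sensitive field, loosely inspired by the browser-level alert the researchers propose. It is not their code and not a browser API. `SensitiveInput` and the caller names are hypothetical, and a real browser would have to enforce this inside its own engine rather than trusting a caller-supplied name as this sketch does.

```python
class SensitiveInput:
    """Hypothetical reference monitor for one sensitive input field.
    The secret sits in an underscore attribute (private by convention only;
    this is a toy), and the intended path to it is read(), which checks
    every access instead of exposing a plain attribute to any script."""

    def __init__(self, value, allowed=("page",)):
        self._value = value
        self._allowed = set(allowed)
        self.alerts = []

    def read(self, caller):
        if caller not in self._allowed:
            # The paper proposes warning the user; this toy just records it.
            self.alerts.append(f"extension {caller!r} read a sensitive field")
        return self._value


field = SensitiveInput("hunter2")
field.read("page")                  # the site's own login script: no alert
field.read("gpt-helper-extension")  # an extension: the access is recorded
print(field.alerts)  # ["extension 'gpt-helper-extension' read a sensitive field"]
```

Today's model, as the researchers describe it, has no such choke point: once an extension's content script runs on the page, reading the field is just a property access.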

Okay now. The Manifest V3 protocol prohibits extensions from fetching code hosted remotely that could help evade detection and prevents the use of eval statements that lead to arbitrary code execution. However, as the researchers explained, manifest V3 does not introduce a security boundary between extensions and webpages, so the problem with content scripts remains. To test Google's Web Store review process, they created a Chrome extension capable of password-grabbing attacks and then uploaded it to the extension's repository. Their extension posed as a GPT-based assistant that can capture the HTML source code when the user attempts to log in on a page by means of a regex.

It could also abuse CSS selectors (you know, the web page's CSS) to select target input fields and extract user inputs using the .value property, and perform element substitution to replace JavaScript-based obfuscated fields with unsafe password fields, all of which they explain in their research doc. The extension does not contain obviously malicious code, so it evades static detection, and it does not fetch code from external sources, which, of course, would be dynamic injection. So it is fully Manifest V3 compliant. As a result, the extension passed review and was accepted on Chrome's Web Store, so the security checks failed to catch the potential threat, which, in this case, was very real. Now, of course, the researchers followed strict ethical standards to ensure no actual data was collected or misused. They deactivated the data-receiving server component while keeping only the element-targeting server active. Also, the extension was set to unpublished at all times so that it would not gather many downloads, and it was promptly removed from the store following its approval. That is, as soon as they saw that it got in and were able to verify that, you know, this thing was able to slip by.

Okay, subsequent measurements showed that, of the top 10,000 websites, roughly 1,100, about 11%, are storing user passwords in plain-text form within the HTML document object model, and extension scripts have access to the document object model, and thus access to plain-text passwords. So this is a fundamentally insecure design. The designers of those 1,100 websites (that is, the 1,100 out of the top 10,000 that these guys looked at) either wrongly assume that the contents of their page's document object model are inaccessible, which is not true, or they never stopped to consider it. In addition, another 7,300 websites from that same set of the top 10,000 were found vulnerable to DOM API access and direct extraction of the user's input values. And several of the 190 extensions that directly access password fields, including widely used ad blockers and shopping apps, boast millions of installations. So this thing is widespread. Okay, now, is everybody sitting down? Notable websites lacked the required protection and are thus vulnerable right now. Those include Gmail.com, whoops, which has...

0:42:54 - Leo Laporte

Nobody uses that.

0:42:55 - Steve Gibson

Thank goodness. Which has plain-text passwords stored in HTML source code. What? Cloudflare? Cloudflare.com: plain-text passwords in HTML source code. Passwords!

Facebook? What? The user's passwords are available in plain text in the HTML source. Facebook.com: user inputs can be extracted via the DOM API. Citibank.com: user inputs can be extracted via the DOM API. IRS.gov: Social Security numbers are visible in plain-text form in the web page source code. CapitalOne.com: Social Security numbers are visible in plain-text form in the web page source code. USENIX.org: same thing, Social Security numbers. Amazon.com: credit card details, including the security code and ZIP code, are visible in plain-text form in the page source code, available to all extensions.
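A minimal sketch of what "plain-text passwords in the HTML source" means in practice, written in Python rather than as a real extension content script: it parses a page's markup and reports any password input whose value attribute carries the secret. The sample page and the names PasswordFieldScanner and find_exposed_passwords are hypothetical. A real extension would read the live DOM, where, as the researchers note, script can also read a field's current value even while it's displayed obscured, so this static scan covers only the simplest case.

```python
from html.parser import HTMLParser


class PasswordFieldScanner(HTMLParser):
    """Collects (name, value) for every <input type="password"> that carries a value attribute."""

    def __init__(self):
        super().__init__()
        self.exposed = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value) pairs.
        # Self-closing <input ... /> tags also arrive here via the default handle_startendtag.
        if tag != "input":
            return
        fields = dict(attrs)
        field_type = (fields.get("type") or "").lower()
        if field_type == "password" and fields.get("value"):
            self.exposed.append((fields.get("name"), fields["value"]))


def find_exposed_passwords(html: str):
    scanner = PasswordFieldScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.exposed


# Hypothetical login page that echoes the submitted password back into the markup.
page = """
<form action="/login" method="post">
  <input type="text" name="user" value="alice">
  <input type="password" name="pass" value="hunter2">
</form>
"""

print(find_exposed_passwords(page))  # [('pass', 'hunter2')]
```

Any code that can see the page's markup, which includes every extension with content-script access to the site, gets the same answer.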

Yes, it is that bad. Holy cow. The version 3 manifest was a trade-off due to the way the industry's existing websites and popular extensions had been coded. Further limiting extension use would have broken too much existing code, so it wasn't done. When a Google spokesperson was asked about this, they confirmed that they're looking into the matter (you'd think), and pointed to Chrome's extension security FAQ, which does not consider access to password fields to be a security problem, quote, "as long as the relevant permissions are properly obtained," unquote. Right. Let's hope this gets fixed soon.

0:44:58 - Leo Laporte

I can understand, though, why you need access to the DOM if you're an extension. I mean, that's kind of what an extension does. And gorhill has complained about Manifest V3 making uBlock Origin impossible to do, because even the little restrictions that it imposes make ad blocking hard to do.

0:45:17 - Steve Gibson

So I understand. Boy, it is a catch-22. Yeah, yes.

0:45:23 - Leo Laporte

The web was really not designed to be secure. I mean, that's fundamental.

0:45:29 - Steve Gibson

And we've tried to turn web pages into web apps as if they're the same, and, you know, we've stretched our browsers mightily in order to do that. In the takeaways, section 5.3 of their paper, they write: This is a systemic issue. They said: Our measurement studies on the top 10,000 websites show that we could extract passwords from all the login pages with passwords. The widespread presence of these vulnerabilities indicates a systemic issue in the design and implementation of password fields. And they talk specifically about password managers. Now think about that. We take it for granted, right? But any and all password managers must, by design, be a third-party extension which has direct access to any website's password fields. Under Role of Password Managers, they said:

The widespread use of password managers may partially explain the prevalence of vulnerabilities when password values are obscured but can be accessed via JavaScript. These tools enhance the user's experience by automating the process of entering passwords, storing the encrypted passwords and later autofilling these fields when required. This functionality reduces the cognitive load on users and encourages the use of complex, unique passwords for each site, thereby enhancing overall security. However, for password managers to function effectively, they require access to password fields via JavaScript. This necessity creates an inherent security vulnerability. While the password fields may appear obscured to users, any JavaScript code running on the page, including potentially malicious scripts, can access these fields and read their values. This interaction between password managers and these vulnerabilities presents a tradeoff between usability and security. While password managers improve usability and promote better password practices, their operation necessitates JavaScript access to password fields. That inherently creates a security risk. Essentially, you know, these guys just demonstrated that you don't even have to be a password manager to obtain password-manager-level access, and they were able to successfully sneak their universal password extraction extension code past Google's incoming filters without any trouble. So their 26-page paper is marvelously clear, and none of this stuff is fancy or complex. Its content would be entirely accessible to anyone familiar with modern web page construction and operation. Any of our listeners who are responsible for the design of their organization's secret-accepting web pages might well benefit from making sure their own sites are protected.

I've included the link to the research PDF for anyone who's interested and, to improve its availability, it's also this week's GRC shortcut, so you can find it at grc.sc/938. That's grc.sc/938, and the PDF link is also in the show notes. So this is important. Again, kind of in retrospect, this is like, well, duh. Of course password managers need to be able to do this. It turns out it's not just password managers that can. They found 190 existing extensions that had this capability, and I don't think there are 190 password managers out there, so a lot of apps are doing it. And they created one that could do it too and slipped it past Google. Wow.

0:49:53 - Leo Laporte

Yeah, amazing.

0:49:56 - Steve Gibson

So, Leo, yes, we're going to see what thoughts and observations our listeners have to contribute after taking our next...

0:50:02 - Leo Laporte

We enjoy that part of the show, the feedback section. Good stuff, good stuff. Yeah, yeah. But before we do that, can I mention something called Panoptica? You know that security is job one here at Security Now, and in this rapidly evolving landscape of cloud security, you will be glad to know about Cisco Panoptica. It's at the forefront, revolutionizing the way you manage your microservices and your workloads with a unified, simplified approach to managing the security of cloud-native applications over their entire life cycle.

Panoptica simplifies cloud-native security by reducing tools, vendors and complexity. By meticulously evaluating your app for security threats and vulnerabilities, Panoptica ensures your applications remain secure and resilient. Panoptica detects security vulnerabilities on the go, in development, testing and production environments, including any exploits in open-source software. It also protects against known vulnerabilities in container images and against configuration drift, all while providing runtime policy-based remediation. Cisco's comprehensive cloud application security solution, Panoptica, ensures seamless scalability across clusters and multi-cloud environments. It offers a unified view through a simplified dashboard experience, reducing operational complexity and fostering collaboration among developers, SREs and SecOps teams. Take charge of your cloud security and address security issues across your application stack faster and with precision. Embrace Panoptica as your trusted partner in securing APIs, serverless functions, containers and Kubernetes environments, and you can transform the way you protect your valuable assets. Learn more about Panoptica at panoptica.app. That's panoptica.app. Let me thank Panoptica so much for supporting the work we're doing here with Mr. G.

0:52:10 - Steve Gibson

And I love the term "configuration drift." Yeah, you know, I wish I had coined that one.

0:52:17 - Leo Laporte

Yeah, that was a great term.

0:52:19 - Steve Gibson

Yeah. Well, you could see, you know...

0:52:21 - Leo Laporte

This is such a great idea: you let Panoptica just keep an eye on things as you import these libraries, as you build, to make sure you're not introducing problems. It's brilliant. It's just the way it should go. All right, let's close the loop.

0:52:36 - Steve Gibson

Yeah. Peter Gowdy tweeted. He said: Hi, Steve. I took note of your Global Privacy Control episode and just added the Privacy Badger extension to Vivaldi. There doesn't seem to be a solution for mobile that I could find, even in Firefox mobile. Is there a mobile Global Privacy Control solution that you know of? And I'm not surprised that support is still lagging since, as we know, change always comes much more slowly than we expect or hope or wish. But I'm pretty sure that once additional legislation appears (and we know that it exists in three states beginning with the letter C, and it is spreading, both here in the US and also in Europe), we can assume that the Global Privacy Control switch will become a universal feature of browsers. Again, it'll just take some time, but we'll get there. Long-standing friend of the show Alex Neihaus tweeted: Hi, regarding Microsoft's doesn't-care-about-the-STS issue. Remember, that's STS, Secure Time Seeding, which we've talked about several times: using time in the TLS handshakes in order to set the clocks, despite it being known for decades as being unreliable. And this is Alex saying:

I don't think they deliberately or maliciously misengineered the feature. I think they just didn't do the research. Most people think that Microsoft developers are first-rate, but management there has reduced costs, which has encouraged the use of offshore and less experienced engineers. Unlike us boomers, devs today rarely go as deep as you did to understand the issue. The engineer was probably simply impatient, saw the field in the hello message, and went for it. You're most likely correct that they don't want to admit they're wrong, because it raises the question I'm posing here about their engineering prowess. So it was most likely a combo of poor engineering and design, coupled with hubris today, that prevents them from recognizing the deeper issue.
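To illustrate why a clock set from TLS handshakes is fragile, here is a simplified sketch, not Microsoft's actual STS algorithm. TLS 1.2 (RFC 5246) defined the first 4 bytes of the 32-byte ServerHello random as gmt_unix_time, but many implementations fill those bytes randomly, and TLS 1.3 (RFC 8446) makes the whole field random. Reading those 4 bytes as a timestamp therefore yields a correct time only from servers that still follow the old layout. The function name claimed_time and the sample values are assumptions for illustration.

```python
import os
import struct
from datetime import datetime, timezone


def claimed_time(server_random: bytes) -> datetime:
    """Interpret the first 4 bytes of a 32-byte ServerHello random as TLS 1.2's
    gmt_unix_time field (big-endian seconds since the Unix epoch)."""
    if len(server_random) != 32:
        raise ValueError("a TLS random value is 32 bytes")
    (seconds,) = struct.unpack(">I", server_random[:4])
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


# A server following the old TLS 1.2 layout: current time plus 28 random bytes.
honest = struct.pack(">I", 1693872000) + os.urandom(28)
# A server that randomizes all 32 bytes, as TLS 1.3 and many TLS 1.2 stacks do.
randomized = os.urandom(32)

print(claimed_time(honest))      # 2023-09-05 00:00:00+00:00
print(claimed_time(randomized))  # an arbitrary date somewhere between 1970 and 2106
```

Any scheme that trusts this field has to tell these two kinds of servers apart, which is exactly the part that is easy to skip when one just "saw the field in the hello message and went for it."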

0:55:04 - Leo Laporte

I also want to point out that you know who Alex Neihaus is, right? Yeah. He was the first; he bought the first ads on this show, with Astaro, and we thank him so much because he put us on the map. Thanks to Alex.

0:55:21 - Steve Gibson

Well, you put us on the map, but Alex helped do it. And I don't disagree with anything Alex wrote.

Everyone, yes, everyone here knows how infinitely tolerant I am of mistakes. You know, they happen, and anyone can make them, and I'm sure one of these days I'll make a big one, and so I'll be glad that I've always been saying this. It's defensive, I understand. You know, I'm crossing my fingers; I'm as careful as I can be. But, you know, there are many...

Many adages begin with "If you're not making mistakes...", and typical endings for that are "then you're not trying hard enough" or "then you're not making decisions." But the most famous one appears to be "If you're not making mistakes, then you're not doing anything." The point of all of these is the clear acknowledgement that mistakes are a natural and unavoidable consequence of our interaction with the world. You do something, and the feedback from what you did, which was presumably not what you expected, informs you that a mistake was made somewhere. So, with the benefit of the new information, you correct the mistake.

My entire problem with Microsoft is that we see example after example, this being just the latest, where this feedback system appears to be completely absent, whether it's well-meaning security researchers informing Microsoft of serious problems they've found, or their high-end enterprise customers telling them for seven years, "Hey, my Windows Server clocks are getting messed up, and it's really screwing up everything." Microsoft no longer appears to care. And to Alex's point, though coming at this from a different angle, I think this all boils down to simple economics. Caring costs money, and Microsoft no longer needs to care because not caring costs them nothing. That's really the crux of it.

Today, there's no longer any sign of ethics for ethics' sake. That's long gone. It's simply about profit. We're all aware of the expression "Don't fix it if it's not broken." Microsoft has extended this to "Don't fix it even if it is broken," and what we get is a system that mostly works. It could be better, but I guess it's good enough. And again, I always want to add the caveat: I'm sitting in front of Windows, I love my Windows, and I don't ever want to have it taken away from me. So I want it to be as good as it can be, but darn it, it could be better.

0:58:11 - Leo Laporte

I'm coming over and I'm taking it away from you.

0:58:16 - Steve Gibson

No more. A listener, Michael, tweeted: Dear Steve, just listened to another awesome Security Now from you. I have a question about VirusTotal, if I'm not bugging you. What's the probability that it could have false positives? I'm asking specifically because of a program I've used since Windows 7 called Winaero Tweaker, which lets me customize Windows so that it's more usable and easier. It's not flagged by Windows Defender, nor by Malwarebytes. I guess what I'm asking is, in your opinion, is Winaero Tweaker okay to use, and is VirusTotal ever wrong? Thank you, Michael. Okay, so if we were to use majority voting, then I have never seen VirusTotal make a mistake. But if you require zero detections (out of the 66 different AV scanners that VirusTotal polls) in order to be comfortable, then that's actually somewhat rare.

When using any modern AV scanner, it's important to understand today's context. The original AV scanners operated by spotting specific code that had been previously identified as being part of a specific piece of known malware. This was quite effective and rarely generated false-positive alarms, because the AV was actually finding the malware that used some specific code. But then, of course, malware evolved to avoid direct code recognition by scrambling itself, encrypting itself, compressing itself, and even becoming polymorphic. Remember the polymorphic viruses, which rearranged themselves in order to appear as unique as possible from one instance to another. Then later, as a consequence of this back-and-forth cat-and-mouse game that malware was playing with AV scanners, the scanners began looking at the operating system functions that a program was using, and judging whether some of the things a program does, like the OS API calls it requests, fall outside of some arbitrary norm. For example, consider a DNS lookup. There's nothing malicious about a program doing a DNS lookup, but most programs that want to connect to a remote resource just issue an HTTPS request, and the operating system performs the DNS lookup itself to obtain the connection's IP address. So in this example, any program that wanders away from an arbitrary, tightly defined norm might trigger a false-positive alert, not because it did anything wrong, but simply because it was found to be doing things that someone judged as unusual.
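A toy sketch, in Python, of the original byte-signature scanning described here and of why a simple encoding step defeated it. The signature bytes, the detection name, and the XOR keys are all made up; real engines use far larger signature databases plus unpacking and emulation stages, and real self-encrypting malware still has to carry a small decoder, which scanners in turn learned to look for. Treat this purely as an illustration of the idea.

```python
# Hypothetical signature database: detection name -> byte pattern lifted from a known sample.
KNOWN_SIGNATURES = {
    "Demo.Trojan.A": bytes.fromhex("deadbeef0badf00d"),
}


def scan(blob: bytes) -> list:
    """Classic signature scanning: report every known byte pattern present in the blob."""
    return [name for name, sig in KNOWN_SIGNATURES.items() if sig in blob]


def xor_encode(blob: bytes, key: int) -> bytes:
    """The simplest self-'encryption' trick: XOR every byte with a one-byte key."""
    return bytes(b ^ key for b in blob)


payload = b"\x90\x90" + bytes.fromhex("deadbeef0badf00d") + b"\xc3"
print(scan(payload))                    # ['Demo.Trojan.A']
print(scan(xor_encode(payload, 0x5A)))  # [] (same code, different bytes)

# A polymorphic variant might pick a new key for every copy it makes.
print(all(scan(xor_encode(payload, k)) == [] for k in range(1, 256)))  # True
```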

The final outcome of decades of this back-and-forth contest between AV and malware is the increasing use of a specific program's reputation. The way things have turned out, reputation is the ultimate source of trust. So in that sense, things are the same in cyberspace as they are in the real world: we trust people who have earned a reputation. We have the ability to easily obtain unspoofable cryptographic signatures, that is, hashes, of specific code. This means that, for all intents and purposes, it's impossible to change the code in any deliberate way without also changing the code's resulting cryptographic signature.

So, without actually knowing anything about a program, the persistent connectivity provided by the internet allows a program's use and its signature to be tracked over time. If a program is out and about for a few months without anyone complaining or it causing anyone any trouble, then that code, as identified by its unique signature, will have established a good reputation and will therefore become trusted. The trouble is that any newly created code will have an unknown signature that won't have had any chance to earn a reputation, and as a creator of new utilities, this is a problem I run into all the time. Two days ago, last Sunday, the first people to download the completely harmless and freshly assembled ValiDrive Windows application had Windows 10 immediately quarantining the download, complaining that it was not commonly downloaded. Well, yeah, no kidding; it's brand new. It had never been downloaded before.
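A minimal sketch of reputation keyed on a cryptographic hash, assuming SHA-256 as the fingerprint and a simple "seen N times with no complaints" rule. The ReputationService class, its threshold, and its verdict strings are hypothetical; Windows SmartScreen and the AV vendors use their own, unpublished signals. It shows the two properties described here: changing even one byte yields an unrelated fingerprint, and a brand-new build starts with no reputation at all.

```python
import hashlib


def fingerprint(code: bytes) -> str:
    """SHA-256 digest of the exact bytes of an executable."""
    return hashlib.sha256(code).hexdigest()


class ReputationService:
    """Toy reputation tracker keyed on file hashes (hypothetical, not any vendor's API)."""

    def __init__(self, min_sightings: int = 1000):
        self.min_sightings = min_sightings
        self.sightings = {}   # digest -> how many times this exact file has been seen
        self.complaints = {}  # digest -> how many problem reports it has drawn

    def observe(self, code: bytes, complaint: bool = False) -> None:
        digest = fingerprint(code)
        self.sightings[digest] = self.sightings.get(digest, 0) + 1
        if complaint:
            self.complaints[digest] = self.complaints.get(digest, 0) + 1

    def verdict(self, code: bytes) -> str:
        digest = fingerprint(code)
        if self.complaints.get(digest, 0):
            return "suspicious"
        if self.sightings.get(digest, 0) < self.min_sightings:
            return "unknown"  # e.g. a freshly built utility nobody has downloaded yet
        return "trusted"


svc = ReputationService(min_sightings=3)
build = b"MZ\x90\x00 hypothetical utility, build 1"
for _ in range(3):
    svc.observe(build)
print(svc.verdict(build))                          # trusted

rebuilt = build + b"\x00"                          # append a single byte
print(fingerprint(build) == fingerprint(rebuilt))  # False
print(svc.verdict(rebuilt))                        # unknown
```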

So, you know, here we had this brand-new, never-before-seen cryptographic signature, and Windows said, whoa, what's this? Where did this come from? Off with its head. And actually, part of it was my fault. I was in a hurry to get the code into everyone's hands, so I hadn't stopped to digitally sign the executable file with GRC's code-signing certificate. As soon as the first several complaints came in, I did that, and now things appear to have calmed down, since GRC has a spotless reputation; we've never had an incident of any kind. But even so, code-signing certificates do get stolen. Mine are all locked up in hardware security modules, so at least they can't be stolen easily. You'd have to have physical proximity, and that's unlikely to happen because Level 3 is behind multiple barriers and guards and cameras and alarms and everything. But the point is, in general, certificates do get stolen. So just being signed by someone, even someone with a perfect reputation, isn't 100 percent assurance. Yeah, I did just check ValiDrive with VirusTotal, and there were three false-positive, quote, "detections," unquote, after querying 66 antiviral systems. Cybereason, Cylance and Trapmine didn't like it, but no one else had any complaints. So, as I said, majority voting: I've never seen any of my stuff objected to by more than a handful. But anyway, it certainly can happen.
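The "majority voting" reading of a multi-engine scan can be expressed as a tiny rule. This is an illustrative sketch, not how VirusTotal itself scores results; the function name and the wording of the verdicts are assumptions. With the figures mentioned in this episode, 3 of 66 engines is a minority flag, and 0 of 43 is clean.

```python
def majority_verdict(detections: int, engines: int) -> str:
    """Read a multi-engine scan by simple majority (an illustrative rule only)."""
    if engines <= 0 or not 0 <= detections <= engines:
        raise ValueError("detections must be between 0 and the number of engines")
    if detections == 0:
        return "clean"
    if detections * 2 > engines:
        return "flagged by majority"
    return f"minority flag ({detections}/{engines})"


print(majority_verdict(3, 66))   # minority flag (3/66)
print(majority_verdict(0, 43))   # clean
print(majority_verdict(40, 66))  # flagged by majority
```

A rule like this says nothing about why the minority fired; as discussed above, heuristic engines can flag perfectly legitimate but unusual behavior, which is why reputation ends up doing most of the work.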

As for Winaero Tweaker, I just grabbed a copy of the setup executable from winaero.com, which is the publisher, and I dropped it onto VirusTotal and received a 100 percent clean bill of health, with VirusTotal saying that zero out of 43 scanners found it to be suspicious. I noted, however, that the executable setup program was not signed, which would make me suspicious and uncomfortable, since a signature is almost becoming required these days. A digital signature on executable content is something I always check for now, and needless to say, I always and only obtain such programs, especially if they're unsigned, from the original website source. You don't want to get it from somebody who's like, oh yeah, we also offer it for download. But for what it's worth, version 1.55, which I just downloaded directly from their site, looks fine, other than it not being signed. I did note that it was published in June, so if Michael had grabbed it shortly after its publication, also not being signed, he could have found that VirusTotal, or whatever, hadn't yet had a chance to get to know it, for other people to upload it and say, what do you think? Scan this for me. At this point it looks like it's fine. But anyway, the point is, can it false-positive? Yes; unfortunately, it happens to me all the time. Anything that's been around for a few months will have acquired a reputation, and that reputation is now really the only shield that a program has. So that's the current status of AV scanning.

Rick said: Steve, on acceptable ads and uBlock Origin, how are you doing it? I looked around but only found this old thread. He provided a link in his tweet. He said: While it's true that the list it points to is current, gorhill himself slammed it, though what he's saying about that particular list doesn't seem to apply anymore.
Okay, so I did some digging and refreshing of my memory, and it turns out that I was wrong about uBlock Origin and acceptable ads. We discussed all of this back in 2014, when it was all happening, but I'd forgotten the details.

uBlock was initially developed by a guy named Raymond Hill, better known by his handle, gorhill, and it was released in June of 2014. The extension relied upon community-maintained block lists, while also adding some extra features. Not long after that, Raymond transferred the project's official repository to a guy named Chris AlJoudi, since he was frustrated with all of the incoming requests. That's sort of gorhill's style, you know, curmudgeon that he is. We remember him sort of fondly, along with John Dvorak.

It turns out that Chris, the guy who obtained the repository, was somewhat less than honest and respectful. He immediately removed all credit from Raymond Hill, portraying himself as uBlock's original developer, and he started accepting donations, showcasing overblown expenses to turn the project into a profit center. Rather than development, Chris was focused more on the business and advertising side, wanting to milk uBlock for all it was worth. Consequently, gorhill decided to simply continue working on his extension, but that unfortunately resulted in a naming collision, where Chrome saw Chris's uBlock as being the original and gorhill's as being the interloper. So gorhill lost, and Chrome yanked his from the extension repository. Thus was born uBlock Origin.

And here comes the difference that matters. The original uBlock worked with the acceptable ads policy and still does, but gorhill, being gorhill, wasn't interested in making any exceptions to his extension's ad blocking, especially when exceptions under the acceptable ads policy had the reputation of being available to the highest bidder. That's not his style at all. Having watched all this drama unfold at the time, we all went with the original extension's original author, since no one felt any particular sympathy for Chris, whose conduct did not appear to be very honorable. And choosing uBlock Origin also meant no longer being able to allow acceptable ads, which I would otherwise have no problem doing. So that's the story. I am still disinclined to move away from uBlock Origin, since I have the strong sense that curmudgeonly Raymond Hill will always have our backs. I feel much less sure of that from Chris AlJoudi, who is the guy behind uBlock, based on his conduct after getting it. So anyway, Rick, you're right, I was wrong about acceptable ads. I'm glad you brought it up, and I was able to correct the record.

Somebody whose Twitter handle is Love Thy Neighbor said: Steve, can the ReadSpeed utility analyze a drive connected via a USB port? It appears that I can only see drives connected and enumerated on the internal IDE, SATA or SCSI buses of the computer. Is there a way to have ReadSpeed analyze a USB-connected drive? I faithfully listen to Security Now, so I hope to hear your response there, as I am not on Elon's repugnant X site. SpinRite user since version one and listener to Security Now since episode one. Thanks for all you do. Okay, Love Thy Neighbor, unfortunately the short answer is no.

The ReadSpeed DOS utility was a natural offshoot of the early work on SpinRite 6.1. Specifically, I believed that I had nailed down the operation of what would become 6.1's new native IDE/ATA and AHCI drivers with parallel and serial ATA drives, and we had discovered the surprisingly slow performance at the heavily used front end of many SSD devices. Since I thought that ReadSpeed might be broadly useful, it was spun out as an offshoot along the way. So it won't be until we get to SpinRite 7 that USB and NVMe devices will be added to that collection. That said, I do expect to be dropping some similar freeware in the early days of SpinRite 7's development, since I'll be anxious to get feedback about this emerging software's dual booting over BIOS and UEFI. And, as we know, I'll be writing it under a new operating system, RTOS-32. So at that point we will finally be able to talk natively to all drives, which will be the real benefit going forward for 7 and beyond.

Austin Wise tweeted regarding man-in-the-middle attacks and HTTPS on Security Now 937. Boy, it's going to be a long time, Leo, before I'm able to live down my comment that I don't think there's anything that wrong with HTTP. There's still some place for it. I don't think any of our listeners agree.

1:14:32 - Leo Laporte

Well, you know, Dave Winer, who is the father of RSS, wrote a very nice piece on his Scripting News blog that said this: HTTP was critical to the development of the web because it's easy to implement, and it should still be used in cases where it's safe to use. And there are plenty. There's a lot to be said for simplicity, especially so that anybody can create a site.

1:15:02 - Steve Gibson

Yeah. In fact, somewhere I just saw, I don't know where it was, something talking about the nature of security, and it said, keep it simple, keep it simple, keep it simple. And we know how many times we've talked about complexity being the enemy of security.

1:15:18 - Leo Laporte

It's not hard to generate a Let's Encrypt certificate and make your site HTTPS. I've done it in a number of places. But still, there are places where there's no login, there are no passwords in the DOM, there's no reason that you should worry about a man in the middle, and I think those sites should be allowed to continue with HTTP. But Google doesn't think so, that's for sure.

1:15:45 - Steve Gibson

Well, Leo, you just raised a perfect example. You could have TLS 1.3 with the fanciest, longest-bit, you know, encryption key, and if you've got a funky extension in your web browser, it doesn't matter. Even with HTTPS, it's still sucking all your passwords and Social Security numbers and credit card details right off to wherever. Holy cow. Lower Mongolia. I think, actually, Lower Mongolia, I think they're able to get iPhones, but not Russia and China.

1:16:15 - Leo Laporte

Good yeah for all the security researchers there.

1:16:19 - Steve Gibson

We have a listener from Google. He said: If an attacker is on a local network like a coffee shop Wi-Fi, they might not need a privileged position (which, of course, was what I was talking about last week) to modify traffic. See ARP spoofing. Right, yeah.

1:16:39 - Leo Laporte

Right, and the widespread availability of things like the Wi-Fi Pineapple makes that something even not-so-sophisticated people can do.

1:16:48 - Steve Gibson

Yes. He said, also, the integrity features of TLS are useful. Even if you trust the network, random bit flips in packets, like from a misbehaving router, will be detected and cause the connection to terminate. That's a good point. This prevents downloading corrupted data. That's true, although, you know, the underlying protocols do have checksums which also catch that, and that's why retransmissions often happen. And he said: Regarding Leo's mention of companies using HTTP services for internal sites, this is true of Google, which pervasively uses sites like http://go/ (no dot in it, just "go") for short links, and http://b/ (just the letter b) for bugs, and more. But we have a proxy auto-config file in our browsers that makes sure all such services are sent over an HTTPS proxy to prevent man-in-the-middle attacks. All that said, I hope browsers continue to support HTTP for years to come. It is such a versatile protocol. There you go. It would be a shame to lose it completely. There you go. Love the show, Austin.
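To make the checksum-versus-TLS-integrity point concrete, here's a sketch assuming the 16-bit ones' complement Internet checksum from RFC 1071 (used by IP, TCP and UDP) and HMAC-SHA256 as a stand-in for TLS's keyed integrity protection (TLS 1.2 cipher suites may use HMAC, while TLS 1.3 uses AEAD ciphers). A checksum catches accidental bit flips, but because it involves no secret, anyone who alters the data can simply recompute it; a keyed MAC can't be forged without the session key. The packet contents, keys, and helper names are hypothetical.

```python
import hashlib
import hmac


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement checksum from RFC 1071 (used by IP, TCP and UDP)."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return ~total & 0xFFFF


def checksum_ok(data: bytes, checksum: int) -> bool:
    """What a receiver does: recompute over the received bytes and compare."""
    return internet_checksum(data) == checksum


def mac_ok(key: bytes, data: bytes, tag: bytes) -> bool:
    """Keyed check: only someone holding `key` can produce a matching tag."""
    expected = hmac.new(key, data, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


packet = b"GET /index.html HTTP/1.1\r\n"
good_sum = internet_checksum(packet)

# 1) Accidental single-bit flip: the checksum catches it.
flipped = bytes([packet[0] ^ 0x01]) + packet[1:]
print(checksum_ok(flipped, good_sum))  # False

# 2) Deliberate tampering: the attacker just recomputes the checksum to match.
tampered = packet.replace(b"index", b"evil!")
print(checksum_ok(tampered, internet_checksum(tampered)))  # True

# 3) With a keyed MAC, the attacker can't produce a valid tag without the session key.
session_key = b"hypothetical session key"
attacker_tag = hmac.new(b"attacker guess", tampered, hashlib.sha256).digest()
print(mac_ok(session_key, tampered, attacker_tag))  # False
```

So both points stand: lower-layer checksums do catch random corruption, but only a keyed check like TLS's also defeats someone who is deliberately rewriting the traffic.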

1:18:06 - Leo Laporte

That's sensible. That's a sensible answer, yep.

1:18:10 - Steve Gibson

Austin sounds like a Google engineer, and he makes some great points. Way back in the early days of this podcast we spent a good deal of time (Leo, you'll remember fondly) exploring the details of low-level attacks such as ARP spoofing. For those who don't know, ARP stands for the Address Resolution Protocol. It's the protocol glue that links the 48-bit physical Ethernet MAC addresses of everyone's Ethernet hardware to the 32-bit logical Internet Protocol addresses, IP addresses, which the Internet uses. When Internet Protocol data needs to go to someone, it's addressed to them by their IP address but sent to their Ethernet MAC address. The ARP table provides the lookup between their current IP address and their device's physical Ethernet MAC address, and it's the ARP protocol that's used to populate and maintain all of the ARP tables on the local Ethernet network.
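A toy model of the ARP cache described here, and of how an unauthenticated reply can overwrite the gateway's entry. The Host class and all addresses are hypothetical, and real operating systems differ in when they accept unsolicited replies, so this is a simplified sketch of the idea rather than any particular network stack's behavior.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A LAN host with a naive ARP cache that trusts every ARP reply it hears."""
    ip: str
    mac: str
    arp_cache: dict = field(default_factory=dict)  # IPv4 address -> Ethernet MAC address

    def on_arp_reply(self, sender_ip: str, sender_mac: str) -> None:
        # Classic ARP carries no authentication: the most recent claim simply wins.
        self.arp_cache[sender_ip] = sender_mac

    def next_hop_mac(self, gateway_ip: str) -> str:
        # Off-LAN traffic is addressed by IP but framed to the gateway's MAC.
        return self.arp_cache[gateway_ip]


GATEWAY_IP, GATEWAY_MAC = "192.168.1.1", "aa:aa:aa:aa:aa:01"
ATTACKER_MAC = "bb:bb:bb:bb:bb:66"

victim = Host("192.168.1.20", "cc:cc:cc:cc:cc:20")
victim.on_arp_reply(GATEWAY_IP, GATEWAY_MAC)   # legitimate reply from the router
print(victim.next_hop_mac(GATEWAY_IP))         # aa:aa:aa:aa:aa:01

victim.on_arp_reply(GATEWAY_IP, ATTACKER_MAC)  # spoofed, unsolicited reply
print(victim.next_hop_mac(GATEWAY_IP))         # bb:bb:bb:bb:bb:66
```

After the second reply, every frame the victim sends "to the router" goes to the attacker's machine instead; whether the attacker can read it then depends on whether it's protected by TLS.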

So here's the point that Austin was making. If someone can arrange to interfere with the proper operation of this Address Resolution Protocol, it's possible to confuse the data in the network's ARP tables to misdirect and redirect traffic to the wrong Ethernet device. ARP spoofing is able to cause exactly such misdirection. So Austin is 100% correct that in an open Wi-Fi setting it would be possible for an attacker to arrange to intercept traffic by, for example, causing clients of a router to believe that the attacker's MAC address was the address of the network's gateway, and thus they would send all their traffic there instead of to the router. And, you know, indeed, the use of TLS and HTTPS would completely prevent any such attacks. Well, actually, it would prevent their success. The traffic would still get routed to him, but he couldn't do anything with it, because it would be encrypted and he wouldn't have the ability to decrypt it. It would be much more complex to do so: he'd have to get a certificate that was trusted into the victim's browser somehow, and then perform full proxying of HTTPS and TLS. So again, I think we've pretty much established where things stand. HTTPS is definitely the way to be secure, but it's not enough, because you could have a hinky browser extension and still be in trouble. But it's definitely better than not. Yet HTTP is still useful. Jedi Hagrid tweeted:

My IT director at work suggested I message you. I found an SQL file containing user and employee information on a website, as well as social media secure tokens. I've tried calling the company. I signed up for LinkedIn Premium for the free month in order to message the COO, and I've tried telling Brian Krebs. Maybe I'm reading too much into this, maybe it's not that big a deal. You're the last person I'm going to notify, and if nothing happens, then I guess nothing happens.

The file is still on their server and you can see it here.

And he, this was a DM, so it was a private conversation between the two of us, sent me the link. I've redacted the domain name in the show notes. He continued: the file is, and again I've redacted the name, .sql. You can search it using Notepad++. There are .gov customer emails, people who are applying for jobs, addresses, everything in plain text. I'm not sure what else to do.

Okay, now, as I said, since the URL he provided, which was not redacted, definitely belonged to a major national company, I suggested that he shoot a report off to CISA. They have a web facility for receiving reports of things like this that people find, at https://www.cisa.gov/report, and they also accept email directly at report@cisa.gov. So for Jedi Hagrid and for all of our listeners, I thought that was useful to share: you can just send email to report@cisa.gov if you stumble across something that you think is big enough and worthy of coming to the attention of our cybersecurity agency, and they'll certainly know how to contact the right people and get their attention.

1:23:34 - Leo Laporte

Having said that, though, he may have just found, you know, a Pastebin with personal information that's part of a hacker site, you know? I mean, this stuff must be all over, right?

1:23:44 - Steve Gibson

No, it was on their site.

1:23:47 - Leo Laporte

It's on the site of the company.

1:23:50 - Steve Gibson

It's an active, open SQL file that they left exposed. Oh yes. And the company didn't want to hear about it.

1:23:59 - Leo Laporte

That's a you know.

1:24:01 - Steve Gibson

The receptionist said, what file? I don't know what you're talking about.

1:24:04 - Leo Laporte

Yeah, there is a scam. We fairly frequently get emails from people saying, I've found an issue on your website. I would like a bug bounty. Please don't ignore this, because it's a hazard. And usually they say something that shows that this is just a generic email, things like, you know, the logins on your website are compromised, or something. So companies get this stuff all the time, and it's a known scam. So, right, maybe that's why they're ignoring it.

1:24:34 - Steve Gibson

Yeah, in this case they shouldn't, because it's an actual live SQL file on their site. And, you know, if I told you the name of the company, you'd be like, what? Oh, okay.

1:24:45 - Leo Laporte

Got it. Okay, yeah, I hope CISA pays attention, that somebody will pay attention.

1:24:52 - Steve Gibson

If they get this email, the CEO will be getting a call from the government. So anyway, two last quick ones. Simon Croft, oh eight, said: Hi, Steve. On SN 396 you talked about multi-level cells in SSD storage. This is also a concern with USB thumb drives and SD cards. When used in industrial applications, SD media may be storing programs controlling machines where errors cannot be tolerated. Industrial environments will have voltage surges, spikes and transients which can flip bits. Consequently, vendors are now selling SLC, single-level cell, SD cards specifically for this market. The capacities are much smaller because of this, typically around two gigabytes, but that's plenty for most controllers. Cheers, Simon. P.S.

The heuristics story was an ouch. Glad I'm now retired from sysadmin life. So anyway, I thought this was an interesting angle. The inherently lower reliability of multi-level cell storage is well understood, and in environments where endurance and reliability trump maximum storage density, SLC has a much better chance of remaining solid. And finally, Martin Biggs brings us the "Duh, why didn't I think of that?" head-smacker of the week.

Martin writes: Hi, Steve. I'm listening to this week's episode, and that was last week's, episode 937, of Security Now. You've just described that you can get VirusTotal to check a file before you download it. The problem with this, though, is that if a malicious site recognizes that VirusTotal is downloading the file, then the site can serve VirusTotal a safe version. Then, once you're secure in the knowledge that VirusTotal says the file is safe, the malicious site can happily serve you the malicious file. As I cannot find a way of downloading the file directly from VirusTotal, that is, once they've received it, he says, I think the better option is to download the file onto your computer, then upload it to VirusTotal yourself for checking. This way you can be certain that it is checking the same file that you have.

Thank you for the podcast, and I'm glad that you've decided to continue past those dreaded three nines. Regards, Martin.

As I said, that's a head-smacker. I don't know what a download query from VirusTotal looks like, or whether they may have taken any precautions to mask their downloading. But Martin is 100% correct. As long as the possibility exists that VirusTotal would be receiving and checking a different file than the one the user downloads, there is no choice but to get it first and provide it to VirusTotal. So, nice catch, Martin, and thank you. And Leo, on that note, we'll take our last break and then look at Apple saying no and the exclusive club that they have now joined.
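If you want to be sure VirusTotal's verdict covers the very bytes you have, one option, sketched here in Python using only the standard library's hashlib, is to compute the SHA-256 of the file exactly as it landed on your disk. VirusTotal identifies the files it has analyzed by hashes like this one, so searching for that value, or comparing it with the SHA-256 shown on the report after you upload the file yourself, ties the result to your copy. The function name `sha256_of_file` is just an illustrative choice, not anything VirusTotal prescribes.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of the file exactly as it sits on disk."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # Read in chunks so large downloads don't have to fit in memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Call it as `sha256_of_file(Path("downloaded_file.exe"))`, where the filename is a placeholder. If a search for the resulting hash turns up nothing, VirusTotal has never seen that exact file, and uploading it yourself, as Martin suggests, is the way to get it scanned.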

1:28:42 - Leo Laporte

You mean ClubTwit, for just $7 a month, with ad-free versions of all the shows?

1:28:47 - Steve Gibson

That's what I mean.

1:28:48 - Leo Laporte

Apple is a member of that, right? No, it's not. No, but you should be. If you're not a member, join ClubTwit. That's all I'll say.

Hey, here is the Thinkst Canary. You know, we've talked about this before. This is the canary in your network's coal mine, if you will, a honeypot that is trivial to deploy and sends very high-quality signals that something has gone wrong. And you want to know that, because most companies discover they've been breached long after the fact. I mean, look at the headlines, you know. Sony, it was what, six months? Marriott, the Starwood group, it was three years.

This is the problem. When these guys get in, they get past your perimeter defenses, then they hide. They don't want you to know they're there while they explore. And man, do they explore. What are they looking for? Oh, all sorts of stuff. Valuable documents they can use against you. Data and information about employees and customers they can blackmail you with. And, of course, if they're a ransomware gang, they're looking for everywhere you back up your data, to make sure they encrypt that too. You don't want these cockroaches. You don't want them in your network, trust me. But thank goodness we've got this.

With Canary, you can be anything you want to be. Each canary can be anything from a Windows IIS server to a Windows server itself; this one's a NAS. I set it up to be a Synology NAS, and in every case it looks real. I mean totally real. They don't look vulnerable, they look valuable. The MAC address matches, the login screen's identical. There's nothing to let a bad guy know that he has found a tripwire. But he has, and when he accesses it you will be alerted. Canary tokens can also be created. Every canary device can create canary tokens, which are files, PDFs, DOC files, spreadsheets, whatever you want them to be, spread around your network. So every corner of your network can have a little tripwire waiting for the bad guy to show up. Three minutes of setup, no ongoing overhead. This thing sits quietly on our network, and I have to say we have not had one false positive from it. We did get one real alert, which I acted on, and we removed the device immediately. This will let you know that attackers are in your network long before they dig in, usually in the first day, as soon as they start exploring, if you've put these in the right places. You know, I've got some canary tokens called, like, payroll.xls, always a good one, or social security numbers. Don't be too obvious. Maybe just, you know, I don't know, employee directory would probably be enough to get a bad guy to look at it, right? And that's a reasonable file to have on one of your computers.

These canaries follow the Thinkst Canary philosophy: trivial to deploy, with ridiculously high signal quality. Every customer gets their own hosted management console. You use that to configure your canaries, to configure your settings, to manage your canaries, to handle events, because you can be notified by the console, but not just the console: email, text messages, syslog. There's an API, so you could write your own tool. Webhooks, so it could use Slack. I mean, it's really kind of unlimited. However you want to be notified, you will be, but you won't get those false positives. You won't be bugged. When you hear from your Thinkst Canaries, something's amiss, something's awry. If someone browses a file share or opens a sensitive-looking document, you're going to get an immediate alert. And it's rare to find a security product that doesn't inconvenience people, that people don't mind, and it's near impossible to find one that customers actively love. But that's this Canary. Hardware like this, VM-based, cloud-based: Canaries are deployed and loved on all seven continents. If you don't believe me, go to canary.tools/love for emails and tweets full of unsolicited, verified customer love from some of the biggest CISOs, names you'll recognize, for the Thinkst Canary.

Let me give you the pricing. Everything's up front here. Go to canary.tools/twit and please do that. Let's see, for a small operation like ours, a few handfuls is enough. A big bank might have hundreds. A casino back-end operation might have even more, right? You want to put them in every corner. You want to set up the canary tokens so that the bad guy will immediately trip an alarm when they start rummaging around. You don't want to make it hard to find them, right? Let's say five, okay, because you can create an unlimited number of tokens with those.

Put five hardware devices out there: $7,500 a year. You get your own hosted console. You get upgrades, support, maintenance. It's free. If you sit on it, if you step on it, if it falls out of a window, they'll send you another one right away. I'm going to get you an even better discount if you use the offer code TWIT in the How Did You Hear About Us? box: 10% off the price, and not just for the first year but forever. So that's a good discount. Offer code TWIT.

And while I think these are the best thing since the original honeypot Bill Cheswick wrote about, I think you should know, because it'll make you feel better, that Thinkst offers a two-month, 60-day money-back guarantee for a full refund if, for any reason, you don't want it. So there's no risk at all. Now, I should point out, no one in all the years we've been doing these ads, and it has been many years now, no one has ever asked for that refund, which tells you another thing about these things. Once you get one in your network, you're happy. It makes you... you can sleep a little bit better at night. It's worth it.

canary.tools/twit, offer code TWIT, for 10% off for life. Put that in the How Did You Hear About Us? box. Look, if you've got a network, you've got your perimeter defenses. That's all well and good. You may even have total confidence in them, but wouldn't you like to have just a little bit of a warning if somebody does get in? That's what these are for. canary.tools/twit, the offer code TWIT. We thank Thinkst. They've been a sponsor of this show forever, and they really make a great product, and we thank all of our listeners who are using these. We know many, many are, all over the world. Now let's talk applesauce with Mr. Gibson.

1:35:43 - Steve Gibson

So, in a rare occurrence, Apple chose to publicly share a mildly threatening private letter it received last Wednesday, which was addressed to Apple's CEO, of course, Tim Cook. The letter was from a CSAM, you know, child sexual abuse material, activist by the name of Sarah Gardner, and Apple must have decided that their best strategy was to get out ahead of this, since they shared Sarah's letter as the preface to theirs, which they also shared in full, and I mean shared publicly. In terms of the way the future is going to take shape, the biggest thing happening today, I think, in the public policy sphere is the debate and struggle over the tradeoff between privacy and surveillance. The devices we all now carry with us 24/7 are capable of providing more of either privacy or surveillance than anything ever before. Since this is a significant move on Apple's part, representing a definitive change of stance and policy, I want to share first Sarah's unsubtle letter, followed by Apple's response. So, this Sarah Gardner: in the letter that was published, the addressee was redacted, but I'm sure it was written to Tim Cook. She said: Dear Tim, two years ago, at this exact time, we were so excited that Apple, the most valuable and prestigious tech company in the world, acknowledged that child sexual abuse images and videos have no place in iCloud.