Security Now 970 Transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show.

0:00:00 - Leo Laporte

It's time for Security Now. Steve Gibson's here. I'm at... well, not here, I'm at my mom's house, but we do have a great show. It's a propeller beanie show this week. He's going to explain what race conditions are and why they contribute to problems like Spectre and Meltdown. We've got the latest numbers on the massive five-year-old AT&T data breach. You won't believe how many customers are affected. You'll also be curious to know who just leaked more than 340,000 Social Security numbers, Medicare data and more, and what you can do about it. And GDPR transparency requirements: you know those cookie pop-ups? Are they honored? What do you think? We've got the deets, all coming up next on Security Now.

0:00:49 - Steve Gibson

Podcasts you love, from people you trust. This is TWiT.

0:00:58 - Leo Laporte

This is Security Now with Steve Gibson, episode 970, recorded April 16th, 2024: GhostRace. It's time for Security Now, the show where we cover the latest news about what's happening in the world of Internet security: bad guys, good guys, black hats, white hats. I am broadcasting from the East Coast this week. I'm at Mom's house. Steve Gibson never leaves his fortress of solitude. Hello, Steve.

0:01:29 - Steve Gibson

Why would I ever leave? And Leo, this is not a green screen behind me. Oh, as Andy, or was it... no, it was one of your guys on MacBreak Weekly who said, no, this is not a green screen, this is... yeah.

0:01:42 - Leo Laporte

Jason has the same background, whether he's real or not, and I always have to ask him. But if this were a green screen, could I do this?

0:01:54 - Steve Gibson

That's a very nice little bunny.

0:01:56 - Leo Laporte

That's a very nice bunny. I have lots of Mom's tchotchkes behind me, yeah.

0:02:03 - Steve Gibson

I think I grew up with one of those clocks at my grandparents'. Oh yeah, that's a classic. Isn't that neat? There's a name for it, with a big gold pendulum that...

0:02:14 - Leo Laporte

Yep, it's a steeple clock. I can't remember... there is a name for it. Yeah, lots of antiques here in beautiful Providence, and in fact, your own mother could be considered... Yes, absolutely, although she doesn't live here anymore.

She's at the home, but she's loving it. It's an assisted living facility. She loves it. She's in memory care because she has no ability to form memories at all. You know, she won't remember that I was there today, but she remembers everything else perfectly. It makes for a really interesting conversation. And she's as jolly and happy as ever. I said, sometimes people get grumpy when they can't remember from day to day. She says, no, no, it's good, I don't...

0:02:56 - Steve Gibson

Everything's fresh and new. I had one of my very good lifelong friends, who is just no longer on the planet. We had been driving to each other's place to go see a movie together for decades, and one day he said, "I've lost my maps." And I said, "What?" And he said, "Everything's fine, but apparently I had a little stroke and I don't know where anything is anymore." Interesting. And it was so selective, so precise. I mean, it was just his mapping center that was lost. So I said, "Well, okay, I'll pick you up instead of the reverse." That's what he said: "I lost my maps." He realized he didn't know how to go places. Interesting, but everything else was still there. The little vessel that burst took out just one little zone of the brain, and that's gone.

Okay. So this week, oh boy. Probably the most consistent feedback that we have received through the life of this podcast is that our listeners love the deep technology dives. So, oh, get your scuba equipment. Oh god. Today's podcast is titled GhostRace: "race" as in race conditions and "ghost" as in Spectre. What has been found by a team of researchers at VU Amsterdam and IBM is yet another problem with our... Well, in order for today's revelation to make sense, it's going to be necessary for our listeners to understand preemptive multithreading environments, thread concurrency, and the synchronization of shared objects. That may sound like a lot, but everyone's going to be able to go, "Oh, you know, that's so cool," by the end of this podcast.
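For readers who want something concrete before the deep dive, here is a minimal Python sketch of the classic race condition on a shared object. It is not from the show, and it is not GhostRace itself (which concerns speculative execution); it only shows the ordinary, architectural race that the episode builds on. Several threads perform an unsynchronized read-modify-write on one counter, so increments can be lost, while the same sequence guarded by a `threading.Lock` cannot lose any. The `Counter` class and `run` helper are illustrative names, and the `time.sleep(0)` call is there only to invite a thread switch inside the race window.

```python
import threading
import time


class Counter:
    """A shared integer that several threads increment."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Unsynchronized read-modify-write: another thread may run between
        # the read and the write, so its increment can be overwritten (lost).
        current = self.value
        time.sleep(0)  # invite a thread switch inside the race window
        self.value = current + 1

    def increment_safe(self) -> None:
        # Holding the lock makes the read-modify-write mutually exclusive
        # among threads that also take the lock, so no update is lost.
        with self._lock:
            current = self.value
            time.sleep(0)
            self.value = current + 1


def run(use_lock: bool, threads: int = 4, per_thread: int = 1000) -> int:
    """Start `threads` workers that each increment the counter `per_thread` times."""
    counter = Counter()
    increment = counter.increment_safe if use_lock else counter.increment_unsafe

    def worker() -> None:
        for _ in range(per_thread):
            increment()

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter.value


if __name__ == "__main__":
    expected = 4 * 1000
    print("expected:    ", expected)
    print("without lock:", run(use_lock=False))  # may come out below expected
    print("with lock:   ", run(use_lock=True))   # always equals expected
```

Run as a script, the locked total always matches the expected 4,000, while the unlocked total may or may not fall short on any given run. That nondeterminism is exactly what makes race conditions so hard to reproduce and debug.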

So we're going to talk about an update on that massive five-year-old AT&T data breach; also, who just leaked an additional 340,000 Social Security numbers, Medicare data and more, and what that means. I know, it's just... gosh, really. Are websites honoring their cookie banner notification permissions, and why do we already know the answer to that question? What surprise have the GDPR's transparency requirements just revealed? And then, after sharing a bit of feedback from our listeners, as I said, oh boy: given the feedback that I've had about what people like when we do it on this podcast, we're going to go deeper into raw, fundamental computer science technology than we have in a long time. And I'll just caution our listeners: it may be inadvisable to operate any heavy equipment while listening to that portion of today's presentation.

0:06:41 - Leo Laporte

Well, I always call these (and I think you do too) the propeller hat episodes, and we love the propeller hat episodes. So get your beanie ready. We're going to talk about some deep stuff and, of course, our picture of the week this week, which I have not seen, so you'll get a clean take from me on this one. But first I want to tell you about our fine sponsor. The show today is brought to you by Kolide, with a K: K-O-L-I-D-E. I know you know about them because I talk about them all the time. Good news: they were just acquired by 1Password. Now, that's pretty big news. Both companies are leading the industry in creating security solutions that put users first.

For over a decade, Kolide Device Trust has helped companies with Okta ensure that only known, secure devices can access their data, and that's what they're still doing now as part of 1Password; in fact, even better than ever. So if you use Okta and you've been meaning to check out Kolide, don't hesitate. This is a perfect time to do it. Kolide comes with a library of pre-built device posture checks, which you may want to use as the default, but you can also write your own custom checks, which means you can assure the security of just about anything you can think of. If you want to check to make sure the version of Plex on that DevOps laptop is up to date, you can do that and a whole lot more. And hey, here's some really good news: you can use Kolide on devices without MDM, which means your Linux fleet, contractor devices, every BYOD phone and laptop in your company. That's really good news.

Now that Kolide is part of 1Password, it's only going to get better. Check it out at kolide.com/securitynow. Learn more, watch the demo today. That's K-O-L-I-D-E, kolide.com/securitynow. We thank them so much for their support of Security Now. And Steve, I bet you Kolide was thrilled to be right next to the picture of the week.

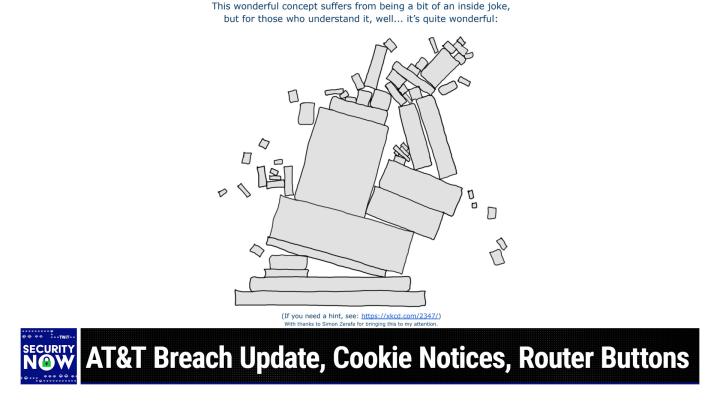

0:08:47 - Steve Gibson

It's always a fun part of the show. Okay, so this is just a wonderful picture. I gave it the headline "This Wonderful Concept Suffers from Being a Bit of an Inside Joke," but for those who understand it... I get it. Well, it's quite wonderful. ...the thankless maintenance of some random piece of open source software upon which the entire Internet's infrastructure has been erected. It's a sequel to a famous xkcd cartoon, xkcd.com/2347. Below the picture, I put, "If you need a hint, see xkcd.com/2347."

0:09:43 - Leo Laporte

Which is a perfect little sandcastle of blocks and then giant things, all relying on one little tiny brick.

0:10:00 - Steve Gibson

Yep, yep. So anyway, today's picture is what happens if the brick gets pulled or the maintainer retires, which has been a problem. Yeah, exactly. I mean, we don't know how to launch shuttles anymore because all the shuttle guys are gone. It's like, "What do you mean I need a different kind of epoxy for this tile on the bottom of the ship?"

0:10:21 - Leo Laporte

We don't know how to build spacesuits. Did you see that? Nobody knows how to build those original Apollo spacesuits. They're starting from scratch. Wow.

0:10:30 - Steve Gibson

Okay, so I want to follow up on our report from two weeks ago about the massive and significant AT&T data breach. Reading Bleeping Computer's update, I was put in mind of the practice known as rolling disclosure, where the disclosing party successively denies the truth, only admitting to what is already known when evidence of it has been presented. The trouble here, of course, is that AT&T is a massive, publicly owned communications carrier that holds deep details about its US consumer users, and, as this incident suggests, it could be acting far more responsibly. Moreover, by not having done so for the past five years, the damage inflicted upon its own customers has likely been far worse than it needed to be. I mean, it's just such bad practice. Anyway, Bleeping Computer's reporting was headlined "AT&T now says data breach impacted 51 million customers," and the annoyance of this piece's author is readily apparent. I've edited it just a bit to be more clear for the podcast, but Bleeping Computer posted this. They said: AT&T is notifying 51 million former and current customers, warning them of a data breach that exposed their personal information on a hacking forum. However, the company has still not disclosed how the data was obtained. These notifications are related to the recent leak of a massive amount of AT&T customer data on the Breach hacking forums that was first offered for sale for $1 million back in 2021. When threat actor ShinyHunters first listed the AT&T data for sale in 2021, AT&T told Bleeping Computer that the collection did not belong to them and that their systems had not been breached. Last month, when another threat actor known as Major Nelson (I guess he's an I Dream of Jeannie fan) leaked the entire data set on the hacking forum, AT&T once again told Bleeping Computer that the data did not originate from them and that their systems had not been breached.
After Bleeping Computer confirmed that the data did belong to AT&T and DirecTV accounts, and TechCrunch reported, as we covered two weeks ago, that AT&T's logon passcodes were part of the data dump (a little hard to deny that one), AT&T finally confirmed that the data did belong to them. You know, it's like, "Oh, that data." Uh-huh. Right. Anyway.

While the leak contained information from more than 70 million people, AT&T now says that it impacted a total of 51,226,382 customers. So apparently they've been doing some research into that data. AT&T's most recent notification states, quote, "The exposed information varied by individual and account, but may have included full name, email address, mailing address, phone number, Social Security number, date of birth, AT&T account number and AT&T passcode. To the best of our knowledge, personal financial information and call history were not included." Oh goody, so call history's not there. Who cares? They said, "Based on our investigation to date, the data appears to be from June 2019 or earlier." Bleeping Computer contacted AT&T asking why there's such a large difference in impacted customers. They said, "We are sending a communication to each person..." That is, again, 51,226,382 people. Yeah, 51,226,382. What? Wasn't it like eight or nine million when this all began?

That's right. They've done a little more research and said, well, it's a little worse than we first told you. What, 51 million? That's the definition of a rolling disclosure. Yes. So, oh yeah, it's like, "Oh, that data. Oh yeah, yeah, the ones with the PINs."

0:15:36 - Leo Laporte

Oh yeah, that's right. Yeah, a little hard to deny that one. Well, they think maybe a contractor. That's the thing: they don't know. They're, you know...

0:15:44 - Steve Gibson

So, yeah. But they said, "We're sending a communication to each person whose sensitive personal information was included. Some people had more than one account in the data set, and others did not have sensitive personal information," because Bleeping Computer said, wait a minute, you said more than 70 million, but now you're sending notices to 51,226,382. Anyway, the company has still, writes Bleeping Computer, not disclosed how the data was stolen and why it took them almost five years to confirm that it belonged to them and to alert their customers. So, okay, I can understand that today, five years downstream, they may not know how it was stolen. It's at least believable that if they never looked, they would never have found out. But the denial of the evidence they were shown years ago is difficult to excuse. They were first shown this in 2021. And it does appear that it's not going to be excused. Bleeping Computer said: Furthermore, the company (meaning AT&T) told the Maine Attorney General's office that they first learned of the breach on March 26th, 2024, yet Bleeping Computer first contacted AT&T about it on March 17th, and the information was first offered for sale in 2021. While it is likely too late, as the data has been privately circulating for years, AT&T is offering (this is so big of them) one year of identity theft protection, whatever that means, and credit monitoring services through Experian, with instructions enclosed in the notices. The enrollment deadline was set to August 30th of this year, so a few months from now, but exposed people should move faster. Yeah, all 51 million of you. To protect themselves, recipients are urged to stay vigilant, monitor their accounts and credit reports for suspicious activity, and treat unsolicited communications with elevated caution.
For the admitted security lapse and the massive delay in verifying the data breach claims and informing affected customers accordingly, AT&T is, not surprisingly, facing multiple class action lawsuits in the United States. Considering that the data was stolen in 2021, cybercriminals have had ample opportunity to exploit the data set and launch targeted attacks against exposed AT&T customers. However, finishes Bleeping Computer, the data set has now been leaked to the broader cybercrime community, so it is no longer privately circulated. Now everybody has it, the broader cybercrime community. That's right. This exponentially increases the risk for former and current AT&T customers. Okay, so just so we're clear here, and so that all of our listeners, new and old, understand the nature of the risk this potentially presents to AT&T's customers:

Armed with the personal data that has now been confirmed and admitted to having been disclosed (specifically, Social Security numbers, dates of birth, physical addresses and, of course, names), bad guys have all that's needed to apply for and establish new credit accounts in the names of creditworthy individuals. This is one of the most severe consequences of what is commonly known as identity theft, and it is a true nightmare. The way this is done is that an individual's private information is used to impersonate them when a bad guy applies for credit in their name, using what amounts to their identity. That bad guy then drains that freshly established credit account and disappears, leaving the individual on the hook for the debt that has just been incurred. Since anyone might do this themselves and then claim that it really wasn't them ("It must have been identity theft, Your Honor"), proving that it really wasn't you running a scam of your own can be nearly impossible, and, among other things, it can mess up someone's credit for the rest of their life. There's only one way to stop this, which is to prevent anyone, including ourselves, from applying for and receiving any new credit by preemptively freezing our credit reports at each of the three major credit reporting agencies. I know we've covered this before, at the beginning of the year in fact, but it bears repeating, and we may have some new listeners who haven't already heard this, or existing listeners who intended to take this action before but never got around to it.
So here's my point: in this day and age of repeated, almost constant, inadvertent online information disclosure, it is no longer safe or practical for our personal credit reporting to ever be left unfrozen by default. Rather than freezing our credit only if we receive notification of a breach that might affect us (and after all, that could take five years: 51,226,382 of AT&T's current and former customers are now being informed five years after the fact), everyone should have their credit always frozen by default. In fact, I can see some legislation in the future where somehow this is the policy, because it's still wrong that by default it is open. But of course, all the bureaus want it to be, because that's how they make money: by selling access to our credit, which we never gave them permission to do; they just took it. Then, once our credit is frozen by default, we only briefly and selectively unfreeze it when a credit report actually needs to be made available to some entity to whom we wish and need to prove our creditworthiness.

Anyway, the last time I talked about this, I created a GRC shortcut link for that podcast, which was number 956. But I want to make it even easier to get to this page, so you can get all the details about how to freeze your credit reports by going to grc.sc/credit. So just put into your browser grc.sc (as in shortcut) /credit. That will bounce your browser over to a terrific article at Investopedia that I verified is still there, still current and still great information.

On the heels of that news, another disclosure was just made and reported under the headline "Hackers siphon 340,000 Social Security numbers from US consulting firm." Greylock McKinnon Associates (GMA) has discovered a data breach in which hackers gained access to 341,650 Social Security numbers. The data breach was disclosed last week, on Friday, on Maine's government website, where the state issues data breach notifications. In its data breach warning mailed to impacted individuals, GMA stated that it was targeted by an undisclosed cyber attack in May of 2023 and, quote, "promptly took steps to mitigate the incident." Unfortunately, they apparently didn't promptly disclose it; not until now, almost a year later.

GMA provides economic and litigation support to companies and government agencies in the US, including the DOJ, that are involved in civil action. According to their data breach notification, GMA informed affected individuals (okay, they were informed) that their personal information was obtained by the US Department of Justice as part of a civil litigation matter which was supported by GMA. The purpose and target of the DOJ's civil litigation are unknown. A Justice Department representative did not return a request for comment. GMA stated that individuals who were notified of the data breach are, quote, "not the subject of this investigation or the associated litigation matters," adding that the cyber attack, quote, "does not impact your current Medicare benefits or coverage. We consulted with third-party cybersecurity specialists to assist in our response to the incident, and we notified law enforcement and the DOJ. We received confirmation of which individuals' information was affected and obtained their contact addresses on February 7th, 2024." So, whoops, it was almost a year before people were notified.

GMA notified victims that, quote (here it is), "your private and Medicare data was likely affected in this incident." Yeah, you've got to love the choice of the word "affected." You mean, as in obtained by malicious hackers? Yeah: names, dates of birth, home addresses, some medical and health insurance information, Medicare claim numbers and Social Security numbers. Finally, it remains unknown why GMA took nine months to discover the scope of the incident and notify victims.

GMA and its outside legal counsel, Lynn Friedman of Robinson & Cole LLP, did not immediately respond to a request for comment. So, as I noted above, this is now happening all the time. It is no longer safe to leave one's credit report unfrozen, and freezing it everywhere will only take a few minutes. Since you don't want to be locked out of your own credit reporting afterwards, however, be sure to securely record the details you'll need when it comes time to briefly and selectively unfreeze your credit in the future. And, Leo, let's take a break before we talk about cookie notice compliance, or lack thereof.

0:27:35 - Leo Laporte

By the way, you mentioned that we never gave the bureaus permission. You did, but it was in the very, very fine print of that credit card agreement, of every agreement you make. They put it in the fine print that they will submit information to the credit reporting agencies. OK, thank you, I'm glad you caught that.

Yeah, so you did agree to it, but it's, you know... I mean, the truth is, it's how the world works, because it is a sensible system for somebody who wants to lend you money to have some way of verifying that you're a good prospect. Yep. So every time you buy a car, rent a house, get a new cell phone, or set up with a cell phone carrier, they pull those credit reports. I freeze mine, though, and I think you're absolutely right. Everything I have is frozen, and, thanks to federal law, they can't charge you to unfreeze it. They used to charge you 35 bucks, and in some states it was more, to unfreeze it. They can't do that.

0:28:30 - Steve Gibson

And to add confusion, there's also the term "lock," which does not mean the same as "freeze." So it's freezing your report, not locking your report. Freeze it, don't lock it. Yep.

0:28:45 - Leo Laporte

There were some good show titles in there, but I know you already have one, so I won't belabor it. But "Freeze It, Don't Lock It" would be a good show title. I'll tell you what, we do have a very good advertiser bringing you this show.

This portion of Security Now is brought to you by... get ready, I know you know them: Bitwarden. We love Bitwarden. In fact, both Steve and I, after a certain other company let us down, shall we say, switched over to Bitwarden. It's a painless move, it's a great move, and it's one I have not regretted. I don't know about you, Steve, but I am a very happy Bitwarden customer.

It's the only open-source password manager that works at home, at the office, on the go, on every device you've got. It is cost-effective (in fact, for many of you it's free), and it can seriously improve your chances of staying safe online. The open source part is, I think, really important. It's a personal belief, but I've said many times I don't trust any encryption software that's not open source, and I want them to use open source tools that are well known, time-tested, and vetted by security experts. That's what Bitwarden does. It's using open source crypto and it's using best practices. For instance, unlike many other password managers, it doesn't limit you to PBKDF2, the password-based key derivation function, to hash your master password. I mean, you can use it, but it offers alternatives, thanks to being open source. It was one of our listeners, Quexten, who wrote two implementations for Bitwarden of much better key derivation functions: one is Argon2, one is scrypt. After consultation, after approval, after looking at the code, Bitwarden said, yep, we're going to offer Argon2. This all happened within a matter of a month or two of you talking about it, and now Argon2 is available. I immediately switched over to it. Just one more way that Bitwarden is a better solution.

They just added a new feature I am loving; I use it on all my browsers. It's called inline autofill, and now it's so easy. When you get to a website, you click in the password field or the login field and you get a dropdown that shows you the passwords you've stored for that site. You click it, it fills in your username and password, and you're done. Yeah, it's so convenient. But I want to tell you about it because you have to turn it on. It is a new feature, so if you're an existing user, you have to go to the settings menu in the browser extension and select Autofill, and then in that box you'll have some choices; you want to choose "Show autofill menu on form fields." I now can't live without it. To be honest with you, it just makes it so much easier.

It also supports passkeys really well. I turned on passkeys, and instead of having my iPhone or my computer store those passkeys, I use Bitwarden. It's secure, it stores them, and it's everywhere I am. So now my passkeys for GitHub and Google and many other sites actually reside on all of my devices through Bitwarden. That's a real win.

Named by Wired as best for most people and honored by Fast Company as one of the 2023 Brands That Matter in security, Bitwarden is the open source password manager trusted by millions. Now I want to tell you the good part. You can get started with Bitwarden's free trial of a Teams or Enterprise plan or, if you're an individual (in fact, everybody listening should do this), you can set up an account for free as an individual user, forever. This isn't one that's going to step back later and say, oh no, we don't want to. Because they're open source, they can't, so it's free forever.

Unlimited passwords. Passkey support? Yes. YubiKey support? Yes. It does it all. I really love Bitwarden. You will too. bitwarden.com/twit. Look, I know if you listen to the show you're using a password manager. Fine. But when your friends and family say, no, no, it's too complicated, or, oh, it costs too much, tell them about Bitwarden. It's easy to use, and it costs nothing. bitwarden.com/twit. We thank them so much for their support of the good work, the important work, Steve's doing at Security Now. Thank you, Bitwarden. Back to you, Steve.

0:33:03 - Steve Gibson

Okay. So the following very interesting research was originally slated to be this week's major discussion topic, but after I spent some time looking into the recently revealed GhostRace problem, I wanted to talk about that instead, since it brings in some very cool fundamental computer science about the problem of race conditions, which, interestingly, in our 20 years of this podcast (well, we're in our 20th year) we've never touched on. So we're going to resolve that today. But what this team of five guys from ETH Zurich discovered was very interesting, too. Here's what they began asking themselves: when we go to a website that presents us with what has now become the rather generic and GDPR-required cookie permission pop-up, do sites where permission is not explicitly granted, and cookie use is explicitly denied, actually honor that denial? And with that open question on the table, the follow-up question was, can we create an automated process to obtain demographic information about the cookie permission handling of the top 100,000 websites? Well, we already know the answer to the second question. That's yes. They figured out how to automate this data collection, and the results of their research will be presented during the 33rd USENIX Security Symposium, which will take place this coming August 14th through the 16th in Philadelphia. Their paper is titled "Automated Large-Scale Analysis of Cookie Notice Compliance," and for anyone who's interested, I have a link to their PDF research and the presentation pre-pub in the show notes. Perhaps it won't come as any shock that what they found was somewhat disappointing. Did they find that 5% of the 97,090 websites they surveyed did not obey the site visitors' explicit denial of permission to store privacy-invading cookies? No. Was the number 10%? Nope, not that either. How about 15? Nope, still too low, believe it or not.
It's 65.4% of the websites tested that offer a cookie rejection option, so just shy of fully two-thirds. Two-thirds of those websites do not respect users' negative consent, and top-ranked websites are more likely to ignore users' choice, despite having seemingly more compliant cookie notices.

The abstract of their paper says: privacy regulations such as the General Data Protection Regulation (GDPR) require websites to inform EU-based users about non-essential data collection and to request their consent to this practice. Previous studies have documented widespread violations of these regulations. However, these studies provide a limited view of the general compliance picture: they're either restricted to a subset of notice types, detect only simple violations using prescribed patterns, or analyze notices manually. Thus, they're restricted both in their scope and in their ability to analyze violations at scale. We present the first general, automated, large-scale analysis of cookie notice compliance. Our method interacts with cookie notices (in other words, by navigating through their settings). It observes declared processing purposes and available consent options using natural language processing, and compares them to the actual use of cookies. By virtue of the generality and scale of our analysis, we correct for the selection bias present in previous studies focusing on specific content management platforms. We also provide a more general view of the overall compliance picture using a set of 97,000 websites popular in the EU. We report, in particular, that 65.4% of websites offering a cookie rejection option likely collect user data despite explicit negative consent.
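To make the final comparison step described in that abstract a little more concrete, here is a heavily simplified Python sketch. It is not the researchers' code: it assumes a crawler has already clicked the notice's reject option and recorded which cookies were then present, and that the notice's declared purposes have already been mapped to labels (the paper does that mapping with NLP, which this sketch does not attempt). The names `ObservedCookie`, `flag_after_reject`, and the purpose labels are hypothetical. Any cookie still present after a rejection that was not declared strictly necessary, or was never declared at all, is flagged as likely collection despite negative consent.

```python
from dataclasses import dataclass

# Hypothetical purpose label; a real pipeline would derive purposes from the
# notice text, which this sketch takes as already given.
STRICTLY_NECESSARY = "strictly_necessary"


@dataclass(frozen=True)
class ObservedCookie:
    name: str
    domain: str


def flag_after_reject(
    observed: list[ObservedCookie],
    declared_purposes: dict[str, str],
) -> list[ObservedCookie]:
    """Return cookies still present after 'reject' that suggest data collection.

    A cookie is flagged if its declared purpose is anything other than
    strictly necessary, or if the notice never declared it at all.
    """
    flagged = []
    for cookie in observed:
        purpose = declared_purposes.get(cookie.name)  # None means undeclared
        if purpose != STRICTLY_NECESSARY:
            flagged.append(cookie)
    return flagged


if __name__ == "__main__":
    # Made-up example data, for illustration only.
    declared = {"session_id": STRICTLY_NECESSARY, "_ga": "analytics"}
    seen_after_reject = [
        ObservedCookie("session_id", "example.com"),
        ObservedCookie("_ga", "example.com"),
        ObservedCookie("tracker_xyz", "ads.example.net"),
    ]
    for c in flag_after_reject(seen_after_reject, declared):
        print(f"possible violation: {c.name} ({c.domain})")
```

Run as a script with that made-up data, it reports `_ga` and `tracker_xyz` as possible violations and lets the strictly necessary `session_id` through. Treating undeclared cookies as violations loosely mirrors the "undeclared purposes" category the researchers report; the real study's classification is considerably more nuanced than a single label check.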

Okay, so I suppose we should not be surprised to learn that what's hiding behind the curtain is not what we would hope. Two-thirds of companies (and in general, the larger they are, the worse their behavior) are simply blowing off their visitors' explicit requests for privacy. And this is in the EU, where the regulation is present and, you know, would be more likely to be strongly enforced. Except, who would know that the regulation was being ignored, unless you checked, given the presence of these cookie permission banners? But two out of every three require do-nothing clicks. When we say we want you not to do this, it has no actual effect.

The researchers discovered additional unwanted behavior, which they termed dark patterns. Specifically, 32% are missing the notice entirely. 56.7% don't have a reject button, so they display the notice but don't give you the required option to opt out. And then there are those 65.4% that do have the button but ignore it. Then there's 73.4% with implicit consent prior to interaction, meaning they assume you are consenting and, in 73.4% of the cases, do things before giving you the option to not have them do that.

There's also a category called implicit consent after close, which is present in 77.5% of the instances. Undeclared purposes appear in 26.1%, so they don't tell you what's going on. There's interference in two thirds of the cases, 67.7%, and forced action in almost half, 46.5%.

So, bottom line, you know, this is all a mess. What this means is that the true enforcement of our privacy cannot be left to lawmakers and their legislation, nor even to websites. New technology needs to be brought to bear to take this entirely out of the hands of third parties. And I know it seems insane for me to be saying this, but this really is what Google's Privacy Sandbox has been designed to do. And, as with all change, advertisers and websites are going to be kicking and screaming, and they're not going to go down without a fight. But the advertisers are also, reluctantly, currently in the process of upgrading their ad delivery architectures for the Privacy Sandbox, because they know that, with Chrome commanding two-thirds market share, they're going to have to work within this brave new world that's coming soon, you know, and real soon, as in later this year. Google's not messing around this time. They've given everyone years of notice. Change is coming, and I say thank goodness, because it's obvious that just saying, oh, you know, you've got to get permission, isn't enough. Most websites are asking for permission, but it turns out two thirds of them are ignoring it when we say no.

And speaking of the GDPR, which created, you know, this accursed cookie pop-up legislation that currently plagues the Internet: holding to the "there are two sides to every coin" rule, the disclosure requirements that are also part of the GDPR occasionally produce some startling revelations, when conduct that parties would doubtless have much preferred to keep off the radar is required to be seen. Get a load of this one. In this case, we have the new Outlook app for Windows. Thanks to the GDPR, users in Europe who download the Outlook for Windows app will be greeted with a modal pop-up dialog that displays a user agreement and requires its users' consent. So far, so good. The breathtaking aspect of this is that the dialog starts right out stating: "We and 772 third parties process data to: store and/or access information on your device,"

Only 772? Huh. That's how many third parties Microsoft is sharing its users' data with. Our computers are slow. Good Lord. Wow. "...develop and improve products, personalize ads and content, measure ads and content, derive audience insights, obtain precise geolocation data," yes, we know right where you are and we're telling everyone, "and identify users through device scanning." And no, that was not a typo. It says "we and 772 third parties." At least they're honest, I mean. Well, they have no choice, right? In the EU.

You know, I don't know what to think about that. It's mind-boggling. For one thing, it's disappointing that only those in the EU get to see this. US regulators are not forcing the same transparency requirements upon Microsoft, and Microsoft certainly doesn't want to show any more of this than they're forced to. You know, earlier we were talking about the confidentiality of our data and the challenges that presents. Having Microsoft profit from the sale of the contents of its users' email seems annoying enough. At the same time, you know, there's Gmail, and they're doing some of that too. But when there are, by Microsoft's own forced admission, 772 such third parties, who all presumably receive paid access to this data, doesn't it feel as though Microsoft should be paying us for the privilege of access to our private communications, which are apparently so valuable to them? Instead, we get free email. Whoopee.

0:45:47 - Leo Laporte

Wow. Does Google do anything like that with Gmail? It's got to be. No, I've never.

0:45:52 - Steve Gibson

Whoa, whoa. You mean in the EU? That's a good question, whether they've had to start doing that too. Yeah, we should...

0:46:00 - Leo Laporte

I think it's the case, although I'm not a lawyer, that all of this applies only in the EU. Even though all of us in the US and everywhere else in the world see these cookie monster announcements, they're not technically required to show them to us. It's only for you people.

0:46:18 - Steve Gibson

Well, as I understand it, it applies if an EU person visits your website.

0:46:22 - Leo Laporte

No, it's still not liable, because it has to be a company doing... well, I don't know. That's a good question. There was a very interesting blog piece, which I've mentioned on other shows (and of course the guy's not an Internet lawyer either), that said no one has to do this except EU companies. They're the only companies liable for this. And he points out that Amazon doesn't, right? Interesting. You've never seen it on Amazon. You don't see it on a lot of sites, not mine. I don't see cookie pop-ups on Google sites, come to think of it.

0:46:56 - Steve Gibson

GRC doesn't have it, although I'm not collecting any information and using cookies that way.

0:47:00 - Leo Laporte

Well, we do it on TWiT, because there's a whole secondary business of people who go around saying you'd better do this for compliance, pay me and I'll do it for you, otherwise you're going to be in trouble. So we have a lot of privacy stuff on our site. You can't log into our site, but, as every site does, we have cookies, we have loggers, you know. I mean, I do it on my personal website. I say, look, the only cookie I have is whether you like light mode or dark mode. So suck it; if you don't like that, too bad. I mean, that's why this whole thing is so silly.

0:47:39 - Steve Gibson

I just... it's so... Well, it's probably going to go away, because if Google has their way (and Google generally gets their way; you know, they've got the demographic, the market share), they really are terminating third-party cookies.

0:47:55 - Leo Laporte

We've done this story before, because, remember, you talked about Do Not Track, which is in the spec. Everybody has it; nobody honors it. It's coming back? It would be nice to see. Yeah, yeah.

0:48:06 - Steve Gibson

Well, and actually, again, what's happening is that the next-generation technology will prevent tracking by siloing each site. The tracking happens because, traditionally, browsers have had one big cookie jar. As soon as you start creating individual per-domain cookie jars, each domain is welcome to have its own local set of cookies, but they're not visible from any other domain, and that terminates tracking. Unfortunately, that's why we're now seeing the "Oh, please join our email list, join our community, give us your email" prompts. So, basically, first-party cookies are being used to track through the browser. Okay, we have a bit of feedback from our listeners, and then, boy, have we got a fun techie episode. Okay, let's see. A listener tweeted at SGGRC:

"Been hearing you talk about the physical input requirement for security setting modifications," meaning you'd have to push a physical button on the device if you wanted to make a security change, and that keeps somebody in Russia from being able to push that button for you.

This person said: "Just wanted to mention the FRITZ!Box, a common German home router, which has been doing this for a while. Annoying? Yes. Effective? Also yes." So, you know, when we've talked about this previously, I haven't mentioned that many SOHO routers (probably every router, if it's at all recent) have that dedicated WPS hardware button. That is a physical button, for essentially the same reason: it allows a network device to be persistently connected to a router without the need to provide the device with the router's password, just by pressing the button. So, you know, the notion of doing something similar to protect configuration changes makes a lot of sense. But then another listener, Rob Woodruff, tweeted: "I'm listening to the part of SN969 where you're talking about the five security best practices, and it occurs to me that, regarding the requirement for a physical button press, it won't be long before we start seeing cheap Chinese-made, Wi-Fi-enabled IoT button pressers on Amazon. What could possibly go wrong?"

And they exist, by the way. Stacey Higginbotham talked about a little thing that presses a button. You can...

0:50:59 - Leo Laporte

Yeah, it's a Wi-Fi-enabled little finger that goes like this, for lights that you can't change or whatever. So it exists. I don't know if it would work with that WPS-type button, but yeah. Yeah, actually, I think I remember watching that on This Week in Google.

0:51:19 - Steve Gibson

When you guys talked about that. I think it was Stacey who used them. Wow, she liked them. Remember that thing when we were growing up, the little black box that you'd put a penny on, and a hand would reach out and grab the penny? Right, yeah. But my favorite one, though... there are varieties of these.

0:51:38 - Leo Laporte

You flip the switch, the hand comes out and turns the switch off. Yes, yes. Okay.

0:51:46 - Steve Gibson

So the downside to the physical button press requirement, obviously, is its inherent inconvenience. But that's also the source of its security. It would be nice if we could have the security without the inconvenience, but it's unclear how we could go about doing that. But to Rob's point, even if a user chose to employ a third-party remote-control button presser, that would still provide greater security than having no button at all. For one thing, it would be necessary to hack two very different systems, kind of like having multi-factor authentication. But the most interesting observation, I think, is that generic remote attacks against an entire class of devices known to be button-protected would never even be launched in the first place, because it would be known that they could not succeed. So even a router that technically breaks the rules with an automated button presser gains the benefit of what is essentially herd immunity: attackers wouldn't bother attacking that model of router, because it's unattackable thanks to the physical button. Rami Vespami tweeted at SGGRC: "I don't see the benefit if NetSecFish kept quiet." So this person's talking about the premature, well, the disclosure of the vulnerability in the incredible number of old D-Link NAS devices. They write: "This would have been quietly exploited in perpetuity. Instead, now everyone knows to deprecate those devices and the issue has an end in sight. Better to know." Okay, if indeed current D-Link NAS owners could have been notified as a consequence of NetSecFish's disclosure on GitHub, then okay, maybe, but they still would have had to act quickly, since the attacks against these D-Link NAS boxes took less than two weeks to begin. 
But the problem is that nothing about NetSecFish's disclosure on GitHub suggests that even a single end user of these older but still-in-use D-Link NAS devices would ever have received notification. Notification from whom? You know, he posted this on GitHub. That's not notifying all those D-Link NAS end users. Why would any random user know what's been posted in someone's GitHub account? D-Link said, sorry, but those are old devices for which we no longer accept any responsibility. But we know who does know what's been posted to someone's random GitHub account, since it took less than two weeks for the attacks to begin. So I would still argue that it is not better to know, when it's by far predominantly the bad guys who will be paying attention and who will be doing the knowing. And finally, John Moriarty wins the Feedback of the Week award.

He tweeted: "Catching up on last week's Security Now and hearing @SGGRC lament the fans spinning up and resources being swamped by Google's Chrome bloat while it was apparently doing nothing. Perhaps it was busy running and completing ad auctions?" Oh, so yes, indeed, it's quite true that our browsers are going to be much busier once responsibility for ad selection has been turned over to them. Although, you know, our computers are so overpowered now it's ridiculous; using a browser shouldn't even wake the computer up. I don't have any big SpinRite news this week. Everything continues to go well, and I'm working to update GRC's SpinRite pages just enough to hold us while I get email running, and then it's back over to work on SpinRite's documentation. I've been seeing more good reports of SSDs recovering their original performance.

While catching up on Twitter, I did run across a good question about SpinRite from Paul, tweeting as Mason Paul AK. He said: "Hi, Steve. Longtime podcast listener and SpinRite owner; thanks for the many gems of info over the years." (Boy, we've got a good one for this podcast coming up.) "Over the weekend I saw the RAM test in SpinRite 6.1. Please would you give some insight into how long I should let it run to test one gigabyte, and am I wearing it out as writing to an SSD would? Thanks, Paul." I don't think I remembered to respond to the wearing-it-out question. No, DRAM, thank goodness, absolutely does not wear out when it's being read and/or written. Okay, but to his question of how long you should run it, the best answer is really quite frustrating, because the best answer is: the longer the better. Now, that said, I can clarify a couple of points. The total amount of RAM in a system has no bearing on this, since SpinRite is only testing the specific 54 megs that it will be using once the testing is over.

While the testing is underway, a test counter spins upward on the screen and the total elapsed testing time is shown. For each test, it fills 54 megabytes with a random pattern, then comes back to reread and verify that the memory stored it correctly. So the longer the testing runs, the greater the likelihood that it will find a pattern that causes some trouble. The confusion in Paul's question comes from the fact that the new startup RAM test can be interrupted. It doesn't stop by itself, it just keeps going, and eventually the user is going to interrupt it in order to get going with SpinRite. So when should that be? Best practice, if it's feasible, would be for any new machine where SpinRite 6.1 has never been run before to start it up and allow it to just run on that machine overnight, doing nothing but testing its RAM. In the morning, the screen will almost certainly still be happy and still showing zero errors. I mean, that's by far the most likely case. But the screen might have turned red, indicating that one or more verification passes failed.
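The fill-then-verify loop described here can be sketched in a few lines of Python. This is a hypothetical illustration, not SpinRite's code: the function names are invented for the example, a `bytearray` stands in for the 54 MB region (a Python program can't address physical RAM directly), and `random.Random.randbytes` requires Python 3.9 or later. Regenerating the expected bytes from the same seed means the tester never has to keep a second copy of the pattern.

```python
import random

def fill_pattern(buf, seed):
    """Fill the whole buffer with pseudo-random bytes derived from `seed`."""
    buf[:] = random.Random(seed).randbytes(len(buf))

def verify_pattern(buf, seed):
    """Regenerate the same pattern from `seed` and count bytes that differ."""
    expected = random.Random(seed).randbytes(len(buf))
    return sum(1 for got, want in zip(buf, expected) if got != want)

def ram_test(buf, passes, first_seed=1):
    """Run `passes` fill-then-verify rounds, each with a fresh random pattern.
    Returns the total number of mismatched bytes (0 means every pass verified)."""
    errors = 0
    for seed in range(first_seed, first_seed + passes):
        fill_pattern(buf, seed)
        errors += verify_pattern(buf, seed)
    return errors

buf = bytearray(64 * 1024)        # small stand-in for the tested region
print(ram_test(buf, passes=3))    # 0: every pass read back what was written
```

Each additional pass writes a fresh pattern, which is why a longer run gives a marginal memory cell more chances to be caught failing to hold one.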

After I asked the first guy, who was having weird problems that made no sense, to try running MemTest86, the venerable full-system RAM test that's been around forever, it reported errors. So I decided I needed to build that into SpinRite just to be safe. Users do not need to use it at all, but it's there. And several other of SpinRite's early testers were surprised to have SpinRite detect previously unsuspected memory errors on some of their machines. So SpinRite's built-in RAM test is sort of useful all by itself just for that. But once it's been run for a few hours on any given machine, it can just be bypassed quickly from then on, and you don't need to worry about it. Okay, we still have two sponsors to go. Let's do one now, and then I will take a break, because, boy, we're going to need a break in the middle of where we're going next.

1:00:20 - Leo Laporte

I think so. I'm glad to say our show today is brought to you by Vanta, your single platform for continuously monitoring your controls, reporting on your security posture, and streamlining audit readiness. When it comes to ensuring your company has top-notch security practices, things can get complicated very fast. With Vanta, you can automate compliance for in-demand frameworks like SOC 2, ISO 27001, or HIPAA. Even more, Vanta's market-leading trust management platform enables you to unify security program management with a built-in risk register and reporting, and streamline security reviews with AI-powered security questionnaires.

G2 loves Vanta year after year. Check out this review from a chief information officer. Quote: "Vanta's indispensable in achieving and maintaining compliance and best security practices. The platform's automated processes significantly reduce the manual workload associated with compliance tasks." Over 7,000 fast-growing companies like Atlassian, Flo Health, and Quora use Vanta to manage risk and prove security in real time. Watch Vanta's on-demand demo. You'll find it at vanta.com/securitynow. That's V-A-N-T-A dot com slash security now. Learn more at vanta.com/securitynow. We thank Vanta so much for supporting us and the very important work Steve does to keep us all safe, just like Vanta does.

1:01:54 - Steve Gibson

All right, on we go with the show, Steve. Okay, so if anybody is doing brain surgery right now or driving a large truck... Yes, close the skull, put down your tools, pull over.

Yes. The first question to answer is: what's a race condition? A race condition is any situation that might occur in a hardware or software system where outcome uncertainty, or an outright bug, is created when the sequence of closely timed events (in other words, a race among events) determines the effects of those events. For example, in digital electronics it is often the case that the state of a set of data lines is captured when a clock signal occurs. This is typically known as latching the data lines on a clock. But for this to work reliably, the data lines need to be stable and settled when the clocking occurs. If the data lines are still changing state when the clock occurs, or if the clock occurs too soon, a race condition can occur where the data that's latched is incorrect. Here's how Wikipedia defines the term. They write: "A race condition or race hazard is the condition of an electronics, software, or other system where the system's substantive behavior is dependent on the sequence or timing of other uncontrollable events, leading to unexpected or inconsistent results. It becomes a bug when one or more of the possible behaviors is undesirable. The term race condition was already in use by 1954, for example in David A. Huffman's doctoral thesis 'The synthesis of sequential switching circuits.'" Okay, so this has become a major issue with modern software systems, because our machines now have so many things going on at once, where the independent actions of individual threads of execution must be synchronized. Any failure in that synchronization can result in bugs or even serious security vulnerabilities. To understand this fully, we need to switch into computer science mode. 
And, as I said at the top of the show, I often receive feedback from our listeners wishing that I did more of this, but I don't often encounter such a perfect opportunity as we have today.

Back when we were talking about how processors work, during one of our early sets of podcasts on that topic, we talked about the concepts of multithreading and multitasking in a preemptive, multi-threaded environment, multi-threaded meaning multiple threads of execution happening simultaneously. The concept of a thread of execution is an abstraction. The examples I'm going to draw assume a single-core system, but they apply equally to multi-core systems. When a processor is busily executing code, performing some task, we call that a thread of execution, since the processor is threading its way through the system's code, executing one instruction after another.

After a certain amount of time, a hardware interrupt event occurs when a system clock ticks. This interrupts that thread of execution, causing it to be suspended and control to be returned to the operating system. The operating system sees that this thread has been running long enough and that there are other waiting threads, so it decides to give some other thread a turn to run. To do that, it performs something known as a context switch. A thread's context is the entire state of execution at any moment in time: all of the registers the thread is working with and any other thread-specific volatile information. The operating system saves all of that thread context, then reloads another thread's saved context, which is to say everything that had been previously saved from that other thread when it was suspended. The OS then jumps to the point in code where that other thread was rudely interrupted the last time it was preempted, and that other thread resumes running just as though nothing had ever happened. And that's a critical point.

The many threads running in the system are completely oblivious to all of this going on above them. As far as they're concerned, they're just running and running and running without interruption, as if each of them were all alone in the system. The fact that there's an overlord frantically switching among them is unseen by the threads. I just opened Task Manager on my Windows 10 machine. It shows that there are 2,836 individual threads that have been created and are in some state of execution right at this moment. That's a busy system.
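To make the context switch concrete, here is a toy model in Python, assuming generators as stand-ins for threads. It's a simplification: "preemption" happens at each `yield` rather than on a real timer interrupt, and a suspended generator's frame plays the role of the saved registers and instruction pointer. The names `worker` and `run_round_robin` are made up for the example. What it shows is the point above: each thread computes exactly what it would have computed running alone.

```python
from collections import deque

def worker(name, steps, trace):
    """A toy 'thread'. Each `yield` marks an instruction boundary where the
    scheduler may preempt it."""
    total = 0                        # a 'register' private to this thread
    for i in range(steps):
        total += i                   # one 'instruction' of useful work
        trace.append(name)           # record who used the CPU for this step
        yield
    return total

def run_round_robin(threads, time_slice):
    """Toy preemptive scheduler. Each thread gets `time_slice` steps, then the
    next ready thread runs. A suspended generator keeps its own locals and
    resume point, standing in for the saved thread context."""
    ready = deque(threads.items())
    results = {}
    while ready:
        name, thread = ready.popleft()       # 'restore' this thread's context
        try:
            for _ in range(time_slice):
                next(thread)
        except StopIteration as finished:
            results[name] = finished.value   # the thread ran to completion
            continue
        ready.append((name, thread))         # time's up: 'save' it and requeue
    return results

trace = []
results = run_round_robin(
    {"A": worker("A", 5, trace), "B": worker("B", 5, trace)}, time_slice=2)
print("".join(trace))   # AABBAABBAB
print(results)          # {'A': 10, 'B': 10}
```

The trace shows the two threads being switched every two steps, yet both return 10, the same sum of 0 through 4 each would compute if it had the CPU to itself.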

Okay, so now let's dive deeper into a very cool and very important coding technology. The reason for this dive will immediately become clear once we look at the vulnerabilities that the researchers from VU Amsterdam and IBM have uncovered in all contemporary processor architectures, not just Intel or AMD this time, but also ARM and RISC-V: any architecture that incorporates, get ready for it, speculative execution technology, as all modern processor architectures do. Okay, imagine that two different threads running in the same system share access to some object. Anyone who's been around computing for long will have heard the term object-oriented programming. An object is another abstraction that can mean different things in different contexts, but what's important is that it's a way of thinking about and conceptually organizing complex systems that often have many moving parts. So when I use the term object, I'm just referring to something as a unit. It might be a single word in memory, or it might be a large allocation of memory, or it could be a network interface, whatever.

In the examples we'll be using, our object of interest is just a word of RAM that stores a count of events. So this object is a simple counter, and in our system various threads might, among other things, have the task of incrementing this counter from time to time. So let's closely examine two of those threads. Imagine that the first thread decides it wishes to increment the count. So it reads the counter's current value from memory into a register. It might examine it to make sure that it has not hit some maximum count value. Then it increments the value in the register and stores it back out into the counter's memory. No problem; the shared value in memory was incremented. But remember that in our hypothetical system there are at least two separate threads that might be able to do this.

So now imagine a different scenario. Just like the first time, the first thread reads the current counter value from main memory into one of its registers. It examines its value and sees that it's okay to increment the count. But just at that instant, the system's thread interrupt occurs, and that first thread's operation is paused to give other threads the opportunity to run. And, as it happens, the other thread that may also increment that counter decides that it wishes to do so. So it reads that shared value from memory, examines it, increments its register, and saves it back out to memory. And, as luck would have it, for whatever reason, while that second thread is still running, it does that four more times. So the counter in memory was counted up a total of five times. Then, after a while, that second thread's time is up, and the operating system suspends it to give other threads a chance to run.

Eventually, the operating system resumes our first thread from the exact point where it left off. Remember that before it was paused by the operating system, it had already read the counter's value from memory, examined it, and decided that it should be incremented. So it increments the value in its register and stores it back out to memory, just as it did the first time. But look what just happened. The five counter increments performed by the second thread, which shares access to the counter with the first thread, were all lost. The first thread had read the counter's value into a register. Then, while it was suspended by the operating system, the second thread came along and incremented that shared storage five times. But when the first thread was resumed, it incremented its stale copy of the old value and stored that back out, thus overwriting the changes made by the second thread. This is a classic and clear example of a race condition in software. It occurred because of the one-in-a-million chance that the first thread would be preempted at the exact instant between instructions when it had made a copy of some shared data that another thread might also modify, and it hadn't yet written that copy back to the shared memory location.
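This lost-update scenario can be replayed deterministically with a small Python simulation. It's a sketch under stated assumptions: each `yield` marks an instruction boundary where the thread might be preempted, a dict stands in for shared memory, and `increment_counter`, `run_to_completion`, and `first_thread_preempted` are hypothetical names. Because the interleaving is scripted rather than left to a real scheduler, the race happens every time instead of once in a million.

```python
MAX_COUNT = 1000

def increment_counter(mem):
    """Check-then-increment, split into the separate steps a CPU executes.
    Each `yield` is an instruction boundary where preemption can happen."""
    reg = mem["counter"]          # 1. load the shared counter into a register
    yield
    if reg >= MAX_COUNT:          # 2. examine it against the maximum
        return
    yield
    reg += 1                      # 3. increment the private register copy
    yield
    mem["counter"] = reg          # 4. store the register back to memory

def run_to_completion(thread):
    """Let a thread execute all of its remaining steps without interruption."""
    for _ in thread:
        pass

def first_thread_preempted(second_thread_increments=5):
    mem = {"counter": 0}
    first = increment_counter(mem)
    next(first)                   # first thread loads the counter (0)...
    next(first)                   # ...and decides it may increment it
    # Timer interrupt: the first thread is suspended holding a stale copy.
    for _ in range(second_thread_increments):
        run_to_completion(increment_counter(mem))   # second thread runs
    # The first thread resumes exactly where it left off.
    run_to_completion(first)      # increments its stale copy and stores it
    return mem["counter"]

print(first_thread_preempted())   # 1, even though six increments were performed
```

Run back to back with no interruption, the same six increments would leave the counter at 6; the only difference is where the first thread happened to be paused.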

The picture I painted was deliberately clear because I wanted to highlight the problem. But in the real world it doesn't happen that way, and these sorts of race condition problems are so easy to miss. Imagine that two different programmers working on the same project were each responsible for their own piece of code, code that might also choose to increment that counter. This would introduce an incredibly subtle and difficult-to-find bug. Normally everything would work perfectly; the system's tested, everything's great. But then, every once in a blue moon, the code, which looks perfect, would not do what it was supposed to do, and the nature of the bug is so subtle that coders could stare at their code endlessly without ever realizing what was going on. Last Tuesday was Patch Tuesday, and Microsoft delighted us all by repairing another 149 bugs, including two that created vulnerabilities which were under active exploitation at the time. Microsoft's software does not appear to be in any danger of running out of bugs to fix, and race conditions are one of the major reasons for that. How many times have we talked about so-called use-after-free bugs, where malicious code retains a pointer to some memory that was released back to the system and is then able to use that pointer for malicious purposes? More often than not, those are deliberately leveraged race condition bugs.

Okay, so now the question is, as computer science people, how do we solve this race condition problem? The answer is both tricky and gratifyingly elegant. We first introduce the concept of ownership, where an object can be owned by only one thread at a time, and only the current owner of an object has permission to modify its value. In the example I've painted, the race condition occurred because two threads were both attempting to alter the same object, the shared counter, at the same time. If either thread were forced to obtain exclusive ownership of the counter until it was finished with it, the competing thread might need to wait until the other thread had released its ownership, but this would completely eliminate the race condition bug. Okay, so at the code level, how do we handle ownership?

We could observe that modern operating systems typically provide a group of operations known as synchronization primitives. They're called this because they can be used to synchronize the execution of multiple threads that are competing for access to shared resources. The primitive that would be used to solve a problem like this one is known as a mutex, which is short for mutual exclusion, meaning that threads are mutually excluded from having simultaneous access to a shared object. So, using an operating system's built-in mutex function, a thread would ask the operating system to give it exclusive ownership of an object and be willing to wait for it to become available. If the object were not currently owned, then it would become owned by that first requesting thread, and that owning thread could do whatever it wished with the object. Once it was finished, it would release its ownership, thus making the object available to another thread that may have been waiting for it.
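Python's standard library offers exactly this kind of primitive: `threading.Lock` behaves as a mutex, and using it in a `with` block acquires ownership on entry and releases it on exit. The sketch below protects the check-then-increment from the example with one; the `BoundedCounter` class and `run_threads` helper are illustrative names, not anything from the show.

```python
import threading

class BoundedCounter:
    """A shared event counter whose check-then-increment is guarded by a mutex."""

    def __init__(self, maximum):
        self.value = 0
        self.maximum = maximum
        self._lock = threading.Lock()      # the mutex that represents ownership

    def increment(self):
        with self._lock:                   # acquire ownership, waiting if another thread has it
            if self.value < self.maximum:  # examine the current value...
                self.value += 1            # ...then increment it while we still own it
        # leaving the `with` block releases ownership to any waiting thread

def run_threads(counter, num_threads, increments_each):
    """Start several threads that all increment the same counter, then wait."""
    def work():
        for _ in range(increments_each):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value

print(run_threads(BoundedCounter(1_000_000), 4, 10_000))  # 40000
print(run_threads(BoundedCounter(25_000), 4, 10_000))     # 25000
```

Because the examine and the increment happen while the lock is held, no thread can act on a stale value: the count is exact, and the maximum is never exceeded even though 40,000 increments are attempted against a limit of 25,000.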

But I said that what actually happens is both tricky and gratifyingly elegant, and we haven't seen that part yet. How is a mutex actually implemented? Our threads are living in an environment where anything and everything they are doing might be preempted at any time without any warning. This literally happens in between instructions, between one instruction and the next, and in between any two instructions, anything else might have transpired. If you think about that for a moment, you'll realize that this means that acquiring ownership of an object cannot require the use of multiple instructions that might be interrupted by the operating system at any point. What we need is a single, indivisible instruction that allows our threads to attempt to acquire ownership of a shared object while also determining whether they succeeded, and that has to happen all at once. It is so cool that an instruction as simple as exchange can provide this entire service.

The exchange instruction does what its name suggests: it exchanges the contents of two things. These might be two registers whose contents are exchanged, or it might be a location in memory whose contents are exchanged with a register. We already have a counter, the ownership of which is the entire issue. So we create another variable in memory to manage that counter's ownership, to represent whether or not the counter is currently owned by any thread. We don't need to care which thread owns the counter, because if any thread does, no other thread can touch it. All they can do is wait for the object's ownership to be released. So we just need a binary value, you know, a one or a zero. If the value of this counter ownership variable is zero, then the counter is not owned by any thread at the moment, and if the counter ownership variable is not zero, if it's a one, then some thread currently owns the counter.
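Here is the exchange instruction's behavior modeled in Python. It's only a model: Python has no user-level exchange instruction, so `exchange` here is a hypothetical function that this simulation simply treats as indivisible. On real hardware the CPU provides that guarantee; on x86, for instance, `XCHG` with a memory operand is automatically locked. The dict keys `counter` and `counter_owner` are made up for the illustration.

```python
UNOWNED, OWNED = 0, 1

def exchange(memory, address, register_value):
    """Model of an exchange (swap) instruction: put `register_value` into
    memory[address] and return what was there before, as one step.
    This simulation just assumes nothing runs in between; on real hardware
    the CPU guarantees it."""
    previous = memory[address]
    memory[address] = register_value
    return previous

memory = {"counter": 0, "counter_owner": UNOWNED}

first = exchange(memory, "counter_owner", OWNED)   # first thread swaps in a 1
second = exchange(memory, "counter_owner", OWNED)  # second thread does the same

print(first)   # 0: the counter was free, and the first thread now owns it
print(second)  # 1: already owned; swapping a 1 over a 1 changed nothing
```

One swap both claims the flag and reports what it held before, which is the combination of "try to acquire" and "did I succeed?" that has to happen all at once.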

Okay, so how do we work with this and the exchange instruction? When a thread wants to take ownership of the counter, it places a one in a register and exchanges that register's value with the current value of the ownership variable in memory. If the value it gets back in its register is a zero, then that means that until the exchange was made, the counter was not owned by any thread, and since this exchange simultaneously placed a one into the ownership variable, at the same time, with the same instruction, our thread is now the exclusive owner of the counter and is free to do anything it wishes without fear of any collision.

If another thread comes along and wants to take ownership of the counter, it also places a one in a register and exchanges that with the counter's ownership variable. But since the counter is still owned by the first thread, the second thread will get a one back in its exchanged register. That tells it that the counter was already owned by some other thread and that it's going to need to wait and try again later. And notice that, since what it swapped into the ownership variable was also a one, this exchange instruction did not change the ownership of the counter. It was owned by someone else before, and it still is. What the exchange instruction did in this instance was to allow a thread to query the ownership of the counter. If it was not previously owned, it now would be, by that query; but if it was previously owned by some other thread, it still would be.

And finally, once the thread that had first obtained exclusive ownership of the counter is finished with the counter and is ready to release its ownership, it simply stores a zero into the counter's ownership variable in memory, thus marking the counter as currently unowned. This means that the next thread to come along and swap a one into the ownership variable will get a zero back and know that it now owns the counter.
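Putting the pieces together, this sketch simulates the acquire, work, and release protocol under the same adversarial timing as the scenario in which the first thread is preempted right after checking the counter, except that now it is preempted while it owns the counter. It assumes a toy model: generators stand in for threads, each `yield` is an instruction boundary where preemption can occur, and `exchange` is a simulated swap that the model treats as one indivisible step. All names are hypothetical. The waiting thread simply spins, retrying the swap each time it gets the CPU; real mutexes usually put a waiting thread to sleep instead.

```python
from collections import deque

UNOWNED, OWNED = 0, 1
MAX_COUNT = 1000

def exchange(mem, address, value):
    """Simulated exchange instruction: swap `value` into mem[address] and
    return the old contents. This model treats it as one indivisible step."""
    previous = mem[address]
    mem[address] = value
    return previous

def locked_increment(mem):
    """Check-then-increment guarded by an exchange-based ownership flag.
    Each `yield` is an instruction boundary where the thread can be preempted."""
    while exchange(mem, "owner", OWNED) != UNOWNED:  # got a 1 back: someone owns it
        yield                                        # wait, then retry the swap
    yield                                            # got a 0 back: we own the counter
    reg = mem["counter"]              # load
    yield
    if reg < MAX_COUNT:               # examine
        yield
        reg += 1                      # increment the register copy
        yield
        mem["counter"] = reg          # store
    yield
    mem["owner"] = UNOWNED            # release: store a 0 into the ownership flag

def increments(mem, n):
    """A thread that performs `n` protected increments in a row."""
    for _ in range(n):
        yield from locked_increment(mem)

def run_round_robin(threads, time_slice):
    """Toy scheduler: give each thread `time_slice` steps, then switch."""
    ready = deque(threads)
    while ready:
        thread = ready.popleft()
        try:
            for _ in range(time_slice):
                next(thread)
        except StopIteration:
            continue                  # finished: don't requeue
        ready.append(thread)

def run_demo(time_slice):
    mem = {"counter": 0, "owner": UNOWNED}
    run_round_robin([increments(mem, 1), increments(mem, 5)], time_slice)
    return mem

print(run_demo(3))   # {'counter': 6, 'owner': 0}
```

With a time slice of 3, the first thread is preempted just after its check while holding ownership; the second thread's swaps keep returning 1, so it spins harmlessly until the first thread stores its result and releases. All six increments survive, and the same holds for any time slice.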

We call the exchange instruction, when it's used like this, an atomic operation, because like an atom, it is indivisible. With that single instruction, we both acquire ownership if the object was free, while also obtaining the object's previous ownership status. Okay, I suppose I'm weird, but this is why I love coding so much. The holistic way of viewing this is to appreciate that only atomic operations are safe in a preemptively multi-threaded environment. In our example, if the counter in question only needed to be incremented by multiple threads and that incrementation could be performed by a single, indivisible instruction, then that would have been thread safe, which is the term used. And there are instructions that increment memory in a single instruction.
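A sketch, in C11, of the difference between a multi-step increment and a single indivisible one. `lost_update_demo` replays by hand one interleaving a preemptive scheduler could produce when two threads each do a read, add, write; `atomic_increment` uses `atomic_fetch_add`, which compilers typically turn into a single LOCK-prefixed instruction on x86. Both function names are hypothetical.

```c
#include <stdatomic.h>

/* Replays, by hand, one bad interleaving of two threads each doing
   counter++ as read / add / write. Thread B is switched in after
   thread A's read but before A's write. */
static int lost_update_demo(void) {
    int counter = 0;
    int a = counter;   /* thread A reads 0, then is preempted   */
    int b = counter;   /* thread B reads 0                       */
    counter = b + 1;   /* thread B writes 1                      */
    counter = a + 1;   /* thread A resumes and writes 1 as well  */
    return counter;    /* two increments, but the result is 1    */
}

/* atomic_fetch_add does the read-modify-write as one indivisible
   operation, so no other thread can observe or interrupt it halfway.
   It returns the value the counter held before the increment. */
static atomic_int shared_counter = 0;

static int atomic_increment(void) {
    return atomic_fetch_add(&shared_counter, 1);
}
```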

But in our example, the threads needed to examine the current value of the counter to make a decision about whether it had hit its maximum value yet, and only then increment it. That required multiple instructions and was not thread safe. So what do we do? We protect a collection of non-thread-safe operations with a thread-safe operation. We create the abstraction of ownership and use an exchange instruction, which, being one instruction, is thread safe, to protect a collection of operations that are not thread safe. And this brings us to GhostRace and the question: what would it mean if it were discovered that these critical, supposedly atomic and indivisible synchronization operations were not, after all, guaranteed to do what we thought? And, Leo, let's tell our listeners about our last sponsor, and then we're going to answer that question, because the bottom just fell out of computer security.
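Here is a minimal sketch of that pattern, assuming C11 atomics: a bounded counter whose check-then-increment sequence (several instructions) is protected by an ownership flag taken with a single `atomic_exchange`. `COUNTER_MAX`, `counter_owner` and `bounded_increment` are hypothetical names for illustration.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

#define COUNTER_MAX 3   /* hypothetical maximum, for illustration */

static atomic_int counter_owner = 0;  /* 0 = unowned, 1 = owned */
static int counter = 0;               /* the shared, non-atomic counter */

/* Check-then-increment takes several instructions, so on its own it is
   not thread safe: two threads could both see counter == COUNTER_MAX - 1
   and both increment it past the limit. Taking ownership with one atomic
   exchange first makes the whole sequence safe. */
static bool bounded_increment(void) {
    while (atomic_exchange(&counter_owner, 1) != 0) {
        /* owned by another thread: wait and try again */
    }
    /* --- critical region: only the owner gets here --- */
    bool incremented = false;
    if (counter < COUNTER_MAX) {
        counter = counter + 1;
        incremented = true;
    }
    assert(counter <= COUNTER_MAX);
    /* --- end of critical region --- */
    atomic_store(&counter_owner, 0);   /* release ownership */
    return incremented;
}
```

The atomic exchange is the only step that has to be indivisible; everything between taking and releasing ownership can be ordinary, multi-instruction code.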

1:25:31 - Leo Laporte

I guess you could have a race for the lock, right? That would be part of it. That's why you need to make them atomic operations, right? You could have races all the way down.

1:25:45 - Steve Gibson

Well, you can't have a race for the lock or for the ownership, because actually only one instruction is executing at any time. It feels like multiple things are going on, but actually, as we know, the processor is just switching among threads, so at any given time there's only one thing happening, and if that one thing grabs the lock, if it grabs ownership, then it has it. Right, and that's the elegance of being able to do something in a single instruction, right? If it's multiple instructions, then you've got a problem.

1:26:17 - Leo Laporte

If it's a resource that takes, you know, three cycles to pull, then you have the problem that you could be interrupted. Exactly. Yeah, it's really a fascinating ecosystem that's evolved. And this actually happened even before there were multi-threaded computers, right? I mean, you could have a race condition anytime you have a branch, I guess.

1:26:39 - Steve Gibson

Well, anytime you have time sharing. Basically, it's a matter of contention. Whenever there are multiple things contending for a single resource, they're all free to read it at once. Right, everybody can read it. But if anybody modifies it, then you're in trouble. Right?

1:27:01 - Leo Laporte

Fascinating. We're going to get to the big race in just a bit, but first a word from One Big Think. Most midsize, high-growth organizations... look, you've got a job. You've got to focus on what you do, your core offerings. You don't have the time, the volume of work, or the resources to keep a full-time privacy and AI team busy. We don't. A lot of small and mid-sized companies couldn't. And then there's also the issue of attracting and affording top talent. You're competing against Google and Facebook and Microsoft. It's tough. There's a solution: One Big Think. Privacy and AI compliance are here to stay. This is just the world we live in. Table stakes. Regulations around the world are in constant flux.

It's pretty clear. New regulations are coming out every day. Organizations are forced to embrace concepts like privacy by design, transparency, purpose limitation (have you heard these?), data minimization and data subject rights. We talk about that all the time. With One Big Think's services, organizations can gain the capacity and abilities of a DPO, your very own data protection officer, and an AI expert, giving you all of the above at a fraction of the cost, while maintaining independence requirements.

A DPO is an enterprise security leadership role responsible for overseeing data protection strategy, compliance and implementation to ensure compliance with GDPR and CCPA and CPRA and so on. A DPO's role could include informing and advising the company and employees of their data protection obligations and compliance requirements. Obviously, that stat you told us about earlier, Steve, from ETH shows a lot of companies need some help doing that right, on complying. Your DPO also serves as the primary point of contact between the company and the relevant supervisory authorities. Actually, that's just a fraction of what the DPO does. So you know you need one. Can you afford one? Well, there's a great way to give your organization sustainable privacy and AI compliance. Go to 1bigthink.com. That's the number one, B-I-G-T-H-I-N-K dot com. 1bigthink.com. They're here to help you solve these AI and privacy compliance problems. 1bigthink.com. We thank them so much for supporting Steve and the work he does. Now, back to the big race. Okay.

1:29:41 - Steve Gibson

So for their paper's abstract, the researchers wrote: Race conditions arise when multiple threads attempt to access a shared resource without proper synchronization, often leading to vulnerabilities such as concurrent use-after-free. To mitigate their occurrence, operating systems rely on synchronization primitives such as mutexes, spinlocks, etc. In this paper we present GhostRace, the first security analysis of these primitives on speculatively executed code paths. Our key finding is that all of the common synchronization primitives can be microarchitecturally bypassed on speculative paths, turning all architecturally race-free critical regions into speculative race conditions, or SRCs. To study the severity of SRCs, these speculative race conditions, they wrote, we focus on speculative concurrent use-after-free and uncover 1,283 potentially exploitable instances in the Linux kernel. Moreover, we demonstrate that speculative concurrent use-after-free (which they abbreviate SCUAF) information disclosure attacks against the kernel are not only practical, but that their reliability can closely match that of traditional Spectre attacks, with our proof-of-concept leaking kernel memory at 12 kilobytes per second. Crucially, we develop a new technique to create an unbounded race window accommodating an arbitrary number of speculative concurrent use-after-free invocations required by an end-to-end attack in a single race window. To address the new attack surface, we propose a generic SRC mitigation (and we'll see how expensive that is in a minute) to harden all the affected synchronization primitives on Linux. Our mitigation requires minimal kernel changes but incurs around a 5% geometric mean performance overhead on LMbench.

And then they finish their abstract by quoting Linus Torvalds on the topic of speculative race conditions, saying, quote, "There's security and then there's just being ridiculous." Okay, well, it may not be so ridiculous after all. What this comes down to is a mistake that's been made in the implementation of current operating system synchronization primitives. In an environment where processors are speculating about their own future, somewhere in their implementations is a conditional branch that's responsible for making the synchronization decision. And in speculatively executing processors it's possible to deliberately mistrain the processor's branch predictor to effectively neuter the synchronization primitive, as they say in their paper, to turn it into a no-op, which is the programmer shorthand for no operation. And if that can be done, it's possible to wreak havoc. Here's what they explained. They said: Since the discovery of Spectre, security researchers have been scrambling to locate all the exploitable snippets, or gadgets, in victim software. Particularly insidious is the first Spectre variant exploiting conditional branch misprediction, since any victim code path guarded by a source-level if statement may result in a gadget.

To identify practical Spectre version 1 gadgets, previous research has focused on speculative memory safety vulnerabilities such as use-after-free and type confusion. However, much less attention has been devoted to other classes of normally architectural software bugs, such as concurrency bugs. To avoid, or at least reduce, concurrency bugs, modern operating systems allow threads to safely access shared memory by means of synchronization primitives such as mutexes and spinlocks. In the absence of such primitives, for example due to a software bug, critical regions would not be properly guarded to enforce mutual exclusion, and race conditions would arise. While much prior work has focused on characterizing and facilitating the architectural exploitation of race conditions, very little is known about their prevalence on transiently executed code paths. To shed light on the matter, in this paper we ask the following research questions: First, how do synchronization primitives behave during speculative execution? And second, what are the security implications for modern operating systems? To answer these questions, we analyzed the implementation of common synchronization primitives in the Linux kernel. Our key finding is that all the common primitives lack explicit serialization and guard the critical region with a conditional branch. As a result, in an adversarial speculative execution environment, i.e., with a Spectre attacker mistraining the conditional branch, these primitives essentially behave like a no-op. The security implications are significant, as an attacker can speculatively execute all the critical regions in victim software with no synchronization.

Building on these findings, we present GhostRace, the first systematic analysis of speculative race conditions, a new class of speculative execution vulnerabilities affecting all common synchronization primitives. SRCs are pervasive and turn arbitrary, architecturally race-free code into race conditions exploitable on a speculative path, in fact, one originating from the synchronization primitive's conditional branch itself. While the effects of SRCs are not visible at the architectural level (for example, no crashes or deadlocks) due to the transient nature of speculative execution, a Spectre attacker can still observe their microarchitectural effects via side channels. As a result, any SRC breaking security invariants can ultimately lead to Spectre gadgets disclosing victim data to the attacker. Okay, so essentially, the specter of Spectre strikes again.
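To make "architecturally race-free" concrete, here is a simplified, hypothetical C11 sketch of the kind of code pattern GhostRace targets: a lock-protected pointer that one thread frees and another thread uses. It is far simpler than the Linux kernel's real primitives, and all names are made up for illustration. Run normally it never touches freed memory; the comments mark where a mistrained branch would let the use run speculatively anyway.

```c
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical object shared between two threads. */
struct object { int secret; };

static atomic_int obj_lock = 0;            /* 0 = free, 1 = held */
static struct object *shared_obj = NULL;   /* guarded by obj_lock */

static void obj_lock_acquire(void) {
    /* The loop condition is the conditional branch that decides
       whether the caller may enter the critical region. */
    while (atomic_exchange(&obj_lock, 1) != 0) { }
}

static void obj_lock_release(void) { atomic_store(&obj_lock, 0); }

static void create_object(int secret) {
    struct object *o = malloc(sizeof *o);
    if (o == NULL) abort();
    o->secret = secret;
    obj_lock_acquire();
    shared_obj = o;
    obj_lock_release();
}

/* Thread A: free the object and clear the pointer, all under the lock. */
static void destroy_object(void) {
    obj_lock_acquire();
    free(shared_obj);
    shared_obj = NULL;
    obj_lock_release();
}

/* Thread B: read through the pointer, also under the lock.
   Architecturally this can never see freed memory. But if an attacker
   has trained the branch in obj_lock_acquire() to predict "acquired",
   the CPU may speculatively run this body while thread A holds the
   lock, say between free() and the NULL store, so the load of
   shared_obj->secret touches freed memory. The result is discarded
   once the misprediction is caught, but cache side effects can remain
   for a side channel to measure. */
static int use_object(void) {
    obj_lock_acquire();
    int value = (shared_obj != NULL) ? shared_obj->secret : -1;
    obj_lock_release();
    return value;
}
```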

Another significant exploitation of speculative execution has arisen. And need we echo once again Bruce Schneier's famous observation about attacks never getting worse and only ever getting better? Under the paper's mitigation section, they write briefly: To mitigate the speculative race condition class of vulnerabilities, we implement and evaluate the simplest, most robust and most generic mitigation, introducing a serializing instruction into every affected synchronization primitive before it grants access to the guarded critical region. By terminating the speculative path, it provides a baseline to evaluate any future mitigations and, as mentioned, mitigates not only the speculative concurrent use-after-free vulnerabilities presented in the paper, but all other potential speculative race condition vulnerabilities. Okay, so essentially what they're saying is that, as with all anti-Spectre mitigations, the solution is to deliberately shut down speculation at the spot where it's causing trouble.
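A sketch of where such a barrier could go in an exchange-based lock, assuming x86 and the compiler intrinsic `_mm_lfence` from `<emmintrin.h>`. Using LFENCE is an assumption on our part: it is the instruction commonly used to stop speculation on x86, but the quoted text only says "a serializing instruction," and this is not the researchers' actual kernel patch. On targets without SSE2 the sketch's barrier is an empty placeholder, and the function names are hypothetical.

```c
#include <stdatomic.h>

#if defined(__SSE2__)
#include <emmintrin.h>   /* _mm_lfence */
/* On x86, LFENCE does not execute until all earlier instructions have
   completed, and later instructions do not start until it completes. */
static inline void speculation_barrier(void) { _mm_lfence(); }
#else
/* Placeholder: other architectures need their own barrier, which
   this sketch does not attempt to supply. */
static inline void speculation_barrier(void) { }
#endif

/* An exchange-based lock (0 = free, 1 = held) with a barrier between
   the lock's conditional branch and the critical region. */
static void hardened_acquire(atomic_int *flag) {
    while (atomic_exchange(flag, 1) != 0) {
        /* held by another thread: spin */
    }
    /* The loop exit above is a conditional branch the predictor could be
       trained to guess "acquired". The barrier cannot execute until that
       branch has actually resolved, so a mispredicted path is squashed
       before any critical-region code runs speculatively. */
    speculation_barrier();
}

static void hardened_release(atomic_int *flag) {
    atomic_store(flag, 0);
}
```

The cost is visible right in the sketch: every acquisition now waits for the pipeline to drain at the barrier, even when no attacker is present.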

In this instance, this requires the insertion of an additional so-called serializing instruction into the code at the point just after the synchronization instruction and before the result of the synchronization instruction is used to inform a branch instruction. This prevents any speculative execution down either path following the branch instruction. The problem with doing that is that speculation exists specifically because it's such a tremendous performance booster, and that means that even just pausing speculation will hurt performance. To determine how much this would hurt, for example, Linux's performance, these guys tweaked Linux's source code to do exactly that: to prevent the processor from speculatively executing down either path following a synchronization primitive's conditional branch. What they found was that the effect can be significant. It introduces a 10.82% overhead when forking a Linux process, 7.53% overhead when deleting an empty 0K file and 11.37% when deleting a 10K file. Those are particularly bad, but overall, as they wrote in their abstract, they measured a 5.13% performance cost from their mitigation. Now, 5.13%, that's huge, and it suggests just how dependent upon synchronization primitives today's modern operating systems have become. For a small change in one very specific piece of code to have that much impact means it's being used all the time.
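For reference, a geometric mean overhead across n benchmarks, where each benchmark's runtime ratio is its time with the mitigation divided by its time without, is computed as below. As a worked example, plugging in only the three particularly bad figures quoted above gives roughly 9.9%. Since the whole-suite figure is 5.13%, the remaining LMbench tests must, on balance, have been hurt less.

$$
\text{overhead}_{\text{geo}} = \Bigl(\prod_{i=1}^{n} r_i\Bigr)^{1/n} - 1,
\qquad r_i = \frac{T_i^{\text{mitigated}}}{T_i^{\text{baseline}}}
$$

$$
(1.1082 \times 1.0753 \times 1.1137)^{1/3} - 1 \approx 1.3271^{1/3} - 1 \approx 0.099
$$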