Security Now 998 transcript

Please be advised this transcript is AI-generated and may not be word for word. Time codes refer to the approximate times in the ad-supported version of the show

0:00:00 - Steve Gibson

This week on Security Now, Apple wants to shorten the life of your SSL certificate. Steve's up in arms about that. We'll talk about a very nice new messenger program that Steve says may even be better than Signal, and then we'll take a look at IPv6. Whatever happened to it? It looks like it's going to be another 20 years. Steve explains why that's okay. It's all coming up next on Security Now. Podcasts you love.

0:00:29 - Leo Laporte

From people you trust. This is TWiT.

0:00:37 - Steve Gibson

This is Security Now with Steve Gibson. Episode 998, recorded Tuesday, October 29th, 2024: The Endless Journey to IPv6. It's time for Security Now, the show where we cover the latest security and privacy news, keep you up to date on the latest hacks, and we get a little sci-fi and health in there as well, because Mr. Steve Gibson is what we call a polymath. He is fascinated by it all.

0:01:09 - Leo Laporte

Hi, Steve. Hello, my friend. Speaking of science fiction,

It's not in the show notes, but I am 40 percent into Exodus, Hamilton's Exodus, and I have to say I'm glad it's long because it's come together. There are so many things. I mean, I could talk to John Salina, I guess, because I know he's on his... Yeah, he's probably finished it by now. He reads... No, he's on his reread already. Oh, wow, he shot through it and he's reading it again, and he said, the second time through, he knows who the people are. Because, I mean, you know, Hamilton doesn't write thin sci-fi, but there are so many really interesting concepts. And there are no spoilers here: faster-than-light travel is never invented, so we never have FTL, but what we have is, well, okay.

Also, this is set like 50,000 years in the future. There are the so-called Remnant Wars that have left behind dead planets and derelict, hugely up-armored technologies, and we've gone so far into the future that we've lost some of the knowledge that was there during the peak wartime. So they're finding this stuff that they don't understand, but they kind of give it power and see what it does. But the other thing that's cool is that one elevated, old, old race created what's known as the Gates of Heaven, and they're gates which draw on a huge source of energy to bring their ships up to 0.9999 light speed, I mean right up to c, but not quite, because, you know, it takes infinite energy to get all the way there. But what it does, of course, is create huge relativistic time compression, so that for the people who are traveling at 0.99999 of c, you know, it was a one-week trip for them. Meanwhile, four generations are gone.

So anyway, again, he did it again. There's a whole nother rich tapestry of really good hard sci-fi, you know, Hamilton-style, and as I said, I'm at 40 percent. You can't even begin to guess what's going to happen. I have no idea what's going to happen, but I do not want it to end because it's just really solid entertainment. Whoops, I have pushed my mute button.

0:04:26 - Steve Gibson

It's like a locomotive, I think. Where it starts slow, maybe the first couple of pages, the wheels spin a little bit. First couple, first third of the book yeah, it was it.

0:04:38 - Leo Laporte

You have to just sort of hold your breath, and you know, you do want to read the early history, because he summarizes the history in a preface.

0:04:47 - Steve Gibson

I listened to that and then it got to the Dramatis Personae and now I'm listening. Yes, it went on for a while.

0:04:53 - Leo Laporte

Oh my God. And the problem is you don't have any. There's no reference point, everybody is defined in their relationships to each other.

0:05:02 - Steve Gibson

So that's the part where the wheels are spinning, but the train is not moving forward much. But I just got past that, so I'm looking forward to that. Oh, let me tell you.

0:05:13 - Leo Laporte

I mean, since I don't listen, I read, I can't relate to that experience, but where we are is really good, I might get the book version of this one. It is just, and again, I wasn't going to start until the number two was ready, right. But when John said, yeah, I already finished it and I'm reading it again, I thought, okay, I'm going to.

0:05:39 - Steve Gibson

We're talking about for those just joining us Peter F Hamilton, one of our favorite sci-fi authors.

0:05:44 - Leo Laporte

He writes this beautiful... The Archimedes Engine, yeah. And it's called Exodus; it's the first of two, it's a duology. Yeah. And oh, wow, okay, so we've got a great episode. Apple, who's a member of the CA/Browser Forum, has proposed that over time we bring maximum certificate life down to 45 days, to which I say, please, no.

Yeah, don't do it. That's pretty quick. The SEC has fined four companies for downplaying the severity of the consequences of the SolarWinds attack on them, which is interesting. Google has added five new features, or will be, a couple are in beta, to Messenger, the Google Messenger app, including inappropriate content warnings, which is interesting because, of course, Apple did that a few years ago. And this brought me to an interesting question, and that is whether AI-driven, local, device-side filtering could be the resolution to the encryption dilemma forever. That is, solve the end-to-end encryption problem. Anyway, we'll talk about that. Also, I tripped over, as a consequence of some news of them relocating, something I'd never been aware of before: a very nice-looking messenger app called Session, which is what you get if you were to marry Signal and onion routing from Tor. It's very interesting. I imagine our listeners are going to be jumping on this. Also, just a quick look at the EU software liability moves.

A couple of other people produced some commentary on that; we talked about that last week. We've got fake North Korean employees actually found to have been installing malware in some blockchain stuff. Also, answering a listener's question about whether he needed SpinRite to speed up an SSD. No, you don't; I'll touch on that. Also, using ChatGPT to review and suggest improvements to code, another thought from a listener.

And then I want to spend some time looking at the Internet's governance that has been trying to move the Internet to IPv6 for, yes, lo, the past 25 years. Yeah, but the Internet just doesn't want to go. Why not? Will it ever? What's happened? The Asia-Pacific Network Registry has a really interesting take on the way the Internet has evolved such that well. First of all, from some technology standpoints, we don't actually have the problem anymore that they were worried about needing IPv6 to solve, and why. Getting to places is no longer about addresses, it's about names. So, oh, and to cap all this off, as I said, this is a great podcast, but this picture of the week OMG it is. I just gave it the caption. There really are no words, but this is one. It's a little cerebral. You got to look at it for a minute and just think, oh, what the hell?

0:09:39 - Steve Gibson

I haven't looked at it yet. It's on my screen, it's ready to be scrolled up and into view. We will do that together in moments. I can't wait. That's always fun. By the way, we should mention, if you want to get the show notes, the easiest thing to do is go to Steve's site, GRC.com. Go to the podcast page, and every podcast has a very nice PDF of show notes, including that image. Or go to GRC.com/email and sign up for his newsletter. That way you can get it automatically.

0:10:12 - Leo Laporte

And Leo, I don't know if you looked at the time stamp on the email that you got, it was yesterday afternoon.

0:10:21 - Steve Gibson

So Steve's wife is making him do this, I'm convinced. Does Laurie say you got to get this done so we can go out to dinner or something?

0:10:31 - Leo Laporte

So by Sunday late morning I had finished the project I had been working on, which is the amalgamation of the e-commerce system and the new emailing system. I didn't have them communicating yet, and they had to so that we didn't have the databases desynchronized, and so I thought, okay, I'm going to start working on the podcast. And, as it turns out, it went well. There was lots of material, and by yesterday afternoon, Monday afternoon, I was done, and so I thought I'm just going to give.

0:11:06 - Steve Gibson

Actually it was 11,717 subscribers got it a day ahead, and Steve's been doing that more and more lately, so it's worth subscribing yeah, you do At the early edition.

0:11:19 - Leo Laporte

Well, and in fact I'm not going to step on it, but one of our listeners mentioned a benefit of that that hadn't occurred to me before. So anyway we got a great podcast and get ready for the picture of the week after you tell us who's supporting this fine work.

0:11:36 - Steve Gibson

Sponsor for this first segment of Security Now, and the name you know well. They've been with us for many years now the Thinkst Canary. It's a honeypot. It's kind of appropriate because one of our very first shows was about honeypots and we've interviewed Bill Cheswick, who created the first honeypot. In fact we did a panel with him in Boston a couple of years ago. He wrote the first honeypot and it was a big, massive effort.

A honeypot is a system that looks like it's valuable but really is just an attractant to hackers, and when they go to the honeypot they either get trapped or you get a notification or something happens. Well, you don't have to be an expert anymore to deploy very, very good honeypots from Thinkst Canary. These are honeypots that can be deployed in minutes. They can impersonate anything from an SSH server to a Windows server, IIS. They can be a SCADA device. Mine's a Synology NAS, and they really go the extra mile to make these impersonations very realistic. They look identical to the real thing. My NAS has a Synology MAC address. You know, the first few digits are correct for Synology. So, you know, a wily hacker looking at this isn't going to say, oh yeah, I could tell that's a phony. They don't look vulnerable, they look valuable.

You can also create lure files with your Thinkst Canary, little PDFs or Excel or DOCX files or whatever. You know, we have some Excel spreadsheets that say things like payroll information, employee addresses. I mean, we're not as blatant as saying Social Security numbers, but you could. The thing is, these aren't real. If a bad guy, somebody inside your network, accesses those lure files or tries to attack your fake internal SSH server, you're going to get notifications, and they will tell you immediately you have a problem. Only real, positive alerts, no false alerts. And you get them any way you want: email, text, they support webhooks, there's an API, syslog, whatever works for you. But it's great because it's a way of knowing that somebody's inside your network.

I'm sure you have excellent perimeter defenses. We all do, I do here. But if somebody penetrates, or maybe you've got a malicious insider in your company looking around, how would you know that they're there? That's the Thinkst Canary. Just choose a profile for your Thinkst Canary device, register it with the hosted console for monitoring and notifications, and then you just sit back and relax. Attackers who breach your network, or malicious insiders, or other adversaries, they can't help but make themselves known. They say, oh, I got to get in that, and immediately you'll get a notification, just the notifications that matter.

This is a vital tool for your security. On average, companies don't know that they've been breached for 91 days. You want to know the minute somebody's in there. That's why you need this incredible honeypot, the Thinkst Canary. Go to canary.tools/twit. C-A-N-A-R-Y dot tools slash twit.

You know, big companies might have hundreds of them spread out all over the globe. In fact, Canaries are in operation on all seven continents, so that tells you something. A smaller company like ours might just have a handful. Let's say you need five. For $7,500 a year, you get five Thinkst Canaries. You get your own hosted console; all the upgrades, all the support, all the maintenance is included. And, by the way, if you use the offer code TWIT, T-W-I-T, in the "how did you hear about us" box (that's not a commentary on you, by the way, that's the name of our network, okay, TWiT; I realize people watching Security Now may not know), put TWIT in there and you'll get 10% off the price for life.

Now here's another thing that will reassure you if you're going, well, I don't know, do these things really work as they say they do? I can vouch for them, but you can always return your Thinkst Canaries. They have a two-month, 60-day money-back guarantee for a full refund, every penny. I have to point out, though, that during all the years TWiT has partnered with Thinkst Canary, all the years we've been doing these ads, that refund guarantee has not yet been claimed. Not once, never. Because once people get this and once they have it, they go, oh, yeah, you have to have this. Visit canary.tools/twit. Enter the code TWIT in the "how'd you hear about us" box. We thank Thinkst Canary for supporting this show and all their users with an excellent security tool that everybody should have.

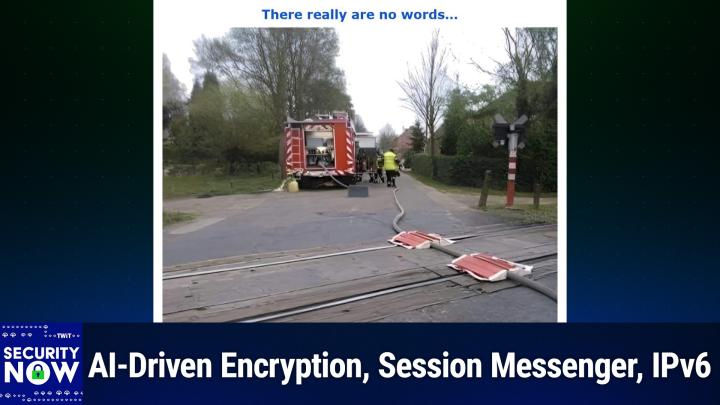

Now I'm going to, how should I do this? Scroll up first, before I show everybody else. I'm going to scroll up. Consider what you see, and the caption is: There really are no words. I see a fire truck. I see a fire hose. Oh, dear. Okay, okay, that's good. I'm showing you the picture now.

0:16:52 - Leo Laporte

Everybody. Steve, do you want to describe it for our audio listeners? So if you were a fire company and you needed to have a hose go across an area where cars would be driving over it, you might, and we've seen this with electrical cords that have to go across the floor, put a protector around it, kind of like a little ramp up and down on either side so that you can roll over it. You won't get stuck on it, you won't squash it, you'll protect it.

So we have that scenario here in today's picture of the week for Security Now number 998, where a fire hose is being protected with a similar sort of little ramps. The problem is they're not being protected from a car's tires rolling over the hose. They're being protected from a train. This is crossing a train track, and anybody, you know, who's thought much about the way trains work knows they have wheels that have flanges on the inside, which are the things that keep the wheels on the track, and so the last thing you want to do is do anything to force those wheels up out of the grooves.

0:18:44 - Steve Gibson

I think in all likelihood, a train would just go right over the top of that and cut it in half.

0:18:49 - Leo Laporte

I don't know.

0:18:52 - Steve Gibson

Oh, you mean slice it, slice it, right. Yeah, because those wheels are sharp.

0:18:56 - Leo Laporte

I hope they're sharp, because you'd much rather have your hose cut than to have the train derailed, which is the alternative scenario here You'd have another emergency to attend to very quickly. It is.

0:19:10 - Steve Gibson

Unbelievable, unbelievable.

0:19:14 - Leo Laporte

To me this looks like maybe like England, I don't know why. I kind of have an English feeling to it, but you know, like we don't have, it looks like there is a crossing gate and the light is over. Oh, I know why. It's aimed away from us and it's on the right side.

0:19:31 - Steve Gibson

Yeah, yeah, yeah, it would be on the left side if you were driving on the left side of the road.

0:19:36 - Leo Laporte

Yeah, so, and it looks like. Do you see water coming out of the back left?

0:19:41 - Steve Gibson

of the. I see that.

0:19:42 - Leo Laporte

Yeah, what is that? Out of the back left of the fire engine. Yeah, we don't know what is going on, but it's not good if any train is gonna be coming down the tracks.

0:19:52 - Steve Gibson

Oh goodness, holy moly. Anyway, one of our better pictures, I think. Just say it with me: what could possibly go wrong?

0:19:59 - Leo Laporte

Actually, that would have been a much better caption. That would have been a perfect caption for this picture. Okay, so, as our longtime listeners know, many years ago we spent many podcasts looking at the fiasco that was, and sadly still is, certificate revocation, noting that the system we had in place using CRLs, certificate revocation lists, was totally broken. And I put GRC's revoked.grc.com server online specifically for the purpose of vividly demonstrating the lie we were being told. It just doesn't work.

At the time, the OCSP solution, Online Certificate Status Protocol, seemed to be the best idea. The idea was that the browser could go online in real time and ask the CA: is this certificate I've just received from the web server still good? The problem was that it created both performance problems, because of this extra need to do a query, and privacy issues, because the CA would know everywhere that users were going, based on their queries back to the CA's servers. So the solution to that was OCSP stapling, where the website's own server would make the OCSP query, thus no privacy concern there, and then, as the term was, staple, meaning, you know, electronically attach, these fresh OCSP results to the certificate that it was serving to the web browser, so the web browser wouldn't have to go out and make a second request. Great solution. But it seems that asking every web server in the world to do that was too high a bar to reach, because while some did, mine was, most weren't. So, despite its promise and partial success, the CA/Browser Forum, which sets the industry's standards, recently decided, and we covered this, I guess, about a month ago, to backtrack and return to the earlier use and formal endorsement of the earlier certificate revocation list system, which would move all of the website certificate checking to the browser. This had the benefit of allowing us to offer a terrific podcast explaining the technology of Bloom filters, which everyone enjoyed, and that technology very cleverly allows the user's browser to locally and very quickly determine the revocation status of any incoming certificates.
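As a rough illustration of the Bloom filter idea mentioned above, here is a minimal sketch of a local revocation lookup. It is not how any browser actually ships its revocation data; the class name, the filter size, the number of hashes, and the serial-number strings are all assumptions made up for this example.

```python
import hashlib


class BloomFilter:
    """Tiny Bloom filter: never a false negative, occasionally a false positive."""

    def __init__(self, size_bits=8192, num_hashes=5):
        # size_bits and num_hashes are illustrative values, not tuned ones.
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item):
        # Derive num_hashes bit positions by salting SHA-256 with an index byte.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


# Hypothetical revoked serial number, added when the list is built.
revoked = BloomFilter()
revoked.add(b"serial:0A1B2C")
```

A lookup such as `b"serial:0A1B2C" in revoked` touches only local memory, which is why the check is fast and leaks nothing to the CA. A "yes" answer can be a false positive, so a real deployment needs some way to confirm or rule out hits.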

Okay, so that's where we are. Now, when you think about it, for a certificate to be valid, two things must be true. First of all, we must be between the certificate's not-valid-before and not-valid-after dates. And second, there must be no other indication that this otherwise valid certificate has nevertheless been revoked for some reason; it doesn't matter why, it's no longer good. So the conjunction of these two requirements means that the certificate revocation lists, which are the things that will tell us if a certificate has been revoked, only need to cover certificates that have not yet expired. Once a certificate expires, it can be safely removed from the next update to the industry's Bloom filter-based CRLs. So to keep the size of those CRLs down, shortening the lives of certificates is the way to do that. Or, you know, if this still doesn't come to pass, because we've been at this for quite a while and we've never gotten any form of revocation that actually works, maybe just shortening is a good thing in general. And this brings us to last week's news of a proposal by Apple, who is a member of the CA/Browser Forum, to bring maximum certificate life all the way down to just 45 days.
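The two validity conditions, and the reason expired certificates can drop out of a revocation list, can be sketched as follows. This is only an illustration under stated assumptions: the function names, the plain set of revoked serials, and the serial-to-expiry dictionary are hypothetical stand-ins, not any real library's API.

```python
from datetime import datetime, timedelta, timezone


def is_certificate_valid(not_before, not_after, serial, revoked_serials, now=None):
    """Valid only if we are inside the date window AND the cert is not revoked."""
    now = now or datetime.now(timezone.utc)
    within_dates = not_before <= now <= not_after
    not_revoked = serial not in revoked_serials
    return within_dates and not_revoked


def prune_expired(revocations, now):
    """Drop revoked certs that have expired anyway.

    `revocations` maps serial -> not_after (a hypothetical layout). An expired
    certificate already fails the date check, so listing it adds nothing.
    """
    return {serial: not_after
            for serial, not_after in revocations.items()
            if not_after >= now}
```

With a shorter maximum lifetime, every entry reaches its not-valid-after date sooner, so `prune_expired` has more to remove at each update and the list stays smaller.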

If this proposal were to be adopted, certificates would have their lives reduced in four steps, starting one year from now and ending in April of 2027. It would go this way: we're currently at 398 days. That comfortable 398 days would be cut nearly in half, to 200 days, one year from now, in September of 2025. Actually, a year from a month ago, right, because we're ending October here in a second. Anyway, then a year later, in September 2026, it would be reduced by half again, from 200 to 100 days, and the final reduction would occur seven months later, in April of 2027, which would see web server certificate maximum lifespans reduced to just 45 days.
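To make the schedule concrete, here is a small sketch that encodes the lifetime reductions listed above and works out roughly how many issuances per year each limit implies. The transcript gives only the month of each step, so the first-of-month dates below are an assumption for illustration, as are the function names.

```python
import math
from datetime import date, timedelta

CURRENT_MAX_DAYS = 398

# (effective date, new maximum lifetime in days).
# Day-of-month is assumed; the source states only the month and year.
PROPOSED_SCHEDULE = [
    (date(2025, 9, 1), 200),
    (date(2026, 9, 1), 100),
    (date(2027, 4, 1), 45),
]


def max_lifetime_days(issued_on):
    """Maximum allowed lifetime for a certificate issued on the given date."""
    limit = CURRENT_MAX_DAYS
    for effective, days in PROPOSED_SCHEDULE:
        if issued_on >= effective:
            limit = days
    return limit


def lifetime_allowed(not_before, not_after):
    """Reject a cert whose two dates are further apart than the limit."""
    return (not_after - not_before).days <= max_lifetime_days(not_before)


def renewals_per_year(lifetime_days):
    """Minimum issuances needed to keep one name covered for 365 days."""
    return math.ceil(365 / lifetime_days)
```

Under these assumptions, `renewals_per_year` goes from 1 at 398 days to 2, 4, and finally 9 at 45 days, which is the practical argument for why manual renewal stops being realistic.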

Okay. Now, the only reason Let's Encrypt's 90-day certificate lifetimes are workable is through automation, right? So Apple must be assuming that, by setting a clear schedule on the plan to decrease certificate lifespans, anyone who has not yet fully automated their server's certificate issuance with the ACME protocol, which is the standard that the industry has adopted for allowing a web server to automatically request a new certificate, anyone who hasn't already done that will be motivated to do so, because the end is coming. Who wants to manually update their certificates every 45 days? Nobody. The problem is that this creates some potential edge-case problems, since it's not only web servers that depend upon TLS certificates.

For example, I have one of personal interest that comes to mind. GRC, as we know, signs its outbound email using a mail server that's manually configured to use the same certificate files that are valid for the GRC.com domain. That's what you have to do to get DKIM working, and then DKIM and SPF together, as we talked about recently, allow you to obtain DMARC certification, and then the world believes that email coming from GRC actually did, because it's signed. Well, it's signed with the same certificate that DigiCert created for me for GRC's web server, because it's from the GRC.com domain. At the moment I only need to update the email server's copy of those certificates annually, so it's manageable to do that through the email server's UI, which is the mechanism it provides for that.

I don't know what would happen if I were to change the content of the files out from under the email server without it knowing, using ACME-style updates. For all I know, it has private copies of the certificates, or it might be holding the files open to improve their speed of access, which would prevent them from being changed. There's no programmatic way to inform the email server that it needs to change its certs, since this has never been a problem or a necessity until now. Remember, once upon a time it was three years, and way back it was 10 years, that we had for certificate life. So, it happens that I'm able to write code, so I could see that I might wind up having to add a new custom service to watch for my web server autonomously changing its certificates, then shut down the email server, update its copies of the certs and restart it. My point is, you know, that's what's known as a royal effing kludge, and it is no way to run the world. And make no mistake, my email server is just a trivial example of the much larger problem on the horizon.
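The kind of custom service described above might look something like the following sketch. It is not Steve's code and says nothing about how his mail server actually behaves. The file paths and the `stop_mail` and `start_mail` callables are hypothetical placeholders for whatever service-control mechanism a given mail server provides.

```python
import hashlib
import shutil
from pathlib import Path


def fingerprint(path):
    """SHA-256 of a file's bytes, used to detect that the cert file changed."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def sync_cert(source, mail_copy, stop_mail, start_mail):
    """Copy a renewed cert to the mail server, bouncing the server around it.

    `stop_mail` and `start_mail` are caller-supplied callables (assumed here).
    Returns True if the copy was replaced, False if it was already current.
    """
    source, mail_copy = Path(source), Path(mail_copy)
    if mail_copy.exists() and fingerprint(source) == fingerprint(mail_copy):
        return False  # nothing changed, leave the mail server alone
    stop_mail()  # release any file handles the server may be holding
    try:
        shutil.copyfile(source, mail_copy)
    finally:
        start_mail()  # always bring mail back, even if the copy failed
    return True
```

A scheduler would call `sync_cert` periodically, or right after each ACME renewal. Even in this small form it shows the cost being described: a brief mail outage at every renewal, plus one more piece of glue code to maintain.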

Think of all the non-ACME-aware or non-ACME-capable, non-web-server appliances we have today that have proliferated in the past decade and which now also need certificates of their own. What do they do? So, you know, perhaps this is the price we pay for progress, but this sort of brought to mind the question of why this should be imposed upon us, and upon me. It's my certificate. It represents my domain of GRC.com. Why is it not also my choice how long that representation should be allowed to endure? Okay, if I'm some big organization like Amazon.com, Bank of America, or PayPal, where a great deal of damage could be done if a certificate got loose, I can see the problem. So such organizations ought to be given the option to shorten their certificates' lives in the interest of their own security, and in fact they can do that today. When I'm creating certificates at DigiCert, I'm prompted for the certificate's duration. 398 days is the current maximum lifetime allowed, but there's no minimum, and DigiCert supports the ACME protocol, so automation for short-lived certificates is available from them.

But why are short-lived certificates going to be imposed upon websites by the CA Browser Forum and the industry's web browsers? And let's get real here as we know, revocation has never worked Never. It's always been a feel-good fantasy, and the world didn't end when we only needed to reissue certificates once every three years with no effective ability to revoke them. Now the industry wants to radically reduce that to every six weeks. How are we not trying to solve a problem that doesn't actually exist while at the same time creating a whole new range of new problems we've never had before?

I'll bet there are myriad other instances, such as with my email server, where super short-lived certificates will not be practical. This sure seems like a mess being created without full consideration of its implications. Do these folks at the CA Browser Forum fail to appreciate that web servers are no longer the only things that require TLS connections and the certificates that authenticate them and provide their privacy? And many of these devices that need certificates for a domain may not be able to run the ACME protocol because they are DV domain validation certs. I dropped my use of EV certificates because that became wasted money once browsers no longer awarded those websites using EV certificates with any special UI treatment. You didn't get a little green glow up there in the UI bar, but I've continued using OV.

Those are organization validation certificates, since they're one notch up from the lowest form of certificate, the domain validation DV cert, which Let's Encrypt uses, because that's all it's doing.

It's just validating: yes, you're in control of that domain. But if we're all forced to automate certificate issuance, I can't see any reason then why everyone won't be pushed down to that lowest common denominator of domain validation certificates, the issuance of which Let's Encrypt has successfully automated. At that point, certificates all become free and today's certificate authorities lose a huge chunk of their recurring business. How is that good for them? And the fact is, simple domain validation provides a lesser level of assurance than organization validation. So how is forcing everyone down to that lowest common denominator good for the overall security of the world? I suppose that Apple, with their entirely closed ecosystem, may see some advantage to this. So fine, they're welcome to have whatever super short-lived certificates they want for their own domains. But more than anything, I'm left wondering why the lifetime of my own domain's certificate, in all of its various applications, web, email and so forth, is not my business and why that's not being left up to me.

0:35:19 - Steve Gibson

So if it were Google saying this, I might worry, because Google has this power to enforce its FACATCA plans all the time, but it's Apple. Who cares? Is there any chance that this is going to become the rule?

0:35:34 - Leo Laporte

Yes. Remember that when we went to 398 days, Apple said they would dishonor any certificate that had a longer life. Because they have Safari, right? Well, exactly, all of their iDevices. The certificate has both a not-valid-before and a not-valid-after date, so if those two dates are further than 398 days apart, Apple just says, sorry, this is an invalid certificate.

0:36:06 - Steve Gibson

They wouldn't unilaterally impose a 45-day limit. They would want the CA/Browser Forum to agree, right?

0:36:13 - Leo Laporte

Yes, and so that's. What's happened is that there's a thread discussing this which is suggesting this timeline for bringing it down to 45 days, and I do not see the logic in that. I see huge downside consequences.

0:36:31 - Steve Gibson

A lot of downside.

0:36:32 - Leo Laporte

And why is it any of their business how long I want my certificate to assert GRC.com for? I will take responsibility for that. It's in an HSM. It is safe. It cannot be stolen. And revocation, maybe it's going to be coming back with Bloom filters. We hope so. If the worst happened, we could still revoke. Like, I won't be able to purchase a trusted certificate from a CA longer than 45 days. That's not a good place.

0:37:11 - Steve Gibson

What is the rationale? Why does Apple want to make it so short?

0:37:14 - Leo Laporte

It can only be so that you are constantly having to reassert your control over the domain and the certificate.

0:37:25 - Steve Gibson

And so for the health of the internet then, yes, and do you see a big problem there?

0:37:30 - Leo Laporte

There's no big problem there. They used to be for three years and everything was just fine. We're still here, right, and we never had revocation. That worked, it wasn't a problem.

0:37:42 - Steve Gibson

So they're solving a problem that doesn't exist with a solution that causes many more problems.

0:37:47 - Leo Laporte

I think they're going to end up... People are just going to say no. I hope so, because we've just come down from three years to one year. And, you know, at the time I said the only good thing I could see about this, Leo, is that every three years I'd forgotten how to do it.

0:38:07 - Steve Gibson

You know there was there was so much time in between.

0:38:10 - Leo Laporte

It was like, you know, I have to, I have to run this through ssl.

I mean, oh yeah, that's when you had to do it with a certain weird command line to get a PFX format and blah, blah, and every three years I'd forgotten. Now every year it's like, oh yeah, okay, I gotta do this again. So it has the advantage of, oh, and you know, of course, how many times have we seen websites where they're like, whoops, our certificate expired, because it was, you know, three years ago. You know, Paul worked here. Well, Paul's no longer here, and he was the guy who did the certificates.

0:38:44 - Steve Gibson

Right, but we still see that. Let's Encrypt has had real success with the scripted re-upping.

0:38:51 - Leo Laporte

Oh my, God, they've taken over the TLS market.

0:38:53 - Steve Gibson

Right.

0:38:54 - Leo Laporte

It's like three-quarters or two-thirds of all certificates because nobody wants to pay. It's like wait, I can get it for free.

0:39:03 - Steve Gibson

It's free and the script runs automatically. You don't even have to think about it and you don't have to worry about Paul anymore, because you just re-up every what is it. 90 days.

0:39:13 - Leo Laporte

It's 90 days for Let's Encrypt.

0:39:15 - Steve Gibson

I don't see any reason, though, to make it half that. That's crazy.

0:39:19 - Leo Laporte

It is. I don't see it either. And remember, you can still get a certificate. Even if you're using Let's Encrypt for your web servers, you could still buy a one-year cert for other things, and so for all the appliances that we have that want to do TLS connections, you can still purchase a longer-lived certificate. And when I was thinking this through, one possibility would be to allow non-web certs to have a longer life, because every certificate does state what the uses of the cert are, so automatable reissuance could have a shorter life. But then it doesn't solve the problem, because if what you're worried about is the certificate being stolen, apparently they're worried about anything with longer than 45 days being anywhere. I just do not understand it. And again, why is it their business? We had three years. We had no problems, except, you know, Paul leaving the company.

0:40:37 - Steve Gibson

Did Paul leave the company?

0:40:41 - Leo Laporte

And we're half an hour in. Leo, let's take a break, and then we'll talk about the SEC levying fines against four companies who lied.

0:40:50 - Steve Gibson

Oh, shame on them. You don't want to do that. How dare they, those lying liars? Our show today brought to you by Experts Exchange, where the truth reigns. Hey, remember that? When I met them, I said, I used to use you guys all the time. Well, we're still here, they said, and we want more people to know about it, especially now when so much of the information on the internet is crap and so much of it is AI generated.

When you have a question about technology, wouldn't it be nice to be in a network of trustworthy, talented tech professionals who can answer your questions, give you advice and industry insights, people actually using the products in your stack, instead of paying for an expensive and, honestly, sometimes not so good enterprise-level tech support? That's what Experts Exchange is all about. It's the tech community for people tired of the AI sellout. Experts Exchange is ready to help carry the fight for the future of human intelligence, because there's a bunch of intelligent humans, experts, if you will, on Experts Exchange. They give you access to professionals in over 400 different fields. I'm talking coding, Microsoft, AWS, DevOps, Cisco, on and on and on. And, unlike maybe those other sites (you say, well, I can ask questions on other sites; I won't name names because I'm going to slime them), unlike those other sites, there's no snark. You know, you go to those other sites, you ask a question, half the time they're going to say, duplicate question, next. The rest of the time they're saying, well, I wouldn't do it that way, here's how I would do it. Or you're a dummy, or they insult you, right? Not at Experts Exchange. Duplicate questions are encouraged. There are no dumb questions.

The contributors in Experts Exchange love tech and they understand that the real reward of their expertise, of deeply knowing how a Cisco router works, for instance, the reward of that is being able to show your expertise, to pass it on, not to snark at somebody but to say, hey, let me help you, to pay it forward. They love graciously answering your questions. One member said, I never had GPT stop and ask me a question before, but that happens on EE (that's what its friends call it, EE) all the time. Experts Exchange is proudly committed to fostering a community where human collaboration is fundamental. Humans, they're experts. I love it. I love it. I mean, really, that's who you want to answer your questions.

Their expert directory is full of experts to help you find what you need. Steve, you'll be glad to know that Rodney Barnhart, a regular Security Now listener, is there. He's a VMware vExpert. Edward Von Billion, who's a Microsoft MVP and an ethical hacker. I was trying to get the pronunciation of Edward's last name. He's got a lot of really great YouTube videos and, of course, he's on Experts Exchange, and he always says, this is Ed, or this is Edward. He never says his last name. So Ed is there.

Cisco design professionals are there. Executive IT directors. That's actually another thing. It's not just technical information. You can get advice on how to run your company. You can get advice on how to handle employees, how to motivate. There are really experts there who love talking about what they do and helping you do it too. And here's something really important. Other platforms, most of the other platforms, betray their contributors by selling their content to train AI models.

Reddit does it, LinkedIn just started doing it. At Experts Exchange, your privacy is not for sale. They stand against the betrayal of contributors worldwide. They have never and will never sell your data, your content or your likeness. They block and strictly prohibit AI companies from scraping content from their site for training their LLMs. The moderators strictly forbid the direct use of LLM content in their threads.

Experts deserve a place where they can confidently share their knowledge without worrying about a corporation stealing it, in effect, to increase shareholder value, and humanity deserves a safe haven from AI, and you deserve real answers to your legitimate questions from true experts. That's what you get at Experts Exchange. Now, they know there are people like me who haven't tried it in a while. They know that maybe you've never even heard of it. So they're going to give you 90 days free. No, you don't even need to give them a credit card. Three months to try it, to see if it's what you're looking for. I think it is. 90 days free when you go to e-e.com/twit. That's e-e.com/twit. You know they've been around for a while. They got a three-letter com domain. Those are rarer than hen's teeth. Visit e-e.com to learn more. Experts Exchange. Thank you, Experts Exchange, for supporting our local expert here, Mr. Steve Gibson, and thanks to you for using that address. I know you saw it on Security Now. Experts Exchange, e-e.com/twit. On we go, Mr. G.

0:45:59 - Leo Laporte

So one of the rules of the road is that companies that are owned by the public through publicly traded stock have a fiduciary duty to tell the truth to their stockholders.

Oh, wouldn't that be nice. Yes, when something occurs that could meaningfully affect the company's value. For example, on December 14, 2020, the day after the Washington Post reported that multiple government agencies had been breached through SolarWinds Orion software, the company itself, SolarWinds, stated in an SEC filing that fewer than 18,000 of its 33,000 Orion customers were affected. Still, 18,000 customers affected made it a monumental breach, and there was plenty of fault to be found in SolarWinds' previous and subsequent behavior. But they fessed up. They said, OK, this is what happened. But I didn't bring this up to talk about them. I wanted to share some interesting reporting by CyberScoop, whose headline a week ago was SEC hits four companies with fines for misleading disclosures about SolarWinds hack, in other words, for misleading the public about the impact their use of SolarWinds Orion software had on their businesses and how it might affect their shareholders' value. CyberScoop's subhead was: Unisys, Avaya, Check Point and Mimecast will pay fines to settle charges that they downplayed in SEC filings the extent of the compromise. This is the point that I wanted to make. The management of companies owned by the public need to tell the truth. So let's take a closer look at this.

CyberScoop wrote: The Securities and Exchange Commission (you know, the SEC) said it has reached a settlement with four companies for making misleading disclosures, describing the damage to their systems and data as theoretical, despite knowing that substantial amounts of information had been stolen. You know, in other words, they outright lied to their shareholders. CyberScoop said the acting director of the SEC's Division of Enforcement said in a statement: As today's enforcement actions reflect, while public companies may become targets of cyber attacks, it's incumbent upon them to not further victimize their shareholders or other members of the investing public by providing misleading disclosures about the cybersecurity incidents they've encountered. Here, the SEC's orders find that these companies provided misleading disclosures about the incidents at issue, leaving investors in the dark about the true scope of those incidents. As part of the settlement agreement reached, the companies have agreed to pay fines with no admission of wrongdoing. OK, so in the first place, they're not having to say, we lied. OK, so there's that. But then I was unimpressed, frankly, by the amounts. Unisys will pay $4 million, Avaya $1 million, Check Point $995,000, and Mimecast $990,000.

According to the SEC, by December 2020, Avaya, for example, already knew that at least one cloud server holding customer data and another server for their lab network had both been breached by hackers working for the Russian government. The company was also alerted that its cloud email and file-sharing systems had been breached, likely by the same group and through means other than the SolarWinds Orion software. A follow-up investigation identified more than 145 shared files accessed by the threat actor, along with evidence that the Russian group known as APT29, aka Cozy Bear, monitored the emails of the company's cybersecurity incident responders. So they were deeply penetrated and they knew it. Despite this, in a February 2021 quarterly report a couple of months later, Avaya described the impact in far more muted terms, saying the evidence showed the threat actors accessed only, quote, a limited number of company email messages. OK, that's a little gray. And there was, quote, no current evidence of unauthorized access in our other internal systems. OK, so you could call into question the word current, right? They knew that those representations were flatly false. In Unisys's case, an investigation uncovered that, following the disclosure of a device running Orion, multiple systems, seven network and 34 cloud-based accounts, including some with admin privileges, were accessed over the course of 16 months. The threat actors also repeatedly connected to their network and transferred more than 33 gig of data. But the SEC's cease and desist order stated that Unisys had, quote, inaccurately described the existence of successful intrusions and the risk of unauthorized access to data and information in hypothetical terms, despite knowing that intrusions had actually happened and in fact involved unauthorized access and exfiltration of confidential and or proprietary information, unquote.
The company also appeared to have no formal procedures in place for identifying and communicating high-risk breaches to executive leadership for disclosure. Anyway, there are similar instances at Check Point and Mimecast.

The problem here is I'd like to be able to draw a clear moral to this story, as it sort of started out seeming. But given the extremely modest size of these settlements relative to each company's revenue, it's not at all clear to me that the moral of our story here is that they should have divulged more. During the heat of the moment, the short-term impact upon their stock price may have been more severe than these fines, and coming four years after the event, it's reduced to a shrug. So I doubt that this outcome will wind up teaching any other companies any important lessons, and any companies that did the right thing at the time and were then punished by their stockholders for telling the truth might actually take away the opposite lesson. Let's just lie, sweep it all under the rug for now, and then three or four years later, you know, after we're hit with a modest tax for having done that, you know, the world will have moved on anyway. We'll happily pay it and we'll have lost less money than if we had told the truth right up front.

0:54:09 - Steve Gibson

I mean that's the takeaway from this. It's the cost of doing business. These companies always say Yep, yep. It's the cost of lying to our stockholders, wow.

0:54:23 - Leo Laporte

Okay. So last Tuesday, Google's security blog posted the news of five new protections being added to their Google Messages app. Although Google's postings are often a bit, you know, too full of marketing hype for my own taste, I thought this one would be worth sharing. And it's not too long, so Google wrote, and here's the marketing intro: Every day, over a billion people use Google Messages to communicate.

That's why we've made security a top priority, building in powerful on-device AI-powered filters and advanced security that protects users from 2 billion suspicious messages a month. With end-to-end encrypted RCS conversations, you can communicate privately with other Google Messages RCS users, and we're not stopping there. We're committed to constantly developing new controls and features to make your conversations on Google Messages even more secure and private. As part of Cybersecurity Awareness Month (they're getting it in just before Halloween, when it ends), we're sharing five new protections to help keep you safe when using Google Messages on Android. Okay, so we've got: enhanced detection protects you from package delivery and job scams. And I'm going to skip the paragraph describing it, because we all know what it means. You know, they're looking at the messages coming in and they're going to do some filtering to recognize when this is basically spam and flag it, warn you, whatever.

Number two: intelligent warnings alert you about potentially dangerous links. Same thing there. They're getting into your encrypted messaging using device-side AI-powered filters to deal with that. But this one's short. They said: In the past year we've been piloting more protections for Google Messages users when they receive text messages with potentially dangerous links. Again, incoming text messages being examined. They said: In India, Thailand, Malaysia and Singapore, Google Messages warns users when they get a link from unknown senders and blocks messages with links from suspicious senders. We're in the process of expanding this feature globally later this year. So then, there's not much of this year left. So that'll be coming soon to Google Messages for everybody else. Controls to turn off messages from unknown international senders is another benefit. But it was number four that most caught my attention.

Sensitive content warnings give you control over seeing and sending images that may contain nudity. They said: At Google, we aim to provide users with a variety of ways to protect themselves against unwanted content while keeping them in control of their data. This is why we're introducing sensitive content warnings for Google Messages. Sensitive content warnings is an optional feature that blurs images that may contain nudity before they are viewed, sent or forwarded. It also provides a speed bump to remind users of the risks of sending nude imagery and preventing accidental shares. All of this happens on device to protect your privacy and keep end-to-end encrypted message content private to only sender and recipient. Sensitive content warnings does not allow Google access to the content of your images, nor does Google know that nudity may have been detected. This feature is opt-in for adults, managed via Android settings, and is opt-out for users under 18 years of age, in other words, on by default for kids. Sensitive content warnings will be rolled out to Android 9 plus devices, including Android Go devices with Google Messages, in the coming months. So I'll get back to that in a second.

The last piece of the five was more confirmation about who you're messaging: traditional, standard-style cryptography, where we know what a public key is and verifying somebody's shared public key is useful. So that's the fifth thing. OK, but I want to skip back, as I said, to that fourth new feature, sensitive content warnings. Apple announced their sensitive content warnings in iOS 15, where the smartphone would detect probably sensitive content and warn its user before displaying it. Despite that potentially privacy-invading feature, which has now been in place for several years, we're all still here, just like we are without certificate revocation. The world did not end. Not only did it not end, you know, when smartphones began looking at what their users were doing, it didn't even slow down. So the idea of device-side image recognition and detection has not proven to be a problem, and Google has clearly decided to follow. But I believe there may be a larger story here. I suspect that this will be the way the world ultimately resolves that thorny end-to-end encryption dilemma that we've been looking at for several years now.

As we know, Apple initially really stepped in it by telling people that their phones would be preloaded with an exhaustive library of the world's most horrible known CSAM, you know, child sexual abuse material. No one wanted anything to do with having that crap on their phone, and even explaining that it would be fuzzy matching hashes rather than actual images did nothing to mollify those who said, uh, Apple, thanks anyway. I'll go get an Android phone before I let you put anything like that on my iPhone. Apple received that message loud and clear and quickly dropped the effort. But then, right in the middle of the various European governments' and especially the UK's very public struggles over this issue, facing serious pushback from every encrypted messaging vendor saying they would rather leave than compromise their users' security, AI suddenly emerges on the scene and pretty much blows everyone's mind with its capabilities and with what it means for the world's future.

If there's been any explicit mention of what AI might mean to highly effective local on-device filtering of personal messaging content, I've missed it, but the application seems utterly obvious and I think this solves the problem in a way that everyone can feel quite comfortable with. The politicians get to tell their constituents that, quote, next-generation AI will be watching everything their kids' smartphones send and receive and will be able to take whatever actions are necessary without ever needing to interfere with or break any of the protections provided by full, true end-to-end encryption, unquote. So everyone retains their privacy with full encryption, and the bad guys will soon learn that they're no longer able to use any off-the-shelf smartphones to send or receive that crap. It seems to me this really does put that particular intractable problem to rest, and just in the nick of time. I'll note one more thing about this: AI-based on-device filtering will eventually and probably, excuse me, inevitably be extended to encompass additional unlawful behavior.

We know that governments and their intelligence agencies have been credibly arguing that terrorists are using impenetrable encryption to organize their criminal activities.

So I would not be surprised if future AI-driven device-side detection were further expanded to encompass more than just the protection of children. This, of course, raises the specter of Big Brother, you know, monitoring our behavior and profiling, which is creepy all by itself, and I'm not suggesting that's an entirely good thing, because it does create a slippery slope. But at least there we can apply some calibration and implement whatever policies we choose as a society. What is an entirely good thing is that those governments and their intelligence agencies who have been insisting that breaking encryption and monitoring their population is the only way to be safe will have had those arguments short-circuited by AI. Those arguments will finally be put to rest, with encryption having survived intact and arguably giving the intelligence agencies what they need. So, anyway, it hadn't occurred to me, Leo, before now, but it seems to me that that's a powerful thing that AI on the device can do, and it really can and should satisfy everybody.

1:05:08 - Steve Gibson

Yeah, well, I'm glad Google's doing what they're doing. I mean, I think that seems like a sensible plan, yep, and, as you notice, opt in for adults and opt out for kids, which is exactly how it should be.

1:05:22 - Leo Laporte

Yep, agreed. Yeah, okay, I stumbled on a surprising app that I was never aware of. So, while we're on the subject of encrypted apps, an app known as Session (and for anybody who's listening live and wants to jump ahead, getsession.org is the URL) is a small but increasingly popular encrypted messaging app. Session announced that it would be moving its operations outside of Australia, get this, Leo, after the country's federal law enforcement agency visited an employee's residence and asked them questions about the app and about a particular user of the app.

As a result of that nighttime intrusion, Session will henceforth be maintained by a neutral organization based in Switzerland. Good, good, yes, that's appalling, it is. It was like, whoa, whoa, you know, a knock on your door and there's, you know, Australia's federal law enforcement saying, you work for this Session company, right? So we need some information about one of your users. Well, wait till you hear how impossible it is for them to answer that question.

404 Media noted that this move signals the increasing pressure on maintainers of encrypted messaging apps, both when it comes to governments seeking more data on app users, as well as targeting messaging app companies themselves. They cited the recent arrest of Telegram's CEO in France last August. Alex Linton, the president of the newly formed Session Technology Foundation, which will publish the Session app from Switzerland, told 404 Media in a statement, quote: Ultimately, we were given the choice between remaining in Australia or relocating to a more privacy-friendly jurisdiction such as Switzerland. The app will still function in Australia, but we won't. Okay. So I wasn't aware of the Session messaging app at all until I picked up on the news of this departure, but it looks quite interesting and I wanted to put it on everyone's radar. It combines the well-proven Signal protocol, which Session forked on GitHub, with the distributed, IP-hiding, Tor-style onion routing which we briefly discussed again recently. And, on top of all that, Session is 100% open source and, as I mentioned, all of it lives on GitHub.

Since all of this piqued my curiosity, I tracked down a recent white paper describing Session which was written in July of this year. It's titled Session: End-to-End Encrypted Conversations with Minimal Metadata Leakage, and that's the key. The white paper's abstract describes Session in a couple sentences. It says: Session is an open source, public-key-based secure messaging application which uses a set of decentralized storage servers and an onion routing protocol to send end-to-end encrypted messages with minimal exposure of user metadata. It does this while providing the common features expected of mainstream messaging applications, such as multi-device syncing, offline inboxes and voice/video calling. Huh, okay, well, I would imagine that the Australian feds were probably left quite unsatisfied by the answers anyone knowledgeable of Session's design would have provided to them during their visit in the evening. They would have explained that Session's messaging transport was deliberately designed, like Tor's, to hide each endpoint's IP address through a multi-hop, globally distributed server network. And the content of the messages uses the impenetrable Signal protocol, used by Signal and WhatsApp, to exchange authenticated messages between the parties. And if this didn't already sound wonderful, listen to the system's mission statement from the white paper's introduction. They said: Over the past 10 years there's been a significant increase in the usage of instant messengers ... have been widely discussed.

Most current methods of protecting user data are focused on encrypting the contents of messages, an approach which has been relatively successful. The widespread deployment of end-to-end encryption does increase user privacy. However, it largely fails to address the growing use of metadata by corporate and state-level actors as a method of tracking user activity. In the context of private messaging, metadata can include the IP addresses and phone numbers of the participants, the time and quantity of sent messages and the relationship each account has with other accounts. Increasingly, it is the existence and analysis of this metadata that poses a significant privacy risk to journalists, protesters and human rights activists.

Session is, in large part, a response to this growing risk. It provides robust metadata protection on top of existing cryptographic protocols, which have already been proven to be effective in providing secure communication channels. Session reduces metadata collection in three key ways. First, Session does not require users to provide a phone number, email address or any other similar identifier when registering a new account. In fact, no identifier. I've done it. Instead, pseudonymous public-private key pairs are the basis of an account's identity, and the sole basis. Secondly, Session makes it difficult to link IP addresses to accounts or messages sent or received by users through the use of an onion routing protocol. Same thing Tor does. And third, Session does not rely on central servers. A decentralized network of thousands of economically incentivized nodes performs all core messaging functionality. For those services where decentralization is impractical, like storage of large attachments and hosting of large group chat channels, Session allows users to self-host infrastructure and rely on built-in encryption and metadata protection to mitigate trust concerns. In other words, wow.
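For readers following along in the transcript, the onion routing idea described above can be sketched in a few lines. This is a toy illustration only, not Session's or Tor's actual protocol: the node names, the pre-shared per-hop keys, and the SHA-256 based XOR "cipher" are all assumptions made to keep the example self-contained, and the cipher here is not secure. Real systems negotiate per-hop keys with public-key cryptography and use authenticated encryption.

```python
import hashlib
import json

def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with a SHA-256 counter keystream.
    Illustrative only, NOT secure. Applying it twice decrypts."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)

def wrap(message: bytes, route: list[tuple[str, bytes]], destination: str) -> bytes:
    """Build the onion from the inside out: the last hop's layer goes on first."""
    packet, next_hop = message, destination
    for name, key in reversed(route):
        layer = json.dumps({"next": next_hop, "payload": packet.hex()}).encode()
        packet = keystream_xor(key, layer)
        next_hop = name
    return packet

def peel(packet: bytes, key: bytes) -> tuple[str, bytes]:
    """What one node does: remove its own layer, learn only the next hop."""
    layer = json.loads(keystream_xor(key, packet))
    return layer["next"], bytes.fromhex(layer["payload"])

# Hypothetical three-hop route; each node knows only its own key.
route = [("node-a", b"key-a"), ("node-b", b"key-b"), ("node-c", b"key-c")]
packet = wrap(b"already end-to-end encrypted message", route, "recipient-inbox")
for name, key in route:
    next_hop, packet = peel(packet, key)
    print(f"{name} forwards to {next_hop}")
```

Each node sees who handed it the packet and where to send it next, but never both the original sender and the final destination, which is the property that makes IP addresses hard to link to accounts.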

As we know, Pavel Durov, the Telegram guy, freed himself by agreeing to, where warranted, share IP addresses and whatever other metadata Telegram collected with law enforcement, and we know that Apple, Signal and WhatsApp all similarly keep themselves out of hot water with governments and law enforcement by cooperating to the degree they're able to, and they are able to. They're able to provide IP addresses and related party identifiers. They may not be able to peer into the content of conversations, but the fact of those conversations and the identity of the parties conversing is knowable, shared and shareable. It occurred to me since I put this down: another perfect example of the power of metadata is cryptocurrency and the blockchain. Much was made of the fact that, oh, it's completely anonymous, don't worry about this. You know, you have your key in the blockchain. All transactions are anonymous. Well, we know how well that worked, right? We're able to see money moving and perform associations when it comes out of the cryptocurrency realm. So, again, we're not able to see who, but there's metadata that's being left behind.

Session was created to, to every degree possible, also prevent metadata leakage, to deliberately create a hyper-private messaging system. They saw the clear handwriting on the wall and decided after that visit from their local feds that they would need to move from Australia sooner or later, so it might as well be sooner. The messaging app again is called Session, and it's available in several flavors for Android and iOS smartphones, as well as for Windows, Mac and Linux desktops. From here, it appears to be a total win. Establishing an anonymous identity with a public-private key pair is exactly the right way to go, and that's exactly what they do, plus much more, and with all their source code being openly managed on GitHub. In addition to the 34-page technical white paper, there's also a highly accessible five-page light paper, as they called it, which carries their slogan, Send Messages, Not Metadata. So the URL once again is getsession.org, where you'll find a software download page. It's at getsession.org/download, as well as links to both that five-page light paper and the full 34-page white paper. So it looks like a complete win to me.

1:16:39 - Steve Gibson

So I have a couple of questions for you about this, prompted by some folks in our YouTube chat. We have chat now in eight different platforms, so I'm trying to monitor it all.

1:16:54 - Leo Laporte

Are they all merged?

1:16:55 - Steve Gibson

Yeah, I have a merged view, so I can see it. Imran in our YouTube chat says: note that Session has removed perfect forward secrecy and deniability from the Signal protocol. And they did that a few years ago. They say you don't need PFS because that would require full access to your device, and if you had full access, you know, really, the jig is up no matter what, and deniability is not necessary because they don't keep any metadata about you, so you don't have to worry about that. Does that seem true to you? I think that's correct.

1:17:31 - Leo Laporte

The concern with perfect forward secrecy is that the NSA is filling that large server farm. They're saving it all.

Yes, that massive data warehouse, and at some point in the future, if and when we actually do get quantum computing able to break today's current public key technology, then they could retroactively go back and crack that. Unfortunately, or fortunately rather, Signal has already gone to post-quantum technology. Re-keying cuts off somebody who manages to penetrate public key technology for the duration of your use of that key. That allows them to get the symmetric key and decrypt that chunk of conversation. It's not clear at all today whether there's ever going to be a way to do that for anybody using the Signal protocol, where you're using both pre- and post-quantum public key technology.

So it doesn't really matter. They needed to do that in order to add the features that they wanted to, right? That makes sense. Okay, I'll try it.
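The re-keying idea discussed in this exchange can be illustrated with a minimal symmetric "hash ratchet" sketch. This is a simplified stand-in and an assumption on the editor's part, not the Signal protocol's actual construction (which also mixes in fresh key agreements); the labels `b"message"` and `b"chain"` and the starting secret are made up for the example.

```python
import hashlib
import hmac

def ratchet(chain_key: bytes) -> tuple[bytes, bytes]:
    """Derive (next_chain_key, message_key) from the current chain key.
    HMAC is one-way, so a later chain key does not reveal earlier keys."""
    message_key = hmac.new(chain_key, b"message", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"chain", hashlib.sha256).digest()
    return next_chain_key, message_key

# Hypothetical starting secret both parties already share.
chain = hashlib.sha256(b"example shared secret").digest()

message_keys = []
for _ in range(3):
    chain, key = ratchet(chain)   # the old chain key is overwritten
    message_keys.append(key)

# Every message gets its own key, so exposing one key exposes one chunk.
print(len(set(message_keys)), "distinct message keys")
```

Because each step throws away the previous chain key, someone who captures the current state cannot run the ratchet backwards to decrypt traffic recorded earlier, which is the property perfect forward secrecy is after.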

1:19:03 - Steve Gibson

I mean the only real disadvantage is that you'll be the only one in your family using it.

1:19:08 - Leo Laporte

Can you? Yes, and so it would be where you have a situation where you have some specific people that you want to have a really guaranteed private conversation with. It's not going to be like, oh, what's your Signal handle, and then add them to Signal. But still, for somebody who really wants true private communications, this goes further now than anything we've seen so far. They've got the state-of-the-art best messaging encryption technology in Signal, married to onion routing, which is quite clever, and no server has the full message.

1:19:52 - Steve Gibson

That's the interesting thing, right, right.

1:19:54 - Leo Laporte

Yeah.

1:19:55 - Steve Gibson

Right, it's completely decentralized. I think that's so cool, and it always bothered me that Signal wanted my phone number. I just, it didn't feel like that. Yeah, and I think they've announced they're backing away from that now. Yeah, they're going to have usernames. They keep saying that, yeah.

1:20:08 - Leo Laporte

Yeah, and it's like okay, you know, and will it be a many to many? I know that I was able to have it on one phone and one desktop, but I wasn't able to have it on two phones and multiple desktops, so you know some arbitrary limitations. I downloaded this thing and it's on all of my phones and desktops and they found each other With the same private key.

1:20:33 - Steve Gibson

Yes, so you're able to propagate it to other devices.

1:20:36 - Leo Laporte

Yes, oh, that's cool. Yes, when you create it, it gives you a QR code. It shows it to you in hex and in that word salad form you know bunny gopher lawn artichoke asparagus, and so I copied that and then pasted that into a different device and it found me. It said oh, here you are, and it got my picture and everything worked.
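The "word salad" form of a key mentioned here is just another encoding of the same bytes shown in hex. The toy sketch below makes that concrete with a made-up 16-word list, one word per hex digit; the word list and function names are hypothetical, and real recovery-phrase schemes use far larger word lists plus a checksum, so this is not Session's actual format.

```python
import secrets

# Hypothetical 16-word list: one word for each hex digit 0..f.
WORDS = ["bunny", "gopher", "lawn", "artichoke", "asparagus", "maple",
         "otter", "pebble", "quill", "raven", "sable", "tulip",
         "umber", "violet", "willow", "zebra"]

def to_words(key: bytes) -> str:
    """Encode key bytes as a phrase, one word per hex digit."""
    return " ".join(WORDS[int(digit, 16)] for digit in key.hex())

def from_words(phrase: str) -> bytes:
    """Decode a phrase produced by to_words back into the key bytes."""
    digits = "".join(format(WORDS.index(word), "x") for word in phrase.split())
    return bytes.fromhex(digits)

key = secrets.token_bytes(4)          # stand-in for a real private key
phrase = to_words(key)
print(key.hex(), "->", phrase)
assert from_words(phrase) == key      # pasting the phrase elsewhere recovers the key
```

Copying the phrase to another device and decoding it yields the same key, which is why the second device can find the same account.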

1:21:01 - Steve Gibson

I'm going to install it right now.

1:21:03 - Leo Laporte

It's slick, and again, all three desktop platforms, Windows, Mac and Linux, and both phone platforms. Nice, let's take another break. Reliability, briefly, and then close the loop and plow into this question of the future of the internet. And does anyone ever even care?

1:21:30 - Steve Gibson

about IPv6 anymore. Wow, you know we used to have Vint Cerf on and he was like banging the drum. We're going to run out of IP addresses. We're going to run out of IP addresses. We've got to go to.

1:21:41 - Leo Laporte

IPv6. What's really interesting is the chart of the US adoption. We'll get there.

1:21:46 - Steve Gibson

Oh, I can't wait. All right, but right now a word from our sponsor, the folks, the great folks. I've talked to them at ThreatLocker. ThreatLocker is a very, very good way to do endpoint security, and I'll explain how in just a second. But first let me ask you a rhetorical question, because I already know the answer. Do zero-day exploits and supply chain attacks keep you up at night? They do, if you're in charge of the network, right. Well, worry no more, because you can harden your security, I mean, really harden it, with ThreatLocker.

Worldwide companies like JetBlue, for instance, trust ThreatLocker to secure their data and keep their business operations flying high, if you will. The key with ThreatLocker is it's zero trust, which is such a clever way to handle a very big issue. Imagine taking a proactive, and these are the key words here, deny-by-default approach to cybersecurity. You block every action, you block every process, you block every user, unless authorized by your team. ThreatLocker helps you do that and provides a full audit of every action, which is really great to have for risk management and compliance. Of course, their 24-7 US-based support team fully supports onboarding and beyond. They'll be with you every step of the way. You can stop the exploitation of trusted applications within your organization and keep your business secure and protected from ransomware, all using a very simple concept: zero trust.

And we're talking about SolarWinds. Listen to this, Steve. Organizations across any industry can benefit from ThreatLocker's ring fencing. Ring fencing isolates critical and trusted applications from unintended uses or weaponization. It limits attackers' lateral movement throughout their network. ThreatLocker's ring fencing was able to foil a number of attacks that were not stopped by traditional EDR, and specifically that SolarWinds Orion attack we were talking about, foiled by ring fencing. And if you think about it, it makes sense, because you're blocking that lateral movement without explicit authorization. Oh, and ThreatLocker works for Macs too, so it doesn't matter what you've got on your network. ThreatLocker can help you keep it safe. Get unprecedented visibility and control of your cybersecurity quickly, easily and cost-effectively.

ThreatLocker's Zero Trust Endpoint Protection Platform offers a unified approach to protecting users, devices and networks against the exploitation of zero-day vulnerabilities. This is such a great solution. Get a free 30-day trial. Learn more how ThreatLocker can help mitigate unknown threats and, at the same time, ensure compliance. Visit ThreatLocker.com. Easy to remember. You're putting everything inside the ThreatLocker. ThreatLocker.com. We thank them so much for their support. We've talked about zero trust on the show before, and it's clear how this helps. But now there's an easy way to do it and, by the way, it can be very inexpensive too, so you should check it out. It's not just for big companies. You can do it with a small company too, very easily. ThreatLocker.com. Stephen, your turn.

1:25:12 - Leo Laporte

So thank you. The EU's proposed wholesale revision of the software liability issue has, not surprisingly, drawn a huge amount of attention from the tech press. We gave it enough attention here last week, but I was glad to see that I didn't misstate or misinterpret the effect and intent of this new EU directive. It really is what it appears to be. One reporter wrote about this: the EU and US are taking very different approaches to the introduction of liability for software products. While the US kicks the can down the road, the EU is rolling a hand grenade down it to see what happens. Under the status quo, the software industry is extensively protected from liability for defects or issues, and this results in, I love this, systemic underinvestment in product security. Authorities believe that by making software companies liable for damages when they peddle crapware, those companies will be motivated to improve product security, and of course, we can only hope. I also wanted to share part of what another writer wrote for The Record. He wrote: six years after Congress, US Congress, tasked a group of cybersecurity experts with reimagining America's approach to digital security, get this, virtually all of that group's proposals have been implemented, but there's one glaring exception that has especially bedeviled policymakers and advocates: a proposal to make software companies legally liable for major failures caused by flawed code. Software liability, he writes, was a landmark recommendation of the Cyberspace Solarium Commission, a bipartisan team of lawmakers and outside experts that dramatically elevated the government's attention to cyber policy through an influential report that has seen roughly 80% of its 82 recommendations adopted. Recent hacks and outages, including at leading vendors like Microsoft and CrowdStrike, have demonstrated the urgent need to hold software companies accountable, according to advocates for software liability standards.
But despite the Solarium Commission's high-profile backing and the avowed interest of the Biden administration, this long-discussed idea has not borne fruit. Interviews with legal experts, technologists and tech industry representatives reveal why: software liability is extremely difficult to design, with multiple competing approaches, and the industry warns that it will wreck innovation and even undermine security.

Jim Dempsey, senior policy advisor at Stanford University's Program on Geopolitics, Technology and Governance, said, quote, the Solarium Commission and Congress knew that this was going to be a multi-year effort to get this done. This is a very, very, very hard problem. Unquote. A recent spate of massive cyber attacks and global disruptions, including the SolarWinds supply chain attack, the MOVEit ransomware campaign, the Ivanti hacks, the CrowdStrike outage and Microsoft's parade of breaches, has shined a spotlight on the world's vulnerability to widely distributed but sometimes poorly written code. Dempsey added, quote, there's a widespread recognition that something's got to change. We're way too heavily dependent on software that has way too many vulnerabilities. Unquote.

The software industry's repeated failures have exasperated experts who see little urgency to address the roots of the problem, but bringing companies to heel will be extremely difficult. An associate professor at Fordham School of Law who specializes in cybersecurity and platform liability said, quote, we have literally protected software from almost all forms of liability comprehensively since the inception of the industry decades ago. It's just a golden child industry. Unquote. Virtually all software licenses contain clauses immunizing vendors from liability. Policymakers originally accepted this practice as the cost of helping a nascent industry flourish, but now that the industry is mature and its products power all kinds of critical services, it will be an uphill battle to untangle what Dempsey called, quote, the intersecting legal doctrines that have insulated software developers from the consequences. Unquote. So, Leo, we, this podcast, certainly have not been alone in just observing, over the last 20 years that we've been doing this, like, this is wrong, this has to change. But also, change is hard. In other words, IPv6. No, okay, one last little point that I thought was interesting.

As we know, a recurring event in security news recently has been the industry's inadvertent hiring of fake IT workers, generally those purporting to be domestic but actually, it turns out, working for North Korea, or at least North Korean interests. Hopefully this has not been happening for long undetected, since there really seems to be a lot of it going around. Maybe we're just suddenly shining a light on it, so we're seeing a lot of it. I shared the hoops that I had to jump through recently during that one-way video conference with a DigiCert agent, following his instructions as I moved my hands around my face, just holding up the government-issued ID card and demonstrating that I was me. As far as I know, the coverage of this, that is, the coverage of the North Korean identity spoofing, hasn't actually revealed any malfeasance on the part of these North Korean employees before now. It is certainly illegal to hire them, but they were faking their identities. It turns out that's changed. The creator of a blockchain known as Cosmos, the Cosmos blockchain, has admitted that the company inadvertently hired a North Korean IT worker. The company said the FBI notified the project about the North Korean worker, but get this, the individual at the company who received the notification did not report the incident to his managers. What? And moreover, Cosmos says that the code contributed by the North Korean worker did contain at least one major vulnerability. The company is now performing a security audit to review all the code for other issues. So we can only hope that these now continuing revelations will lead to many more real-time video conferences, such as the one that I had with DigiCert to prove that I was actually me. You know, just forwarding a file with some headshots, that's not going to do it any longer.

Oh, and I mentioned this at the top: a listener suggested something I hadn't thought of before. His name is Brian. He said please add me to your Security Now podcast GRC list. He said I'm an occasional listener and appreciate all of your information and tips shared. Regards, Brian. That's all he said, but I wanted to share this because Brian is a mode of listener who can obtain a value from GRC's weekly podcast synopsis mailing that I'd never considered. I often do hear from listeners who have fallen behind in listening or who aren't always able to find the time to listen. So Brian's note made me realize that the weekly mailings, which, as I said at the top of the show, went out to 11,717 people yesterday afternoon in this case, can come in quite handy when making a determination about how to invest one's time. You look at the list, you go, ooh, there are a couple of things here that I want to hear about, and then you grab the podcast. So thank you, Brian, for the idea.

1:34:52 - Steve Gibson

It's good for us to remember there are people who don't listen to every single show. Yes, I think that's an excellent point yeah.

1:34:59 - Leo Laporte

In this day and age there is a lot of competition for people's time and attention.

1:35:04 - Steve Gibson

It always worried me, you know, that people would give up on the show if they couldn't listen to every one. You know, I stopped subscribing to the New Yorker because it ended up being such a big pile of magazines, and there's this guilt, like you have to read every issue instead of just dipping into it. So don't feel guilty.

1:35:35 - Leo Laporte

It's okay to not listen to every episode. Have two, one's only for Security Now listeners, and the other is just general GRC news, I'm sure, since I'll be talking about anything I'm doing at GRC. Oh, speaking of which, I forgot to mention that because I finished the podcast yesterday afternoon, yesterday evening I updated GRC's technology for four digits of podcast numbering, so when we go from 999 to 1000, everything should work smoothly. So that is now in place. So, OK, two more pieces. Martin in Denmark said hi, Steve, love the podcast. Been with you guys from episode zero. Unfortunately, I do wish we'd started at zero, Leo, just didn't occur to me. You know, we were green back then, you know, we were newbies.

I thought, um, we're never going to get to 999, we're not even going to get to 300. So here we are.

1:36:44 - Steve Gibson

I just want to point out as a coder, there's a language that I love in every respect, called Julia. My biggest complaint is it counts arrays from one, not zero, and I feel like I'm sorry, I can't do that. I just can't do that. In every other respect it's a wonderful language, but that's a bridge too far. Yeah, it's supposed to be an offset not a number, exactly, and so is it a number or an offset.
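The offset-versus-number point can be made concrete with a small Python sketch. The names here (`RECORD`, `byte_offset_zero_based`, `byte_offset_one_based`, `read_at`) are made up for illustration, and nothing below is Julia code. It only shows that a zero-based index is already an offset you can multiply by the element size, while a one-based number needs a subtraction first.

```python
import struct

# Each element is one little-endian 32-bit unsigned integer (4 bytes).
RECORD = struct.Struct("<I")


def byte_offset_zero_based(index: int) -> int:
    """A zero-based index is already an offset: just scale by element size."""
    return index * RECORD.size


def byte_offset_one_based(number: int) -> int:
    """A one-based position is a count, so subtract one before scaling."""
    return (number - 1) * RECORD.size


# Three elements packed back to back, like a small array in memory.
buffer = b"".join(RECORD.pack(value) for value in (10, 20, 30))


def read_at(offset: int) -> int:
    """Read the element that starts at the given byte offset."""
    return RECORD.unpack_from(buffer, offset)[0]


first_by_offset = read_at(byte_offset_zero_based(0))  # element at offset 0
first_by_number = read_at(byte_offset_one_based(1))   # "element number 1"
```

Both calls land on the same bytes. The only difference is where the minus one lives: in the programmer's head with zero-based indexing, or in the language with one-based indexing.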

1:37:10 - Leo Laporte

Right. Oh well. So Martin in Denmark says I have a question about the stuff SpinRite does when, quote, speeding up an SSD, unquote. He said my computer is due for a reformat and a reinstall of Windows. Windows is slowing down as it does, but it seems worse than usual. He has that in quotes. So I think my SSD could use a little help. Since I'm going to nuke the drive anyway, is there a way to do the same stuff that SpinRite does without SpinRite? He said I assume that using Windows installer or DiskPart to clean the disk just wipes the file system slash partition table and does nothing else. Am I right that a poor man's solution would be to delete the partitions on the drive and make a new one and then fill it with random data? I don't own SpinRite, he said, parens, money reasons, yada, yada, and was just wondering if there's another way, as I don't care about the data on the drive. Regards, Martin in Denmark.